Migration From GCP to AWS / Kubernetes Implementation

Executive Summary

One of the fastest-growing startups, our client helps customers in Africa save up to 30% of their expenses on fresh produce and packaged consumer goods. Such a socially oriented mission needs a robust technical foundation. For this reason, the client chose Matoffo as a reliable and experienced cloud development provider that could meet this need quickly and in a client-centric manner. Our long-term experience and out-of-the-box approach to complex tasks led to fruitful cooperation with the client, resulting in a successful migration from GCP to AWS and a Kubernetes implementation.

About the Customer

Aimed at reducing the cost of living in Africa, our client is a unique organization that serves 1000k customers in 25 neighborhoods in Nairobi. As one of the most relevant B2C eCommerce models for the urban African majority, the company enables customers to access lower prices, better quality items, and a more extensive range of products. While the organization supports local societies, it also hopes to utilize the power of modern technologies in order to lessen the burden of buying food for Kenyan buyers, many of whom are grappling with sky-rocketing food costs.

Customer Challenge

It is always great when a company grows and expands, especially one that serves social goals. Nevertheless, as the organization fulfills its social mission, it also requires a stable digital foundation for its online environment. The company was hosted on Google Cloud, and those services could no longer meet the client's growing needs. With this in mind, our client decided to migrate to AWS in order to use the full potential this cloud computing platform offers.

Why AWS

To put it briefly, AWS has a lot of services and native solutions that can fit into product development. More than that, AWS has significantly more services (and features within these services) than any other cloud provider. That alone already sounds like a valid reason. All in all, with such a wide array of highly available, fault-tolerant, and flexible building blocks, AWS is a strong choice for setting up the infrastructure.

Why Matoffo

Fully dedicated to building and supporting secure, reliable, and scalable AWS cloud-native solutions, Matoffo is a certified and reliable cloud development provider trusted by both the fastest-growing startups and the largest enterprises. Yet what makes us stand out among the competitors? Our philosophy lies in building the most generic and solid infrastructure and avoiding any workarounds, because we believe that workarounds may hinder future scaling. That is why we always try to provide easy-to-use solutions that do not take weeks to understand.

Matoffo Solution

Before initiating the development process, we always get to the core of the customer's problem. For this reason, we first clarified the goal of the migration and the benefits the end user would get from it. Next, we moved to the second part: estimating time, cost, and effort. Finally, we took into account that a migration requires a deep understanding of application dependencies, that is, the set of services each application requires.

A migration typically also includes a database migration or the migration of other data. Last but not least, it is essential to plan for possible downtime during data migration and service recreation. Because it is important for our clients to know all these points, we gathered the information and started with diagrams and initial blueprints to figure out how the future setup should look.

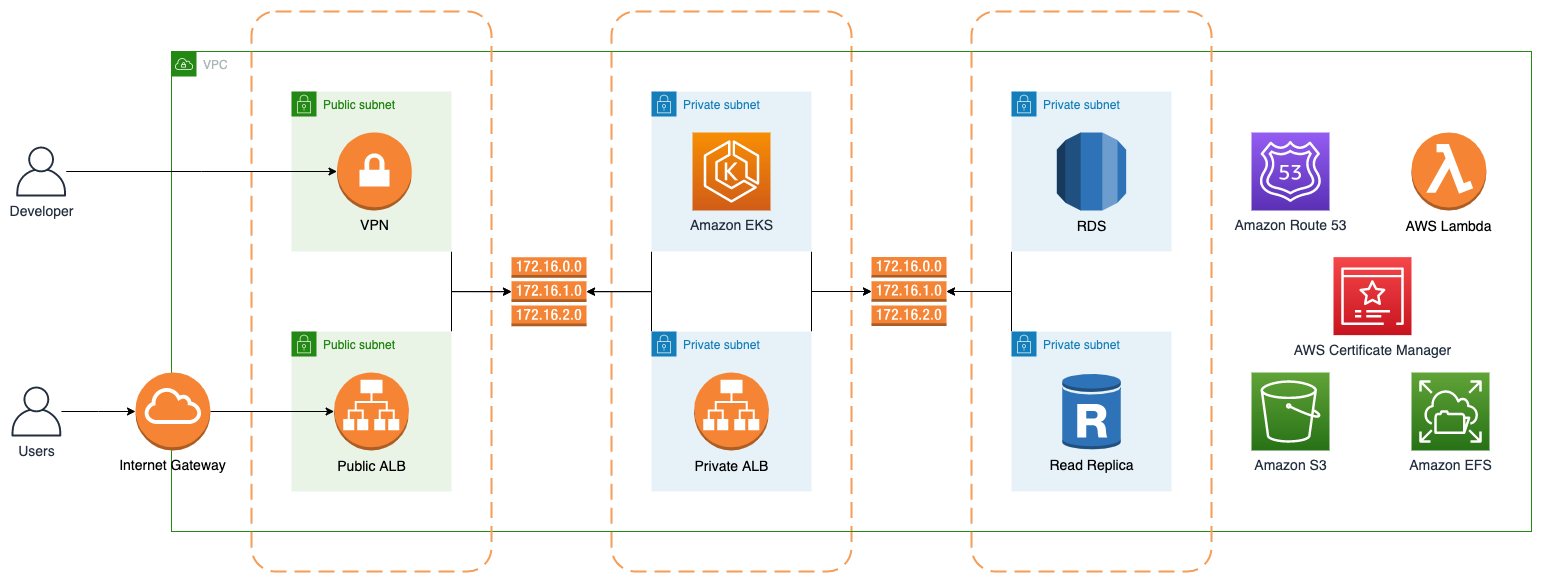

First comes the foundation of any infrastructure: the Virtual Private Cloud (VPC), where all our components live in the AWS Cloud (see the picture "Network Diagram").

Following best practices, we spread our subnets across Availability Zones and made our EKS cluster and all compute resources completely private to prevent direct access from the Internet. To reach this goal, we placed EKS in private subnets so that all outbound Internet traffic goes through NAT gateways. Databases are isolated and accessible only from within the VPC, as they need to communicate only with services inside it. Developers get private access through a VPN that we also provided.
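The subnet layout described above can be sketched as a small planning script. This is only an illustration: the case study does not state the VPC CIDR, the region, or the number of Availability Zones, so `10.0.0.0/16`, the zone labels, and the `/20` subnet size are assumptions, and `plan_subnets` is a hypothetical helper. The "public" tier is included because NAT gateways have to sit in a subnet with a route to an Internet gateway.

```python
import ipaddress


def plan_subnets(vpc_cidr, zones, new_prefix=20):
    """Carve one public, one private, and one database subnet per zone
    out of the VPC range, using equal-sized, non-overlapping blocks."""
    vpc = ipaddress.ip_network(vpc_cidr)
    blocks = vpc.subnets(new_prefix=new_prefix)  # iterator over /20 blocks
    plan = []
    for tier in ("public", "private", "database"):
        for zone in zones:
            plan.append({"tier": tier, "zone": zone, "cidr": str(next(blocks))})
    return plan


# Assumed values, not taken from the project.
PLAN = plan_subnets("10.0.0.0/16", ["az-a", "az-b", "az-c"])

for subnet in PLAN:
    print(f"{subnet['tier']:<9}{subnet['zone']:<6}{subnet['cidr']}")
```

In this layout, EKS worker nodes would go into the "private" tier, NAT gateways and public load balancers into the "public" tier, and RDS into the "database" tier, which has no route to the Internet at all.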

Since the customer had previously hosted their microservices on Kubernetes, we decided not simply to move the existing setup to EKS as it was, but to improve it along the way. As the applications were described with plain Kubernetes manifests, we agreed to package them as Helm charts. This gives us faster and easier release, rollout, and rollback processes for our applications.
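To make the rollout and rollback benefit concrete, here is a minimal sketch that builds the Helm 3 commands involved. The release name, chart path, namespace, and values file are hypothetical, and in the actual project deployments were driven by GitOps rather than by hand, so treat this as an illustration of what a chart makes possible rather than as the project's workflow.

```python
import shlex


def helm_upgrade_cmd(release, chart, namespace, values_file, timeout="5m"):
    """Install or upgrade a release; --atomic rolls the release back
    automatically if the upgrade does not succeed within the timeout."""
    return [
        "helm", "upgrade", "--install", release, chart,
        "--namespace", namespace,
        "--values", values_file,
        "--atomic", "--timeout", timeout,
    ]


def helm_rollback_cmd(release, revision, namespace):
    """Return to an earlier revision of the release."""
    return ["helm", "rollback", release, str(revision), "--namespace", namespace]


# Hypothetical names, used only for illustration.
print(shlex.join(helm_upgrade_cmd("orders", "./charts/orders", "prod", "values-prod.yaml")))
print(shlex.join(helm_rollback_cmd("orders", 3, "prod")))
```

The commands are returned as argument lists so they can be passed to `subprocess.run` without shell quoting issues.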

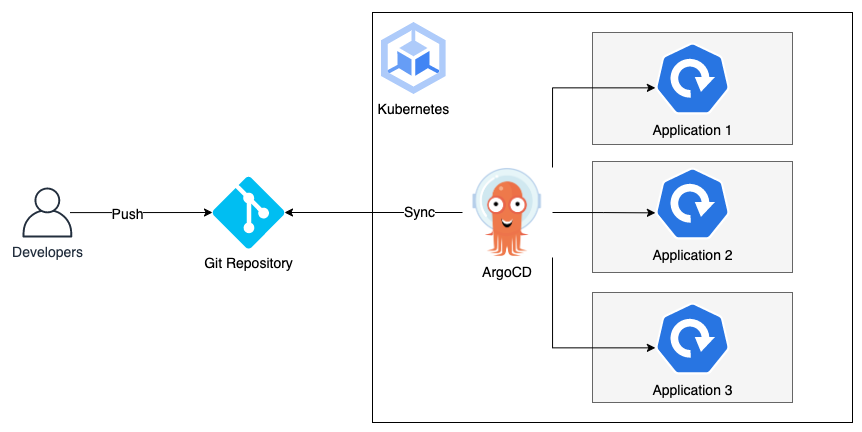

Here comes the most interesting part of the project: deploying our applications with no manual intervention. We chose the GitOps approach because it lets us use Git as the single source of truth and prevents any manual modifications. All Kubernetes manifests are located in a separate repository dedicated to the components that relate to Kubernetes.

As the GitOps tool for deployments, we decided to install ArgoCD. One of its best features is a convenient UI, to which developers can be given access with limited permissions so that they can view the status of applications or restart some components if needed.
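As a rough illustration of the GitOps setup, the sketch below generates an ArgoCD `Application` manifest that points at a path in a Git repository and keeps the cluster in sync with it. The repository URL, path, and application name are hypothetical, and the automated sync options shown (`prune`, `selfHeal`) are one possible policy, not necessarily the one used in this project.

```python
import json


def argocd_application(name, repo_url, path, namespace, revision="main"):
    """Build an ArgoCD Application that deploys `path` from a Git repo
    into `namespace` of the cluster ArgoCD itself runs in."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,
                "targetRevision": revision,
                "path": path,
            },
            "destination": {
                "server": "https://kubernetes.default.svc",
                "namespace": namespace,
            },
            # Revert manual changes and delete resources removed from Git.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


# Hypothetical repository and application names.
app = argocd_application(
    "orders", "https://git.example.com/platform/k8s-manifests.git",
    "apps/orders", "orders",
)
print(json.dumps(app, indent=2))
```

With `selfHeal` enabled, a change made by hand in the cluster is reverted to the state stored in Git, which is what makes Git the source of truth in practice. The JSON output can be applied with `kubectl apply -f`, since Kubernetes accepts JSON as well as YAML.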

For monitoring, we provided a Prometheus stack that contains Prometheus, Grafana, and Alertmanager as the main block of the monitoring setup. In addition, we used Loki as the log-gathering system and connected CloudWatch to Grafana as a data source as well. As a result, we gained a single view of all infrastructure and applications, so there is no need to switch between several tools to investigate an issue.
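The "single view" comes from registering all three backends as Grafana data sources. A minimal sketch of a Grafana data source provisioning document is shown below; the in-cluster service URLs and the AWS region are assumptions, and the real setup may have been configured through Helm values instead.

```python
import json

# Assumed in-cluster service addresses and region, for illustration only.
PROMETHEUS_URL = "http://prometheus-server.monitoring.svc:9090"
LOKI_URL = "http://loki.monitoring.svc:3100"
AWS_REGION = "eu-west-1"

datasources = {
    "apiVersion": 1,
    "datasources": [
        {"name": "Prometheus", "type": "prometheus", "access": "proxy",
         "url": PROMETHEUS_URL, "isDefault": True},
        {"name": "Loki", "type": "loki", "access": "proxy", "url": LOKI_URL},
        # authType "default" uses the AWS SDK credential chain,
        # for example an IAM role attached to the Grafana pod.
        {"name": "CloudWatch", "type": "cloudwatch",
         "jsonData": {"authType": "default", "defaultRegion": AWS_REGION}},
    ],
}

print(json.dumps(datasources, indent=2))
```

Grafana reads provisioning files as YAML; JSON output like this is generally also valid YAML, but converting it to plain YAML before use is the safer choice.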

What is more, network traffic is an important part of cost optimization, and its cost can grow large if connections between systems and services are set up incorrectly. For example, suppose you use an external SaaS product, such as a managed message broker (CloudAMQP, for example), and would like to connect it to your services. In that case, you should choose VPC peering or AWS PrivateLink so that the traffic stays on private networking instead of passing through NAT gateways and the public Internet.
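A quick back-of-the-envelope calculation shows why the connection type matters. The per-GB rates and the traffic volume below are illustrative assumptions, not figures from this project; actual AWS prices differ by region and change over time, and the NAT path usually carries additional data transfer charges that are left out here.

```python
def monthly_transfer_cost(gb_per_month, rate_per_gb):
    """Cost of moving `gb_per_month` gigabytes at a flat per-GB rate."""
    return round(gb_per_month * rate_per_gb, 2)


# Assumed example rates in USD per GB; check current AWS pricing.
NAT_PROCESSING_PER_GB = 0.045    # NAT gateway data processing
PEERING_CROSS_AZ_PER_GB = 0.01   # VPC peering across Availability Zones

TRAFFIC_GB = 20_000              # assumed monthly traffic to the SaaS broker

nat_cost = monthly_transfer_cost(TRAFFIC_GB, NAT_PROCESSING_PER_GB)
peering_cost = monthly_transfer_cost(TRAFFIC_GB, PEERING_CROSS_AZ_PER_GB)

print(f"via NAT gateway: ${nat_cost:,.2f} per month")
print(f"via VPC peering: ${peering_cost:,.2f} per month")
print(f"difference:      ${nat_cost - peering_cost:,.2f} per month")
```

Under these assumptions the NAT gateway route costs several times more than peering for the same traffic, and the gap widens linearly as traffic grows.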

Finally, it is time for the production switch, which is considered the most complicated and risky part of a migration, as we must always ensure that the application keeps working. This process involved DevOps engineers, developers, and QA engineers in checking the services. Still, everything should go smoothly if the process has been tested beforehand in pre-production environments.

To summarize, the final setup is built from the following major components:

AWS: the cloud provider that hosts all components.

EKS: a managed service to run Kubernetes applications. Combined with Cluster Autoscaler, it keeps only the resources that are needed at the moment.

RDS: a service to host the databases for our applications.

Route53 and ACM: tools designed to manage domains and certificates.

ExternalDNS and ALB Controller for EKS: automatically provision new DNS records and load balancers as soon as a new service is deployed to the cluster.

ArgoCD: the main tool for the GitOps approach and service management.

HashiCorp Vault: a tool to store all secret information related to applications (database credentials, application tokens, etc.).

Prometheus/Grafana/Alertmanager/Loki: these tools work together to provide monitoring, alerting, and log collection.
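To illustrate how ExternalDNS and the ALB Controller provision records for a new service, here is a sketch of an Ingress manifest that both controllers would react to. The host name, service name, and the choice of an internet-facing load balancer are assumptions made for the example; `alb_ingress` is a hypothetical helper.

```python
import json


def alb_ingress(name, namespace, host, service, port):
    """Build an Ingress handled by the AWS Load Balancer Controller,
    annotated so that ExternalDNS creates a DNS record for `host`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {
                "alb.ingress.kubernetes.io/scheme": "internet-facing",
                "alb.ingress.kubernetes.io/target-type": "ip",
                "external-dns.alpha.kubernetes.io/hostname": host,
            },
        },
        "spec": {
            "ingressClassName": "alb",
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service,
                                            "port": {"number": port}}},
                }]},
            }],
        },
    }


# Hypothetical service and domain.
ingress = alb_ingress("orders", "orders", "orders.example.com", "orders-api", 8080)
print(json.dumps(ingress, indent=2))
```

Once such a manifest is deployed, the ALB Controller creates the load balancer and ExternalDNS adds the matching Route53 record, so no one has to touch DNS by hand when a service is added.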

Kubernetes Diagram

Network Diagram

Business Value

At Matoffo, we always recognize the dual nature of our solutions. On the one hand, our projects are intended to perform their technical functions perfectly. Well, we undoubtedly reached this goal. On the other hand, we also consider the business perspective. From this point of view, we also succeeded. Ultimately, we managed to bring value in the following aspects:

Ability to Scale

This solution allows the customer to scale quickly when new services or development cycles are added. All components are described with the Infrastructure as Code (IaC) approach, with no manual actions.

Time Efficiency

Because we maintain a GitOps approach, developers do not waste time debugging deployment issues or writing CI/CD pipelines. Engineers work only on their applications and receive immediate feedback on deployments.

Cost Efficiency

By implementing cluster autoscaling and a set of lifecycle rules for AWS services, we achieved a cost-effective setup. For non-production environments, we use Spot Instances, which may save up to 90% of compute costs.

Enhanced Security

Since there had previously been gaps in security, we followed the principle of least privilege across all our setups. Thanks to the IaC approach, this requires much less time and makes it possible to audit the entire infrastructure.

Client's Feedback

We were very pleased with the feedback we received from this client. Fully satisfied with the outcome, the client is delighted to have received an on-budget and on-time solution that exceeded their expectations. For our part, we also enjoyed working with such a great company, and we hope to continue the cooperation in the future.
