In today’s cloud computing landscape, optimizing costs is a crucial part of any organization’s operational strategy. On Amazon Web Services (AWS), one of the most widely used database services is Amazon DynamoDB. Its benefits come at a price, however, so it’s essential to understand the various pricing components and the strategies available for cost optimization.

Understanding Amazon DynamoDB Pricing

Amazon DynamoDB offers a flexible and scalable NoSQL database solution that is widely used by organizations of all sizes. With its ability to handle large amounts of data and provide fast and reliable performance, DynamoDB has become a popular choice for applications that require low latency and high throughput.

However, it’s important for users to understand the pricing structure of DynamoDB in order to optimize costs and ensure efficient resource allocation. By analyzing and optimizing each of the key components that contribute to the pricing model, organizations can effectively manage their DynamoDB costs.

Key Components of DynamoDB Pricing

The primary components that contribute to the DynamoDB pricing model include provisioned throughput, data storage, data transfer, and global secondary indexes.

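Provisioned throughput refers to the read capacity units (RCUs) and write capacity units (WCUs) that users allocate to their DynamoDB tables. One RCU supports one strongly consistent read per second (or two eventually consistent reads) of an item up to 4 KB. One WCU supports one write per second of an item up to 1 KB. Larger items consume additional units. Together, these units cap the read and write throughput a table can sustain. By analyzing the workload and adjusting provisioned throughput, users can avoid both over-provisioning, which means paying for idle capacity, and under-provisioning, which leads to throttled requests.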
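The arithmetic behind capacity units can be sketched in Python. This is a rough estimate that assumes steady traffic and items that all share one size. It uses the documented unit sizes: one RCU covers a strongly consistent read of up to 4 KB per second, or two eventually consistent reads, and one WCU covers a write of up to 1 KB per second. The function names are illustrative and are not part of any AWS SDK.

```python
import math

# Documented capacity-unit sizes for DynamoDB provisioned mode.
RCU_BLOCK_KB = 4  # 1 RCU = 1 strongly consistent read/s of an item up to 4 KB
WCU_BLOCK_KB = 1  # 1 WCU = 1 write/s of an item up to 1 KB


def required_rcus(reads_per_second: float, item_size_kb: float,
                  eventually_consistent: bool = False) -> int:
    """Estimate RCUs for a steady read rate of items that all share one size."""
    units_per_read = math.ceil(item_size_kb / RCU_BLOCK_KB)  # round up to 4 KB blocks
    total = reads_per_second * units_per_read
    if eventually_consistent:
        total /= 2  # eventually consistent reads cost half as much
    return math.ceil(total)


def required_wcus(writes_per_second: float, item_size_kb: float) -> int:
    """Estimate WCUs for a steady write rate of items that all share one size."""
    units_per_write = math.ceil(item_size_kb / WCU_BLOCK_KB)  # round up to 1 KB blocks
    return math.ceil(writes_per_second * units_per_write)


# 100 reads/s of 6 KB items: each read rounds up to 8 KB, i.e. 2 RCUs.
print(required_rcus(100, 6))                              # 200
print(required_rcus(100, 6, eventually_consistent=True))  # 100
print(required_wcus(50, 2.5))                             # 150
```

Rounding happens per item, so trimming an item just below a 4 KB or 1 KB boundary can lower the capacity it needs.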

Data storage is another important component of DynamoDB pricing. Users are billed per GB-month for the data stored in their tables, including both item data and any indexes. DynamoDB also counts 100 bytes of overhead per item toward billable storage. It’s essential to regularly monitor and manage stored data, for example by expiring stale items with Time to Live (TTL), to avoid unnecessary costs.
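A rough storage estimate follows. It is a sketch that assumes a known average item size and that every item appears in every index. The 100-byte per-item overhead is the figure DynamoDB documents for storage billing. The function name and the index-entry sizes are hypothetical inputs. To get a monthly figure, multiply the result by the per-GB-month price for your Region and table class.

```python
from typing import Sequence

ITEM_OVERHEAD_BYTES = 100  # per-item overhead DynamoDB adds to billable storage


def billable_storage_gb(item_count: int, avg_item_bytes: float,
                        index_entry_bytes: Sequence[float] = ()) -> float:
    """Estimate billable storage (GB) for a table and its secondary indexes.

    index_entry_bytes holds, for each index, the average size of one index entry
    (key attributes plus projected attributes). Assumes every item appears in
    every index; sparse indexes would hold fewer entries.
    """
    per_item = avg_item_bytes + ITEM_OVERHEAD_BYTES
    for entry_bytes in index_entry_bytes:
        per_item += entry_bytes + ITEM_OVERHEAD_BYTES  # each index entry has overhead too
    return item_count * per_item / 1024 ** 3


# About one million 924-byte items, with and without a GSI whose entries are 412 bytes.
print(billable_storage_gb(1024 ** 2, 924))         # 1.0
print(billable_storage_gb(1024 ** 2, 924, [412]))  # 1.5
```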

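Data transfer costs apply when data leaves DynamoDB for the internet or for another AWS Region, for example through cross-Region replication. Inbound transfer is not charged, and neither is transfer between DynamoDB and other AWS services in the same Region. By understanding and optimizing data transfer patterns, organizations can minimize these costs.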

Global secondary indexes (GSIs) are additional indexes that let users query a table on attributes other than its primary key. However, GSIs come with additional costs. Each index stores its own copy of the projected attributes, and every write to the base table that affects an index also consumes write capacity on that index. Careful consideration should be given to the necessity and usage of GSIs to avoid unnecessary expenses.
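The following simplified model shows how GSIs multiply write costs. It assumes that every listed index is affected by the write. It follows the documented behavior that a write which changes an item's index key value needs two index writes, one delete and one put. It does not model the case of a write that touches no indexed or projected attribute and therefore skips the index. IndexWrite and write_wcus are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class IndexWrite:
    entry_kb: float            # size of the item as stored in the index (projection)
    key_changed: bool = False  # did the write change this index's key value?


def write_wcus(base_item_kb: float, affected_indexes: Sequence[IndexWrite] = ()) -> int:
    """WCUs for one base-table write plus the GSI writes it triggers (simplified)."""
    total = math.ceil(base_item_kb)  # base table: 1 WCU per 1 KB, rounded up
    for index in affected_indexes:
        per_entry = math.ceil(index.entry_kb)
        # Changing an indexed key value deletes the old entry and writes a new one.
        total += per_entry * (2 if index.key_changed else 1)
    return total


# A 2.5 KB item with two small GSIs, one of whose key values changes.
print(write_wcus(2.5))                                            # 3
print(write_wcus(2.5, [IndexWrite(0.5), IndexWrite(0.5, True)]))  # 6
```

Choosing a narrow projection type (KEYS_ONLY or INCLUDE rather than ALL) keeps index entries small. That lowers both the per-write cost modeled here and the index storage.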

Pricing Models for DynamoDB

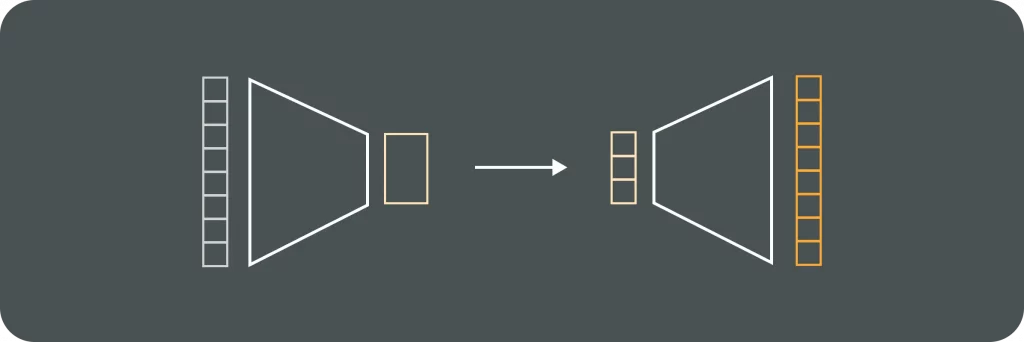

Amazon DynamoDB provides two capacity modes that determine how reads and writes are billed: provisioned capacity and on-demand capacity.

Provisioned capacity requires users to define the required read and write capacity units beforehand. This model is suitable for applications with predictable workloads, where the capacity can be provisioned based on the expected traffic. By carefully analyzing the workload patterns and adjusting the provisioned capacity, organizations can optimize costs and ensure smooth performance.

On-demand capacity, on the other hand, bills per request (read and write request units) instead of billing for reserved capacity. Users do not specify read and write capacity in advance, because DynamoDB accommodates the incoming traffic automatically. This model is ideal for applications with unpredictable or spiky workloads and for tables that sit idle much of the time. However, the per-request price is higher than the equivalent provisioned rate. For workloads with steady, well-utilized traffic, provisioned capacity is therefore usually cheaper.
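One quick way to compare the two modes is to price the same traffic both ways. The sketch below makes several assumptions: every read touches an item of up to 4 KB and every write an item of up to 1 KB (one request unit each), a month has 730 hours, and the provisioned table is sized for peak rather than auto scaled. All prices are inputs you supply. The ones in the usage lines are placeholders, not AWS list prices.

```python
HOURS_PER_MONTH = 730  # average hours in a month


def provisioned_monthly_cost(rcus: int, wcus: int,
                             price_per_rcu_hour: float, price_per_wcu_hour: float) -> float:
    """Fixed capacity billed every hour, whether or not it is consumed."""
    return HOURS_PER_MONTH * (rcus * price_per_rcu_hour + wcus * price_per_wcu_hour)


def on_demand_monthly_cost(avg_reads_per_s: float, avg_writes_per_s: float,
                           price_per_million_reads: float,
                           price_per_million_writes: float) -> float:
    """Per-request billing; assumes each request uses one request unit
    (reads of items up to 4 KB, writes of items up to 1 KB)."""
    seconds = HOURS_PER_MONTH * 3600
    millions_of_reads = avg_reads_per_s * seconds / 1_000_000
    millions_of_writes = avg_writes_per_s * seconds / 1_000_000
    return (millions_of_reads * price_per_million_reads
            + millions_of_writes * price_per_million_writes)


# Placeholder prices for illustration only; look up current prices for your Region.
peak_provisioned = provisioned_monthly_cost(1000, 100, 0.0001, 0.0005)
average_on_demand = on_demand_monthly_cost(200, 20, 0.125, 0.625)
print(f"provisioned for peak: ${peak_provisioned:.2f}, "
      f"on-demand at average: ${average_on_demand:.2f}")
```

The key variable is utilization. Provisioned capacity is paid for around the clock, so the lower the ratio of average to peak traffic, the more attractive on-demand becomes.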

Choosing the most suitable pricing model is crucial for achieving cost optimization in DynamoDB. It requires a thorough understanding of the application’s workload patterns, performance requirements, and budget constraints.

In short, understanding the components and capacity modes of Amazon DynamoDB pricing is essential for optimizing costs and ensuring efficient resource allocation. By analyzing and optimizing each component, organizations can manage their DynamoDB costs while still benefiting from its flexible and scalable NoSQL database solution.

Strategies for DynamoDB Cost Optimization

Cost optimization starts with effective strategies for using DynamoDB. By following the strategies below, organizations can take advantage of DynamoDB’s benefits while keeping expenses in check.

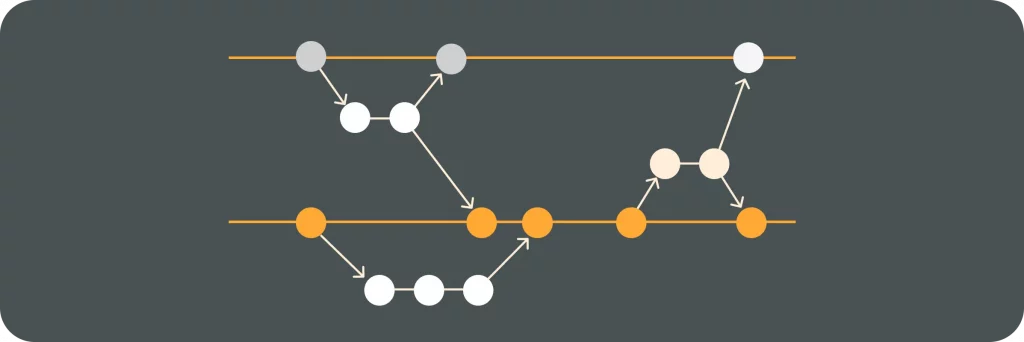

Efficient data modeling plays a critical role in DynamoDB cost optimization. Effective data modeling structures data in a way that reduces storage costs and improves query performance. In DynamoDB, this means making deliberate use of partition keys, secondary indexes, and composite keys.

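DynamoDB distributes data across multiple partitions by hashing the partition key, which enables parallel processing and improves scalability. Organizations can spread the workload evenly by choosing partition keys with many distinct, evenly accessed values. This avoids hot partitions, which are partitions that receive a disproportionate share of traffic. Hot partitions cause throttling and often push teams to over-provision capacity to compensate, which increases costs and decreases performance.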
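Write sharding is one documented DynamoDB pattern for spreading a hot partition key. It appends a suffix to the key so that writes for one logical key land on several physical key values. The sketch below shows both random and hash-calculated suffixes. SHARD_COUNT and the function names are hypothetical, and the right shard count depends on the key's write rate.

```python
import hashlib
import random
from typing import List

SHARD_COUNT = 10  # hypothetical; size it to the write rate of the hot key


def sharded_key_random(base_key: str) -> str:
    """Random suffix: best write spread, but readers must query every shard."""
    return f"{base_key}#{random.randrange(SHARD_COUNT)}"


def sharded_key_calculated(base_key: str, item_id: str) -> str:
    """Deterministic suffix: a reader that knows item_id can target one shard."""
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    shard = int.from_bytes(digest[:4], "big") % SHARD_COUNT
    return f"{base_key}#{shard}"


def all_shard_keys(base_key: str) -> List[str]:
    """Keys a reader must query (and merge) to read the whole logical partition."""
    return [f"{base_key}#{i}" for i in range(SHARD_COUNT)]


key = sharded_key_calculated("2024-06-01", "order-123")
print(key in all_shard_keys("2024-06-01"))  # True
```

The trade-off falls on the read side. With random suffixes, a reader must query every shard and merge the results. A calculated suffix lets a reader that knows the item ID go straight to one shard.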

Secondary indexes are another powerful feature of DynamoDB that can enhance query performance. A Scan reads every item in the table and consumes read capacity for all of them, even when a filter expression discards most of the results. By creating secondary indexes on frequently queried attributes, organizations can replace such expensive full table scans with targeted Query operations.
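The gap between a Scan and a Query can be estimated from how reads are metered. This sketch assumes that Query and Scan are charged on the total bytes read, rounded up to 4 KB, and that eventually consistent reads cost half as much. It ignores per-page rounding for results over 1 MB. The table size and item size are hypothetical.

```python
import math

BLOCK_BYTES = 4096  # reads are metered in 4 KB blocks


def read_rcus(bytes_read: int, eventually_consistent: bool = True) -> float:
    """Approximate RCUs for a Query or Scan: total bytes read, rounded up to 4 KB.
    (Ignores per-page rounding on result sets larger than 1 MB.)"""
    blocks = math.ceil(bytes_read / BLOCK_BYTES)
    return blocks / 2 if eventually_consistent else float(blocks)


ITEM_BYTES = 1024        # hypothetical 1 KB items
TABLE_ITEMS = 1_000_000  # hypothetical table size
MATCHES = 50             # items the application actually needs

# A Scan is charged for every item it reads, even if a FilterExpression drops them.
print(read_rcus(TABLE_ITEMS * ITEM_BYTES))  # 125000.0
# A Query against a suitable index reads only the matching items.
print(read_rcus(MATCHES * ITEM_BYTES))      # 6.5
```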

Composite primary keys, which pair a partition key with a sort key, allow efficient querying on more than one attribute. Organizations can concatenate several attributes into the sort key value (for example, COUNTRY#CITY#DATE). This supports targeted range and prefix queries without additional indexes, which saves both index storage and write capacity.
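The following sketch shows the composite sort key pattern locally, without an AWS connection. make_sort_key and query_begins_with are hypothetical helpers. The second one only mimics what a Query with a key condition of the form pk = :pk AND begins_with(sk, :prefix) would return. With boto3, the same condition can be written as Key("pk").eq(...) & Key("sk").begins_with(...) using boto3.dynamodb.conditions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Item:
    pk: str  # partition key, e.g. a customer ID
    sk: str  # sort key assembled from several attributes


def make_sort_key(country: str, city: str, order_date: str) -> str:
    """Most general attribute first, so prefixes select progressively narrower sets."""
    return f"{country}#{city}#{order_date}"


def query_begins_with(items: List[Item], pk: str, prefix: str) -> List[Item]:
    """Local stand-in for a Query with pk = :pk AND begins_with(sk, :prefix).
    DynamoDB returns matches in ascending sort-key order, mimicked with sorted()."""
    matches = [item for item in items if item.pk == pk and item.sk.startswith(prefix)]
    return sorted(matches, key=lambda item: item.sk)


orders = [
    Item("cust-1", make_sort_key("US", "Seattle", "2024-05-01")),
    Item("cust-1", make_sort_key("US", "Boston", "2024-04-01")),
    Item("cust-1", make_sort_key("DE", "Berlin", "2024-03-01")),
    Item("cust-2", make_sort_key("US", "Seattle", "2024-01-01")),
]
print([o.sk for o in query_begins_with(orders, "cust-1", "US#")])
# ['US#Boston#2024-04-01', 'US#Seattle#2024-05-01']
```

Prefix queries work because the attributes are ordered from most to least general. ISO-formatted dates in the last position also sort correctly, so a between condition on the sort key can select a date range within one city.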

In addition to efficient data modeling, effective capacity planning and management are crucial for cost optimization. Organizations need to continually monitor their workload and adjust provisioned throughput as necessary to ensure optimal performance while avoiding unnecessary costs.

One way to automate capacity planning and management is DynamoDB Auto Scaling, which is built on the Application Auto Scaling service. Organizations define a target utilization, such as 70 percent of provisioned capacity, along with minimum and maximum capacity. A target-tracking policy then adjusts provisioned throughput as consumed capacity rises and falls. This lets the table handle fluctuations in workload without permanent overprovisioning, resulting in cost savings.
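The function below sketches what such a policy contains. It builds the request parameters for the Application Auto Scaling RegisterScalableTarget and PutScalingPolicy operations for a table's read capacity. The table name, capacity bounds, 70 percent target, and policy name are hypothetical. For write capacity, use the dynamodb:table:WriteCapacityUnits dimension and the DynamoDBWriteCapacityUtilization metric instead. The AWS calls themselves are left as comments so the code runs without credentials.

```python
from typing import Dict, Tuple


def read_autoscaling_requests(table_name: str, min_rcu: int, max_rcu: int,
                              target_utilization_pct: float = 70.0) -> Tuple[Dict, Dict]:
    """Build kwargs for Application Auto Scaling's RegisterScalableTarget and
    PutScalingPolicy calls to target-track a table's read capacity."""
    if not 0 < min_rcu <= max_rcu:
        raise ValueError("need 0 < min_rcu <= max_rcu")
    common = {
        "ServiceNamespace": "dynamodb",
        "ResourceId": f"table/{table_name}",
        "ScalableDimension": "dynamodb:table:ReadCapacityUnits",
    }
    target = {**common, "MinCapacity": min_rcu, "MaxCapacity": max_rcu}
    policy = {
        **common,
        "PolicyName": f"{table_name}-read-utilization",  # hypothetical naming scheme
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Keep consumed/provisioned read capacity near this percentage.
            "TargetValue": target_utilization_pct,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "DynamoDBReadCapacityUtilization",
            },
        },
    }
    return target, policy


target, policy = read_autoscaling_requests("Orders", min_rcu=5, max_rcu=500)
# With AWS credentials configured, these would be sent as:
#   client = boto3.client("application-autoscaling")
#   client.register_scalable_target(**target)
#   client.put_scaling_policy(**policy)
```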

DynamoDB’s provisioned throughput feature is another valuable tool for managing capacity. By specifying the desired read and write capacity units, organizations can allocate resources according to their workload requirements. Provisioned capacity is billed by the hour whether or not it is consumed, so sizing it close to actual demand is what keeps costs under control.

Taken together, efficient data modeling and effective capacity planning and management help organizations optimize their DynamoDB costs. These strategies reduce storage and throughput costs and also improve query performance, resulting in a more cost-effective and efficient DynamoDB implementation.

Leveraging DynamoDB Features for Cost Savings

Amazon DynamoDB offers several features that enable organizations to reduce costs while maintaining performance. Leveraging these features appropriately can lead to significant savings.

One of the key cost-saving features of DynamoDB is on-demand capacity mode. It scales automatically and eliminates the need for manual capacity planning, because DynamoDB accommodates the workload as it arrives and bills only for the requests actually served. For spiky, unpredictable, or low-volume workloads, this avoids paying for over-provisioned capacity. For steady, high-volume traffic, provisioned capacity is usually the cheaper option.

Another feature that organizations can leverage for cost savings is Auto Scaling. With Auto Scaling, DynamoDB automatically adjusts provisioned throughput in response to consumed capacity, so organizations no longer have to change capacity by hand as workloads fluctuate. Scaling up and down this way keeps performance steady while minimizing costs. Because Auto Scaling reacts after traffic changes, it works best for gradual or seasonal variations. Sudden, sharp spikes may be throttled before capacity catches up, and those workloads are a better fit for on-demand mode.
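A toy simulation shows why auto scaling is reactive. This is not the algorithm AWS uses. It assumes that after each period, capacity is reset to the level that would have put that period's consumption at the target utilization, and it ignores alarm evaluation delays and limits on how often capacity can be decreased. The function name and the demand series are hypothetical.

```python
import math
from typing import List, Sequence


def simulate_target_tracking(consumed: Sequence[float], start_capacity: int,
                             target_pct: float, min_capacity: int,
                             max_capacity: int) -> List[int]:
    """Toy model of target tracking: after each period, capacity is reset so the
    period's consumption would have sat at target_pct utilization. Returns the
    capacity in effect during each period."""
    capacity = start_capacity
    history = []
    for used in consumed:
        history.append(capacity)
        desired = math.ceil(used * 100 / target_pct)
        capacity = max(min_capacity, min(max_capacity, desired))
    return history


demand = [70, 140, 140, 35]  # hypothetical consumed units per period
caps = simulate_target_tracking(demand, start_capacity=100, target_pct=70,
                                min_capacity=10, max_capacity=1000)
print(caps)  # [100, 100, 200, 200]
throttled = [i for i, (cap, used) in enumerate(zip(caps, demand)) if used > cap]
print(throttled)  # [1]  (the spike arrives before capacity is raised)
```

The throttled period shows why sharp, sudden spikes are better handled by on-demand mode or by a higher minimum capacity.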

Auto Scaling builds on provisioned throughput, which organizations can also manage directly by specifying the read and write capacity units for their tables. This gives fine-grained control over resource allocation, so the amount of capacity provisioned can match the workload requirements. By provisioning throughput accurately, organizations avoid paying for capacity they do not use.

Furthermore, DynamoDB publishes detailed metrics to Amazon CloudWatch, such as ConsumedReadCapacityUnits, ConsumedWriteCapacityUnits, ProvisionedReadCapacityUnits, ProvisionedWriteCapacityUnits, and ThrottledRequests. Comparing consumed with provisioned capacity reveals over-provisioned tables, and throttling metrics reveal under-provisioned ones. These insights into usage patterns support informed decisions on capacity planning and optimization.
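Turning CloudWatch datapoints into a utilization figure takes one step. The consumed-capacity metrics are retrieved with the Sum statistic, and dividing each Sum by the period length gives average units per second. The sketch below assumes the datapoints have already been fetched, for example with CloudWatch GetMetricStatistics on the AWS/DynamoDB namespace with a TableName dimension. The function name and sample values are hypothetical.

```python
from typing import Sequence, Tuple


def utilization(consumed_sums: Sequence[float], period_seconds: int,
                provisioned_units: float) -> Tuple[float, float]:
    """Return (average, peak) utilization as fractions of provisioned capacity.

    consumed_sums are ConsumedReadCapacityUnits (or ConsumedWriteCapacityUnits)
    datapoints retrieved with the Sum statistic; Sum / period gives the average
    units consumed per second in that period.
    """
    if not consumed_sums:
        raise ValueError("no datapoints")
    per_second = [s / period_seconds for s in consumed_sums]
    average = sum(per_second) / len(per_second)
    return average / provisioned_units, max(per_second) / provisioned_units


# Three one-minute datapoints for a table provisioned at 200 RCUs.
avg, peak = utilization([3000, 6000, 1800], period_seconds=60, provisioned_units=200)
print(avg, peak)  # 0.3 0.5
```

A table whose peak utilization stays well below its provisioned level is a candidate for lower capacity or for on-demand mode.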

Overall, organizations can reduce costs while maintaining performance by combining on-demand capacity mode, Auto Scaling, and accurately sized provisioned throughput with the monitoring and metrics DynamoDB provides. On-demand mode and Auto Scaling remove much of the manual capacity planning. The metrics show whether the capacity being paid for matches the capacity actually used.

Monitoring and Controlling DynamoDB Costs

Effectively monitoring and controlling costs is a crucial aspect of optimizing DynamoDB expenses. AWS provides tools and features that enable organizations to stay on top of their costs.

Using AWS Cost Explorer

AWS Cost Explorer shows DynamoDB costs over time. It lets organizations filter by service and group by usage type, for example separating provisioned capacity hours from storage. This makes it easier to analyze spending patterns, identify areas for improvement, and make informed decisions to optimize DynamoDB costs.
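Cost Explorer data is also available through the GetCostAndUsage API. The sketch below builds a request scoped to DynamoDB and grouped by usage type, then totals a response. It assumes the service dimension value "Amazon DynamoDB" and a single page of results, since larger responses include a NextPageToken. Usage type names vary by Region, so the ones in any output are illustrative. The boto3 call appears only in comments so the code runs without credentials.

```python
from collections import defaultdict
from typing import Dict


def dynamodb_cost_request(start: str, end: str) -> Dict:
    """kwargs for Cost Explorer GetCostAndUsage, limited to DynamoDB and broken down
    by usage type. Dates are YYYY-MM-DD; the End date is exclusive."""
    return {
        "TimePeriod": {"Start": start, "End": end},
        "Granularity": "DAILY",
        "Metrics": ["UnblendedCost"],
        "Filter": {"Dimensions": {"Key": "SERVICE", "Values": ["Amazon DynamoDB"]}},
        "GroupBy": [{"Type": "DIMENSION", "Key": "USAGE_TYPE"}],
    }


def cost_by_usage_type(response: Dict) -> Dict[str, float]:
    """Total UnblendedCost per usage type, largest first (single page only)."""
    totals: Dict[str, float] = defaultdict(float)
    for period in response["ResultsByTime"]:
        for group in period.get("Groups", []):
            amount = float(group["Metrics"]["UnblendedCost"]["Amount"])  # returned as a string
            totals[group["Keys"][0]] += amount
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


# Usage (requires AWS credentials):
#   import boto3
#   ce = boto3.client("ce")
#   response = ce.get_cost_and_usage(**dynamodb_cost_request("2024-06-01", "2024-07-01"))
#   for usage_type, cost in cost_by_usage_type(response).items():
#       print(f"{usage_type}: ${cost:.2f}")
```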

Setting Up Cost Alerts

With AWS Budgets, users can set up cost alerts that notify them when actual or forecasted spending crosses a defined threshold. Alerts give organizations time to act before excessive spending accumulates, for example by right-sizing capacity or switching capacity modes.
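AWS Budgets can alert on both actual and forecasted spend. The sketch below mimics that idea for a DynamoDB budget to make the threshold logic easy to see. The straight-line forecast, the default thresholds, and the function name are assumptions. AWS Budgets uses its own forecasting model.

```python
from typing import List, Sequence, Tuple


def crossed_thresholds(month_to_date: float, day_of_month: int, days_in_month: int,
                       budget: float,
                       thresholds_pct: Sequence[float] = (50, 80, 100)
                       ) -> Tuple[List[float], List[float]]:
    """Return (actual, forecast) threshold percentages that spending has crossed.

    The forecast is a naive straight-line projection of month-to-date spend,
    not the forecasting model AWS Budgets uses.
    """
    if not 1 <= day_of_month <= days_in_month:
        raise ValueError("day_of_month must be within the month")
    forecast = month_to_date / day_of_month * days_in_month
    actual = [t for t in thresholds_pct if month_to_date >= budget * t / 100]
    projected = [t for t in thresholds_pct if forecast >= budget * t / 100]
    return actual, projected


# Hypothetical: $45 spent by day 10 of a 30-day month against a $100 DynamoDB budget.
print(crossed_thresholds(45.0, 10, 30, 100.0))  # ([], [50, 80, 100])
```

A forecast alert fires here even though no actual threshold has been reached. That early warning is what gives a team time to adjust capacity before the bill arrives.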

Optimizing DynamoDB Performance to Reduce Costs

Improving performance can have a direct impact on cost. DynamoDB bills for the capacity that reads and writes consume. Changes that reduce the amount of data each operation reads or writes therefore lower costs while keeping database operations efficient.

Improving Read/Write Efficiency

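Optimizing read and write operations improves performance and can reduce costs. Capacity is consumed per item and per kilobyte read or written, so the biggest savings come from reading and writing less data. Practical steps include using Query instead of Scan, using eventually consistent reads (half the cost of strongly consistent reads) where the application tolerates them, keeping items small, and caching frequently read data. Batch operations and parallel scans mainly improve throughput and latency. BatchGetItem and BatchWriteItem reduce network round trips but consume the same capacity as the equivalent individual requests. A parallel scan consumes capacity faster, not less.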
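The effect of item size and read type on cost can be sketched as follows. The sketch assumes the documented metering rules. Items read individually are each rounded up to 4 KB, while items returned by a single Query are summed before rounding. Eventually consistent reads cost half of a strongly consistent read, and transactional reads cost twice as much. The function names and item sizes are hypothetical.

```python
import math
from typing import Sequence

# RCUs per 4 KB block for each read type.
RCU_PER_BLOCK = {"eventual": 0.5, "strong": 1.0, "transactional": 2.0}


def per_item_rcus(item_sizes_kb: Sequence[float], read_type: str = "eventual") -> float:
    """Items read individually (GetItem, BatchGetItem, TransactGetItems):
    each item is rounded up to 4 KB on its own."""
    return sum(math.ceil(size / 4) for size in item_sizes_kb) * RCU_PER_BLOCK[read_type]


def query_rcus(item_sizes_kb: Sequence[float], read_type: str = "eventual") -> float:
    """Items returned together by one Query: sizes are summed, then rounded up.
    (Query supports only the "eventual" and "strong" read types.)"""
    return math.ceil(sum(item_sizes_kb) / 4) * RCU_PER_BLOCK[read_type]


sizes = [1, 1, 1, 5]  # hypothetical item sizes in KB
print(per_item_rcus(sizes))            # 2.5  (batching does not change this)
print(per_item_rcus(sizes, "strong"))  # 5.0
print(query_rcus(sizes))               # 1.0  (same items, one Query)
```

The Query figure applies only when the items share a partition key. Batching, by contrast, changes the number of round trips but not the capacity consumed.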

Utilizing DynamoDB Accelerator (DAX)

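Amazon DynamoDB Accelerator (DAX) is an in-memory cache for DynamoDB that can significantly improve read performance. Eventually consistent reads served from the cache do not consume read capacity on the table. For read-heavy workloads with repeated access patterns, this reduces both latency and read costs. DAX clusters are billed per node-hour, however, so the savings must outweigh the cost of the cluster.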
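A rough estimate of whether DAX pays for itself is sketched below. It assumes a provisioned table whose read capacity can actually be lowered in proportion to the cache hit ratio. It also assumes eventually consistent reads, because strongly consistent reads pass through DAX to DynamoDB. You supply the prices. The function name and all inputs are hypothetical.

```python
def dax_net_monthly_saving(reads_per_second: float, hit_ratio: float,
                           rcus_per_read: float, price_per_rcu_hour: float,
                           node_count: int, price_per_node_hour: float,
                           hours: float = 730) -> float:
    """Simplified estimate: provisioned RCUs freed by cache hits, minus DAX node cost.
    A negative result means the cluster costs more than it saves."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    rcus_freed = reads_per_second * hit_ratio * rcus_per_read  # capacity no longer needed
    capacity_saving = rcus_freed * price_per_rcu_hour * hours
    cluster_cost = node_count * price_per_node_hour * hours
    return capacity_saving - cluster_cost


# Placeholder prices: a modest read rate does not cover a three-node cluster.
print(dax_net_monthly_saving(1000, 0.9, 0.5, 0.0001, 3, 0.1) < 0)  # True
```

In practice, the table still needs enough capacity for cache misses and for a cold cache after a node restart. Treat the result as an upper bound on savings.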

By following these strategies and leveraging DynamoDB’s features and pricing models, organizations can effectively optimize costs without compromising on the performance and scalability offered by Amazon DynamoDB. Understanding and actively managing DynamoDB costs allows organizations to maximize the value of this powerful NoSQL database service while keeping expenses in check.