Cost Optimization for AWS Batch

Cost optimization is a priority for most businesses, and for teams that run workloads on Amazon Web Services (AWS) Batch it directly determines what batch computing costs each month. By understanding how AWS Batch provisions compute resources and applying a few cost optimization techniques, organizations can significantly reduce their expenses without compromising on performance or reliability.

Understanding AWS Batch

Before delving into cost optimization, it is crucial to grasp the fundamental aspects of AWS Batch. AWS Batch is a fully managed service that enables developers, scientists, and engineers to efficiently process large amounts of data and run batch computing workloads. By dynamically provisioning the required resources and scheduling jobs, AWS Batch simplifies the management of batch computing so that users can focus on their core tasks rather than infrastructure management.

AWS Batch provides a scalable and flexible solution for handling batch computing workloads. It allows users to process large volumes of data, perform complex computations, and run parallel tasks without the need for manual resource management. With AWS Batch, users can easily scale their batch jobs to meet the demands of their workload, ensuring that processing is completed in a timely manner.

Key Features of AWS Batch

AWS Batch offers a range of features that make it a powerful tool for batch computing. These include job scheduling, automatic scaling, data transfer, and application lifecycle management.

The job scheduling feature allows users to define dependencies and priorities for their batch jobs, ensuring efficient execution. Users can specify the order in which jobs should be executed and define dependencies between jobs, enabling them to build complex workflows and ensure that tasks are completed in the correct sequence.
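
As a minimal sketch of how such dependencies can be expressed, the snippet below builds request payloads shaped like the parameters of the AWS Batch SubmitJob API (`jobName`, `jobQueue`, `jobDefinition`, `dependsOn`). The queue name, job definition name, and job ID are hypothetical placeholders, and nothing is sent to AWS.

```python
from typing import Any, Dict, List, Optional


def build_submit_job_request(
    job_name: str,
    job_queue: str,
    job_definition: str,
    depends_on_job_ids: Optional[List[str]] = None,
) -> Dict[str, Any]:
    """Build keyword arguments shaped like an AWS Batch SubmitJob request."""
    request: Dict[str, Any] = {
        "jobName": job_name,
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
    }
    if depends_on_job_ids:
        # Each entry names a job that must finish successfully before this one starts.
        request["dependsOn"] = [{"jobId": job_id} for job_id in depends_on_job_ids]
    return request


# Hypothetical two-stage workflow: "transform" waits for "extract".
extract = build_submit_job_request("extract", "example-queue", "example-etl:1")
# In a real run, the job ID would come from the SubmitJob response for "extract".
transform = build_submit_job_request(
    "transform",
    "example-queue",
    "example-etl:1",
    depends_on_job_ids=["extract-job-id"],
)
```

With boto3 installed and credentials configured, a payload like this could be passed as `boto3.client("batch").submit_job(**transform)`.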

Automatic scaling provisions the necessary compute resources based on workload demands, enabling users to process large volumes of data quickly. In a managed compute environment, AWS Batch scales compute resources up or down based on the number and size of the queued jobs, within the minimum and maximum vCPU limits configured for that environment, which keeps utilization high and limits idle capacity.
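
The scaling bounds are part of the compute environment definition. The sketch below builds a `computeResources` structure of the kind accepted by the CreateComputeEnvironment API, with `minvCpus` defaulting to 0 so that the environment can scale to zero when no jobs are queued. The subnet, security group, and role values are hypothetical placeholders.

```python
from typing import Any, Dict, List


def build_compute_resources(
    max_vcpus: int,
    instance_types: List[str],
    subnets: List[str],
    security_group_ids: List[str],
    instance_role: str,
    min_vcpus: int = 0,
) -> Dict[str, Any]:
    """Build a computeResources dict for a managed EC2 compute environment."""
    if min_vcpus < 0 or max_vcpus < min_vcpus:
        raise ValueError("require 0 <= min_vcpus <= max_vcpus")
    return {
        "type": "EC2",
        # A floor of 0 lets the environment release every instance when idle.
        "minvCpus": min_vcpus,
        # The ceiling caps how far the environment can scale, and therefore spend.
        "maxvCpus": max_vcpus,
        "instanceTypes": instance_types,
        "subnets": subnets,
        "securityGroupIds": security_group_ids,
        "instanceRole": instance_role,
    }


# Hypothetical identifiers, for illustration only.
resources = build_compute_resources(
    max_vcpus=64,
    instance_types=["optimal"],
    subnets=["subnet-EXAMPLE"],
    security_group_ids=["sg-EXAMPLE"],
    instance_role="ecsInstanceRole",
)
```

A dict like this would be supplied as the `computeResources` argument of `create_compute_environment`, together with a `computeEnvironmentName` and `type="MANAGED"`.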

Data transfer into and out of batch jobs relies on the storage services that the jobs are given access to. Jobs typically read their input from, and write their results to, services such as Amazon S3 or Amazon EFS, using the permissions granted to the job. Keeping datasets in these services makes them readily available for processing and analysis, without the need for manual data transfer processes.

Application lifecycle management simplifies the deployment and management of applications. With AWS Batch, users can easily package their applications and dependencies into containers, which can be executed as batch jobs. This simplifies the deployment process and ensures that applications run consistently across different environments.

How AWS Batch Works

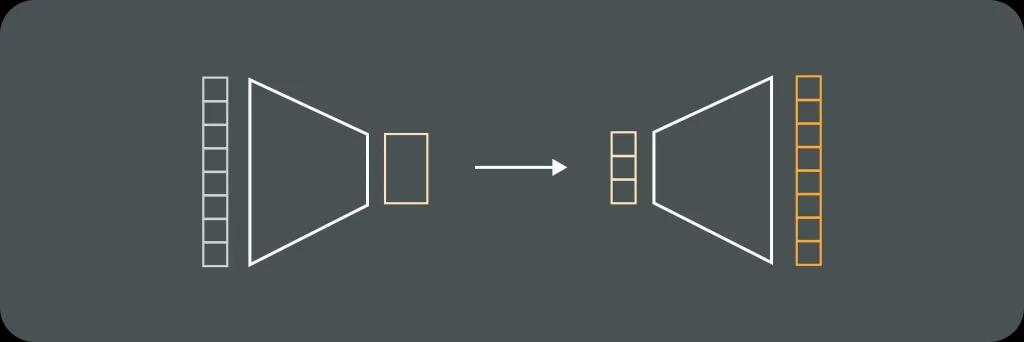

To understand how AWS Batch works, it is essential to grasp the concept of a batch job. A batch job is a single unit of work, such as a data processing or computational task, that AWS Batch runs as a containerized application. Work that can be split into independent pieces can be submitted as an array job, which AWS Batch divides into child jobs and distributes across a cluster of compute resources.

When a user submits a batch job to AWS Batch, the service automatically provisions the required compute resources based on the job’s resource requirements. These resources can be Amazon Elastic Compute Cloud (EC2) instances or AWS Fargate containers. AWS Batch ensures that the compute resources are properly allocated and optimized for the job’s execution.

Once the compute resources are provisioned, AWS Batch places jobs on them. For an array job, each child job is executed independently and, capacity permitting, in parallel, allowing for efficient processing of the overall workload. AWS Batch tracks the status of each job and can retry failed jobs according to the retry strategy configured for them.

By distributing the tasks across multiple compute resources, AWS Batch enables users to take advantage of parallel processing, which can significantly reduce the time required to complete batch jobs. This parallel processing capability allows users to process large volumes of data quickly and efficiently.
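
One common way to use this parallelism is an array job, where each child job reads its position from the `AWS_BATCH_JOB_ARRAY_INDEX` environment variable and processes only its share of the input. The sketch below shows one possible strided split; the object keys and the array size of 3 are hypothetical.

```python
import os
from typing import List, Sequence


def shard_for_index(items: Sequence[str], index: int, array_size: int) -> List[str]:
    """Return the items assigned to one child job, using a strided split."""
    if array_size <= 0 or not 0 <= index < array_size:
        raise ValueError("index must satisfy 0 <= index < array_size")
    return list(items[index::array_size])


def current_array_index(default: int = 0) -> int:
    """Read the child index that AWS Batch sets for each job in an array job."""
    return int(os.environ.get("AWS_BATCH_JOB_ARRAY_INDEX", default))


# Hypothetical input: ten object keys split across an array job of size 3.
keys = [f"input/part-{n:02d}.csv" for n in range(10)]
shards = [shard_for_index(keys, i, 3) for i in range(3)]
```

Inside a container, the worker would call `shard_for_index(keys, current_array_index(), array_size)`, with the array size matching the `arrayProperties` size given when the job was submitted.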

After the batch job is completed and no further jobs are waiting, AWS Batch scales the managed compute resources back down, so that users pay only for the resources they actually used. This dynamic allocation and release of compute resources helps optimize costs and improve resource utilization.

In summary, AWS Batch is a powerful tool for efficiently processing large amounts of data and running batch computing workloads. With its job scheduling, automatic scaling, data transfer, and application lifecycle management features, AWS Batch simplifies the management of batch computing and allows users to focus on their core tasks. By breaking down batch jobs into individual tasks and distributing them across compute resources, AWS Batch enables efficient and cost-effective execution of batch jobs.

Importance of Cost Optimization

While AWS Batch offers powerful capabilities for batch computing, it is crucial to optimize costs to avoid overspending and maximize return on investment. By implementing cost optimization strategies, businesses can use compute resources more efficiently and cut unnecessary expenses, freeing funds for other critical areas of their operations.

Benefits of Cost Optimization in AWS Batch

Cost optimization in AWS Batch provides several benefits for organizations. By fine-tuning resource allocation and utilization, businesses can significantly reduce their AWS bill without compromising performance. This results in substantial cost savings, enabling businesses to invest in further innovation and growth. Additionally, cost optimization allows organizations to scale their compute resources based on actual workload demands, ensuring optimal utilization and avoiding unnecessary expenses during periods of low activity.

Potential Risks of Not Optimizing Costs

Failing to optimize costs in AWS Batch can have significant repercussions for businesses. Overspending on compute resources can drain budgets and limit investments in other critical areas. Moreover, inefficient resource allocation can lead to underutilization or overutilization, impacting performance and causing potential delays or bottlenecks in job execution. To mitigate these risks, it is essential to adopt cost optimization strategies from the outset.

Strategies for AWS Batch Cost Optimization

To achieve cost optimization in AWS Batch, organizations can adopt several strategies aimed at maximizing efficiency and minimizing expenses. These strategies encompass choosing the right pricing model, managing resources efficiently, and utilizing Spot Instances.

Choosing the Right Pricing Model

Selecting the appropriate pricing model is a crucial aspect of cost optimization. AWS Batch itself carries no additional charge; you pay for the underlying compute resources, and for EC2-based compute environments the two primary purchasing options are On-Demand and Spot Instances. On-Demand Instances provide flexibility and reliability but come at a higher cost per hour of usage. Spot Instances, on the other hand, offer significant cost savings but can be interrupted when Amazon EC2 needs the capacity back (or, if a maximum price has been set, when the Spot price rises above it). By analyzing their workload requirements and cost considerations, organizations can choose the most cost-effective pricing model for their AWS Batch jobs.
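
The trade-off can be made concrete with a little arithmetic. The sketch below compares the cost of one job on each model, inflating the Spot runtime by the fraction of work assumed to be repeated after interruptions. The hourly prices and the 25% rework figure are hypothetical numbers chosen for illustration, not AWS pricing.

```python
def effective_job_cost(
    hourly_price: float, runtime_hours: float, rework_fraction: float = 0.0
) -> float:
    """Cost of one job, with runtime inflated by work repeated after interruptions."""
    if hourly_price < 0 or runtime_hours < 0 or rework_fraction < 0:
        raise ValueError("inputs must be non-negative")
    return hourly_price * runtime_hours * (1.0 + rework_fraction)


def break_even_rework_fraction(on_demand_price: float, spot_price: float) -> float:
    """Rework fraction at which Spot stops being cheaper than On-Demand."""
    if spot_price <= 0:
        raise ValueError("spot_price must be positive")
    return on_demand_price / spot_price - 1.0


# Hypothetical prices in USD per instance-hour.
ON_DEMAND_PRICE = 0.40
SPOT_PRICE = 0.12

on_demand_cost = effective_job_cost(ON_DEMAND_PRICE, runtime_hours=10)
spot_cost = effective_job_cost(SPOT_PRICE, runtime_hours=10, rework_fraction=0.25)
savings = 1.0 - spot_cost / on_demand_cost
break_even = break_even_rework_fraction(ON_DEMAND_PRICE, SPOT_PRICE)
```

Under these assumed numbers Spot remains cheaper even with a quarter of the work repeated; the break-even value shows how much rework a job could absorb before On-Demand becomes the cheaper choice.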

Efficient Resource Management

Efficient resource management is key to optimizing costs in AWS Batch. By analyzing compute resource utilization, organizations can identify idle or underutilized instances and take appropriate actions to modify or shut them down. Managed compute environments scale between their configured minimum and maximum vCPUs on their own; for supporting resources outside AWS Batch, automation tools such as AWS Auto Scaling and AWS Lambda can help in dynamically provisioning and deprovisioning resources based on workload demands. Additionally, by applying tags to their resources and activating them as cost allocation tags, businesses can track and manage the costs associated with different projects or departments, and AWS Resource Groups can be used to organize the tagged resources.
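
One simple check for idle capacity is to look for compute environments whose minimum vCPU setting is above zero, since that floor keeps instances running even when the job queues are empty. The sketch below scans data shaped like the `computeEnvironments` list returned by the DescribeComputeEnvironments API; the environment names and values are hypothetical sample data.

```python
from typing import Any, Dict, List, Tuple


def find_always_on_environments(
    compute_environments: List[Dict[str, Any]],
) -> List[Tuple[str, int]]:
    """Return (name, minvCpus) for enabled environments with a non-zero floor."""
    flagged = []
    for env in compute_environments:
        resources = env.get("computeResources", {})
        min_vcpus = resources.get("minvCpus", 0)
        if env.get("state") == "ENABLED" and min_vcpus > 0:
            flagged.append((env["computeEnvironmentName"], min_vcpus))
    return flagged


# Hypothetical sample, shaped like a DescribeComputeEnvironments response.
sample_environments = [
    {
        "computeEnvironmentName": "nightly-etl",
        "state": "ENABLED",
        "computeResources": {"minvCpus": 16, "maxvCpus": 128},
    },
    {
        "computeEnvironmentName": "adhoc-analysis",
        "state": "ENABLED",
        "computeResources": {"minvCpus": 0, "maxvCpus": 64},
    },
    {
        "computeEnvironmentName": "retired-pipeline",
        "state": "DISABLED",
        "computeResources": {"minvCpus": 8, "maxvCpus": 32},
    },
]

flagged = find_always_on_environments(sample_environments)
```

A non-zero floor is sometimes intentional, for example to avoid start-up latency, so a result from this check is a prompt for review rather than an error.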

Utilizing Spot Instances

Spot Instances can provide substantial cost savings for organizations using AWS Batch. They run on spare Amazon EC2 capacity at significantly discounted prices, and under the current Spot pricing model no bidding is required. However, it is essential to have a robust fault-tolerant architecture in place, as Spot Instances can be interrupted with a two-minute warning when Amazon EC2 reclaims the capacity. Organizations can implement strategies such as spreading workloads across multiple instance types and Availability Zones, choosing a capacity-aware Spot allocation strategy for the compute environment, and configuring job retries to maximize cost savings while maintaining high availability and reliability.
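
As a sketch of what fault tolerance can look like in practice, the snippet below builds a job retry strategy that retries only when the job failed because its host instance went away, and exits on any other failure. It assumes the `retryStrategy` structure of an AWS Batch job definition and the `"Host EC2*"` status-reason pattern; the attempt count of 3 and the settings dict for the compute environment are illustrative choices.

```python
from typing import Any, Dict


def build_spot_retry_strategy(attempts: int = 3) -> Dict[str, Any]:
    """Build a retryStrategy that retries host-level failures such as Spot reclaims."""
    if not 1 <= attempts <= 10:
        raise ValueError("attempts must be between 1 and 10")
    return {
        "attempts": attempts,
        "evaluateOnExit": [
            # Retry when the status reason indicates the EC2 host was terminated.
            {"onStatusReason": "Host EC2*", "action": "RETRY"},
            # Any other failure is treated as an application error: do not retry.
            {"onReason": "*", "action": "EXIT"},
        ],
    }


# Settings that would be merged into computeResources for a Spot environment.
SPOT_COMPUTE_SETTINGS = {
    "type": "SPOT",
    "allocationStrategy": "SPOT_CAPACITY_OPTIMIZED",
}

retry_strategy = build_spot_retry_strategy()
```

Retrying only on host-level failures avoids paying for repeated runs of a job that fails because of a bug in the application itself.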

Monitoring and Controlling AWS Batch Costs

Monitoring and controlling AWS Batch costs play a crucial role in optimizing expenses. AWS provides several tools and services that enable organizations to gain visibility into their spending and take proactive measures to control costs.

AWS Cost Explorer

AWS Cost Explorer is a comprehensive tool that provides in-depth insights into AWS usage and costs. It allows users to visualize and analyze their AWS spending patterns, identify cost drivers, and forecast future costs based on historical data. By leveraging AWS Cost Explorer, organizations can track their AWS Batch costs, compare them with budgets, and make informed decisions to optimize expenses.
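
The same data is available programmatically. The sketch below builds a query shaped like the parameters of the Cost Explorer GetCostAndUsage API, grouped by a cost allocation tag, and sums the amounts in a response. The tag key `project` and the sample response are hypothetical; the tag would need to be activated as a cost allocation tag before it appears in real results.

```python
from datetime import date
from typing import Any, Dict


def build_cost_query(start: date, end: date, tag_key: str) -> Dict[str, Any]:
    """Build GetCostAndUsage parameters grouped by one cost allocation tag."""
    return {
        # The End date is exclusive in Cost Explorer queries.
        "TimePeriod": {"Start": start.isoformat(), "End": end.isoformat()},
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        "GroupBy": [{"Type": "TAG", "Key": tag_key}],
    }


def cost_by_tag(response: Dict[str, Any]) -> Dict[str, float]:
    """Sum UnblendedCost per tag group across all returned time periods."""
    totals: Dict[str, float] = {}
    for period in response.get("ResultsByTime", []):
        for group in period.get("Groups", []):
            key = group["Keys"][0]
            amount = float(group["Metrics"]["UnblendedCost"]["Amount"])
            totals[key] = totals.get(key, 0.0) + amount
    return totals


query = build_cost_query(date(2024, 1, 1), date(2024, 3, 1), "project")

# Hypothetical response fragment with two monthly periods.
sample_response = {
    "ResultsByTime": [
        {"Groups": [
            {"Keys": ["project$genomics"],
             "Metrics": {"UnblendedCost": {"Amount": "100.0", "Unit": "USD"}}},
            {"Keys": ["project$reporting"],
             "Metrics": {"UnblendedCost": {"Amount": "42.5", "Unit": "USD"}}},
        ]},
        {"Groups": [
            {"Keys": ["project$genomics"],
             "Metrics": {"UnblendedCost": {"Amount": "50.0", "Unit": "USD"}}},
        ]},
    ]
}
totals = cost_by_tag(sample_response)
```

With boto3, the query could be sent as `boto3.client("ce").get_cost_and_usage(**query)` and the result passed to `cost_by_tag`.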

AWS Budgets and Forecasts

AWS Budgets helps organizations set cost thresholds and receive notifications when actual or forecasted costs exceed predefined limits. By defining budgets at the account, project, or workload level, businesses can proactively monitor and control their AWS Batch costs. Budgets can be configured to send alerts via email or through Amazon Simple Notification Service (SNS) topics. By acting on these alerts promptly, businesses can take corrective actions to stay within their allocated budget.
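
A budget with an email alert can be sketched as a request payload shaped like the parameters of the AWS Budgets CreateBudget API. The account ID, budget name, limit, threshold, and email address below are hypothetical placeholders.

```python
from typing import Any, Dict


def build_budget_request(
    account_id: str,
    budget_name: str,
    monthly_limit_usd: float,
    alert_percent: float,
    email: str,
) -> Dict[str, Any]:
    """Build CreateBudget parameters for a monthly cost budget with one email alert."""
    if monthly_limit_usd <= 0 or alert_percent <= 0:
        raise ValueError("limit and alert threshold must be positive")
    return {
        "AccountId": account_id,
        "Budget": {
            "BudgetName": budget_name,
            # The API expects the amount as a string.
            "BudgetLimit": {"Amount": f"{monthly_limit_usd:.2f}", "Unit": "USD"},
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
        },
        "NotificationsWithSubscribers": [
            {
                "Notification": {
                    # Alert on actual spend; "FORECASTED" is the other option.
                    "NotificationType": "ACTUAL",
                    "ComparisonOperator": "GREATER_THAN",
                    "Threshold": alert_percent,
                    "ThresholdType": "PERCENTAGE",
                },
                "Subscribers": [{"SubscriptionType": "EMAIL", "Address": email}],
            }
        ],
    }


# Hypothetical values, for illustration only.
budget_request = build_budget_request(
    account_id="123456789012",
    budget_name="batch-monthly",
    monthly_limit_usd=500,
    alert_percent=80.0,
    email="team@example.com",
)
```

With boto3, a payload like this could be passed as `boto3.client("budgets").create_budget(**budget_request)`; replacing the subscriber with a `"SNS"` subscription type and a topic ARN would route the alert to a topic instead.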

Implementing Cost Optimization

Implementing cost optimization in AWS Batch requires a systematic approach and adherence to best practices. By following a set of defined steps and leveraging industry best practices, organizations can effectively optimize expenses while ensuring optimal performance and reliability.

Steps to Optimize AWS Batch Costs

1. Analyze workload requirements: Begin by understanding the nature of your batch jobs and their resource needs. Determine the optimal compute capacity required for each job.

2. Choose the right pricing model: Consider the trade-offs between On-Demand and Spot Instances, and select the most suitable model based on cost and job criticality.

3. Implement resource management practices: Monitor resource utilization, identify idle instances, automate provisioning and deprovisioning, and apply tags for effective cost tracking.

4. Leverage Spot Instances strategically: Utilize Spot Instances while designing resilient and fault-tolerant architectures to maximize cost savings without sacrificing availability and reliability.

5. Continuously monitor and analyze costs: Leverage AWS Cost Explorer, set up budgets and forecasts, and regularly review your AWS Batch costs to identify areas for optimization and take timely corrective actions.

Best Practices for Cost Optimization

To further enhance cost optimization efforts, organizations can follow these best practices:

- Use managed services instead of self-managed infrastructure where possible to reduce operational overhead and benefit from built-in cost optimization features.

- Consider using AWS Savings Plans or Reserved Instances for predictable workloads to achieve additional cost savings.

- Regularly right-size instance types and adjust them based on workload demands to avoid overprovisioning or underutilization.

- Implement monitoring and alerting systems to detect and rectify sudden spikes in resource utilization, preventing unnecessary costs.

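- Periodically review and optimize data transfer costs, for example by compressing data or by keeping compute resources in the same AWS Region as the data they process. Note that Amazon S3 Transfer Acceleration speeds up transfers but adds a per-gigabyte charge, so it improves performance rather than reducing cost.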

- Leverage AWS Trusted Advisor to review recommendations on cost optimization, security, performance, and fault tolerance.
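
The right-sizing practice in the list above can be sketched as a small calculation: take the observed peak usage of a job, add headroom, and round up to a request. The 20% headroom and the 256 MiB rounding step are hypothetical choices, and the peak figures would come from your own monitoring data. The output uses the `resourceRequirements` shape found in AWS Batch job definitions.

```python
import math
from typing import Dict, List


def suggest_job_request(
    peak_vcpus: float,
    peak_memory_mib: float,
    headroom: float = 0.2,
    memory_step_mib: int = 256,
) -> Dict[str, int]:
    """Suggest vCPU and memory requests from observed peaks plus headroom."""
    if peak_vcpus <= 0 or peak_memory_mib <= 0 or headroom < 0:
        raise ValueError("peaks must be positive and headroom non-negative")
    vcpus = max(1, math.ceil(peak_vcpus * (1 + headroom)))
    # Round memory up to the next multiple of the chosen step.
    steps = math.ceil(peak_memory_mib * (1 + headroom) / memory_step_mib)
    return {"vcpus": vcpus, "memory_mib": steps * memory_step_mib}


def to_resource_requirements(request: Dict[str, int]) -> List[Dict[str, str]]:
    """Convert a suggestion into the resourceRequirements list format."""
    return [
        {"type": "VCPU", "value": str(request["vcpus"])},
        {"type": "MEMORY", "value": str(request["memory_mib"])},
    ]


# Hypothetical observation: a job peaked at 1.3 vCPUs and 3000 MiB of memory.
suggestion = suggest_job_request(peak_vcpus=1.3, peak_memory_mib=3000)
requirements = to_resource_requirements(suggestion)
```

Comparing a suggestion like this with what a job definition currently requests is one way to spot jobs that reserve far more capacity than they use.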

By incorporating these best practices into their cost optimization strategy, organizations can achieve significant cost savings and maximize the value derived from their AWS Batch workloads. Balancing cost optimization with performance and reliability is crucial to ensure long-term success and sustained growth in the competitive landscape.
