LLMOps Infrastructure: What You Need

The rapid evolution of artificial intelligence has led businesses to look for effective operational frameworks for deploying and managing machine learning models. For large language models specifically, this discipline is known as LLMOps (Large Language Model Operations). Understanding what LLMOps infrastructure entails helps organizations improve their efficiency and stay competitive.

Understanding the Basics of LLMOps Infrastructure

At the core of any successful AI initiative is the infrastructure that supports operationalizing large language models. LLMOps infrastructure encompasses not only the required technologies and tools but also the methodologies for integrating these models into business processes.

Defining LLMOps Infrastructure

LLMOps infrastructure refers to the comprehensive system that covers all aspects of developing, deploying, managing, and maintaining large language models. This includes hardware, software, and the workflows that enable organizations to efficiently utilize AI-driven applications.

It can be considered an extension of traditional MLOps with a specialized focus on large language models, whose size and behavior bring distinct requirements, such as very large memory footprints, serving across multiple accelerators, and evaluating open-ended text outputs. Organizations must build a robust infrastructure tailored to their specific needs and use cases. This often involves selecting the right cloud providers, optimizing data pipelines, and ensuring that enough computational resources are available for the intensive workloads of training and deploying these models.

Importance of LLMOps in Modern Business

As businesses adopt AI at an accelerating pace, LLMOps infrastructure becomes increasingly important. Its value lies in streamlining operations, improving decision-making, and enhancing customer experiences. Companies increasingly rely on accurate, timely insights derived from data, which makes LLMOps a crucial component of their overall strategy.

Moreover, LLMOps infrastructure helps organizations scale their AI initiatives effectively. As demand for high-quality AI outputs rises, a solid foundation lets teams support more users and more complex models without rebuilding their systems each time, which keeps cost growth more predictable. This scalability is essential in a landscape where businesses must remain agile and responsive to market changes. Integrating LLMOps practices also fosters collaboration among data scientists, engineers, and business stakeholders, helping ensure that the insights generated by language models align with strategic objectives and operational needs.

Furthermore, LLMOps infrastructure plays a vital role in maintaining the ethical use of AI technologies. By establishing guidelines and frameworks for model governance, organizations can ensure compliance with regulations and ethical standards, thereby mitigating risks associated with bias and data privacy. This proactive approach not only safeguards the organization but also builds trust with customers and stakeholders, reinforcing the importance of responsible AI deployment in today’s digital economy.

Key Components of LLMOps Infrastructure

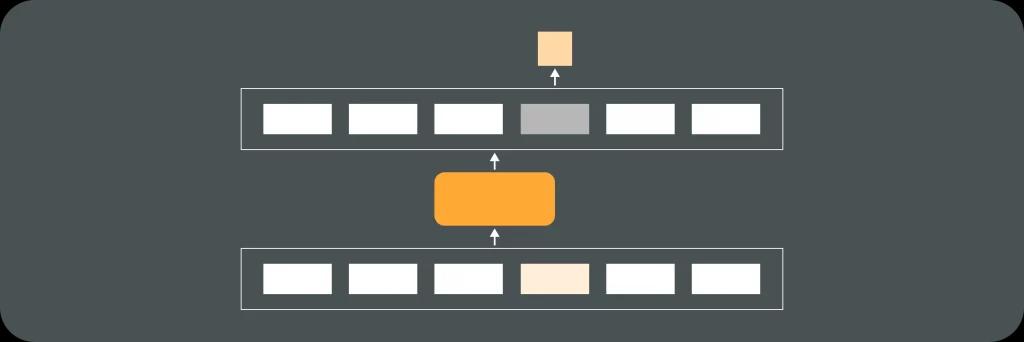

A successful LLMOps infrastructure consists of several essential components, each playing a specific role in the lifecycle of large language models. These components must work in unison so that the system remains agile and responsive to changing business needs.

Compute Accelerators

Compute accelerators such as GPUs and TPUs form the backbone of the computational work required to train language models and run inference. Their massively parallel architectures are designed to handle the dense matrix operations at the heart of these models efficiently. (These chips are sometimes loosely called data processing units, but the term DPU usually refers to a separate class of processors that offload networking, storage, and security tasks.)

Investing in sufficient accelerator capacity is vital for organizations that aim to train complex models in a reasonable time, and it reduces the costs associated with prolonged processing. The choice of accelerator also significantly influences performance, because architectures differ in memory capacity, memory bandwidth, and interconnect speed: training is largely compute-bound, while token-by-token generation at inference time is often limited by memory bandwidth. Organizations can also use distributed computing, where multiple accelerators work in parallel (for example, by splitting the data, the model's layers, or individual layers across devices), to handle larger datasets and models and to shorten training time.
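To make the sizing question concrete, here is a minimal sketch that estimates how much device memory a model's weights occupy at a given numeric precision and how many devices of a given memory size are needed to hold them. It covers inference weights only; training needs considerably more memory for gradients and optimizer state. The 1.2 overhead factor for activations and KV cache is an assumption, and the model size and 80 GB device size in the usage block are hypothetical.

```python
import math

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory in GB (1 GB = 1e9 bytes) needed just to hold the model weights."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def accelerators_needed(num_params: float, device_memory_gb: float,
                        precision: str = "fp16", overhead: float = 1.2) -> int:
    """Minimum device count so the weights, padded by an assumed overhead
    factor for activations and KV cache, fit across the devices."""
    required_gb = weight_memory_gb(num_params, precision) * overhead
    return math.ceil(required_gb / device_memory_gb)


if __name__ == "__main__":
    params = 70e9  # hypothetical 70-billion-parameter model
    print(weight_memory_gb(params, "fp16"))         # 140.0
    print(accelerators_needed(params, 80, "fp16"))  # 3
    print(accelerators_needed(params, 80, "int8"))  # 2
```

The int8 case shows why quantization is a common lever: halving bytes per parameter can reduce the number of devices a deployment needs, at some potential cost in output quality.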

Storage Systems

Storage systems play a significant role in LLMOps infrastructure by holding training data, model checkpoints, and other artifacts. Large language models are trained on massive datasets, which requires an efficient, scalable storage solution that can handle both structured and unstructured data.

Choosing between on-premises and cloud-based storage often depends on budget, scalability, and security requirements, and a hybrid approach that combines both can balance these factors. Organizations must also plan data redundancy and backup strategies to ensure data integrity and availability. As models evolve and new data arrives, a robust versioning system becomes crucial: it lets teams track changes, reproduce past training runs, and revert to previous versions when necessary.
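One common way to version datasets is content addressing: hash every file, record the hashes in a manifest, and treat the hash of the manifest as the version ID. The sketch below illustrates that idea with the standard library only; the function names and the 12-character short ID are hypothetical choices, not the API of any particular versioning tool.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def file_digest(path: Path) -> str:
    """SHA-256 of a file's contents, read in 1 MiB chunks so large shards
    are never loaded into memory all at once."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(data_dir: Path) -> dict:
    """Map every file under data_dir (by relative path) to its content hash."""
    return {
        p.relative_to(data_dir).as_posix(): file_digest(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def version_id(manifest: dict) -> str:
    """Hash the manifest itself: changing, adding, or removing any file yields a new ID."""
    canonical = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


def diff(old: dict, new: dict) -> dict:
    """List files added, removed, or modified between two manifests."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "modified": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "train.jsonl").write_text('{"text": "hello"}\n')
        v1 = build_manifest(root)
        (root / "train.jsonl").write_text('{"text": "hello, world"}\n')
        (root / "eval.jsonl").write_text('{"text": "test"}\n')
        v2 = build_manifest(root)
        print(version_id(v1), "->", version_id(v2))
        print(diff(v1, v2))
```

Storing each manifest alongside the metadata of the training run that used it is what makes "revert to a previous version" concrete: the manifest says exactly which file contents to restore.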

Networking Aspects

The networking components of LLMOps infrastructure determine how data flows between compute and storage, which affects overall system performance. High-throughput, low-latency networks are essential for connecting accelerators to one another and to storage systems.
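Why both throughput and latency matter can be shown with a simple back-of-the-envelope model: one transfer costs a fixed latency plus its size divided by usable bandwidth. This is a minimal sketch under stated assumptions; the 80% link efficiency, the 0.5 ms latency, and the checkpoint and request sizes are hypothetical, and real transfers also depend on protocol, congestion, and parallel streams.

```python
def transfer_seconds(size_bytes: float, bandwidth_gbps: float,
                     latency_ms: float, efficiency: float = 0.8) -> float:
    """Estimate one transfer as fixed latency plus size divided by usable bandwidth.

    bandwidth_gbps is the link's rated speed in gigabits per second; efficiency
    is an assumed fraction of that rate actually achieved after protocol overhead.
    """
    usable_bits_per_second = bandwidth_gbps * 1e9 * efficiency
    return latency_ms / 1000 + (size_bytes * 8) / usable_bits_per_second


if __name__ == "__main__":
    checkpoint = 140e9  # hypothetical 140 GB model checkpoint
    request = 4e3       # hypothetical 4 KB inference request payload
    for gbps in (10, 100):
        print(f"{gbps} Gb/s: checkpoint {transfer_seconds(checkpoint, gbps, 0.5):.1f} s, "
              f"request {transfer_seconds(request, gbps, 0.5) * 1000:.3f} ms")
```

For the large checkpoint, moving from 10 to 100 Gb/s cuts the transfer time roughly tenfold; for the small request, the fixed latency dominates and a faster link barely helps, which is why interactive inference paths are tuned for latency while training and data-loading paths are tuned for bandwidth.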

Organizations must also implement network security measures to protect sensitive data, which is especially important when proprietary information and customer data are used for training. Encrypting data in transit and enforcing secure access controls help safeguard it. As demand for real-time inference grows, network designs that support edge computing can also improve responsiveness by processing data closer to its source and reducing latency. This is particularly valuable for applications such as chatbots and virtual assistants, where the user experience depends on fast responses.

Building Your LLMOps Infrastructure

Constructing an effective LLMOps infrastructure is a multi-step process that requires careful planning and execution. Organizations must lay a solid foundation that can support long-term AI initiatives.

Planning and Designing

Effective planning begins with assessing current organizational capabilities and identifying gaps. Establishing clear goals for AI usage lets companies design infrastructure tailored to those objectives.

This phase should also focus on scalability, flexibility, and resilience, ensuring that the infrastructure can adapt to future advancements in technology or changes in business strategy. Additionally, organizations should consider the compliance and ethical implications of their AI strategies, as these factors can significantly influence design choices. By incorporating a framework for governance, companies can ensure that their AI systems operate within legal and ethical boundaries, fostering trust and accountability.

Implementation Process

The implementation of LLMOps infrastructure involves the selection of appropriate technologies and the integration of systems. This can include installing hardware, choosing software solutions for model management, and configuring networks to support efficient data flow.

A successful implementation often requires a dedicated team with expertise in AI deployment, and following established practices such as staged rollouts and tested rollback procedures reduces the risk of operational disruptions. It is also crucial to set up monitoring that tracks model performance after deployment; continuous evaluation lets organizations fine-tune their models so they remain effective as requirements change. Training staff on the new systems and processes is equally important, because it equips teams to use the full potential of the LLMOps infrastructure and fosters a culture of innovation and adaptability.

Managing and Maintaining LLMOps Infrastructure

Sustaining LLMOps infrastructure over time is just as critical as the initial setup. This involves ongoing management and maintenance to ensure optimal performance and security.

Regular Monitoring and Updates

Organizations should invest in tools that continuously monitor their LLMOps infrastructure. By tracking key performance indicators such as model accuracy, response latency, error rates, and resource utilization, companies can address inefficiencies before they affect users.
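As a minimal sketch of continuous monitoring, the class below keeps a rolling window of recent requests and flags two operational KPIs: 95th-percentile latency and error rate. Model accuracy usually requires scoring outputs against evaluation data and is not shown. The class name, window size, and thresholds are hypothetical placeholders for an organization's own service-level objectives; a production setup would typically export these metrics to a dedicated monitoring system instead.

```python
from __future__ import annotations

import statistics
from collections import deque


class RollingMonitor:
    """Keeps the most recent `window` requests and reports KPI breaches.

    Default thresholds are hypothetical placeholders for your own SLOs.
    """

    def __init__(self, window: int = 100, p95_latency_ms: float = 2000.0,
                 max_error_rate: float = 0.05) -> None:
        self.latencies = deque(maxlen=window)  # oldest entries drop off automatically
        self.failures = deque(maxlen=window)   # 1 for a failed request, 0 otherwise
        self.p95_latency_ms = p95_latency_ms
        self.max_error_rate = max_error_rate

    def record(self, latency_ms: float, ok: bool) -> None:
        self.latencies.append(latency_ms)
        self.failures.append(0 if ok else 1)

    def p95(self) -> float:
        # n=20 yields 19 cut points (5%, 10%, ..., 95%); index 18 is the 95th percentile.
        return statistics.quantiles(self.latencies, n=20)[18]

    def error_rate(self) -> float:
        return sum(self.failures) / len(self.failures)

    def alerts(self) -> list[str]:
        if len(self.latencies) < 2:
            return []  # too few samples for a meaningful percentile
        out = []
        if self.p95() > self.p95_latency_ms:
            out.append(f"p95 latency {self.p95():.0f} ms exceeds {self.p95_latency_ms:.0f} ms")
        if self.error_rate() > self.max_error_rate:
            out.append(f"error rate {self.error_rate():.1%} exceeds {self.max_error_rate:.1%}")
        return out
```

A rolling window reacts to recent degradation without being diluted by hours of healthy history; the trade-off is that a small window makes alerts noisier.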

Regular updates to both software and hardware components will also be necessary to keep up with advancements in AI technology and the evolving needs of the business. This includes not only updating algorithms and models but also ensuring that the underlying hardware is capable of supporting increased workloads as the organization scales. For instance, as more data is ingested and processed, the computational demands may shift, necessitating the adoption of more powerful GPUs or optimized cloud solutions to maintain performance levels.

Security Measures for LLMOps Infrastructure

With the increasing reliance on data-driven models, security becomes a top priority. Organizations must implement robust security measures to protect sensitive information stored within the infrastructure.

This includes using encryption protocols, access controls, and conducting regular security audits. Ensuring compliance with industry standards and regulations will help mitigate risks associated with data breaches and cyber threats. Furthermore, organizations should consider adopting a zero-trust security model, which assumes that threats could be both external and internal. This approach involves verifying every request as though it originates from an open network, thereby enhancing the overall security posture. Training employees on security best practices and fostering a culture of vigilance can also play a pivotal role in safeguarding the infrastructure against potential vulnerabilities.
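Zero trust spans identity, device posture, and least-privilege access, but one concrete ingredient is authenticating every individual request, even from inside the network. The sketch below shows that single piece with an HMAC signature over the request plus a timestamp check against replays, using only Python's standard `hmac` and `hashlib` modules. The shared secret, the `/v1/generate` path, and the 300-second window are hypothetical; many deployments use mutual TLS or signed tokens instead.

```python
import hashlib
import hmac
import time
from typing import Optional

MAX_SKEW_SECONDS = 300  # assumed replay window; tune to your own policy


def sign(secret: bytes, method: str, path: str, body: bytes, timestamp: int) -> str:
    """HMAC-SHA256 over the request's method, path, timestamp, and body."""
    message = b"\n".join([method.encode(), path.encode(), str(timestamp).encode(), body])
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(secret: bytes, method: str, path: str, body: bytes,
           timestamp: int, signature: str, now: Optional[int] = None) -> bool:
    """Accept a request only if it is fresh and its signature matches."""
    now = int(time.time()) if now is None else now
    if abs(now - timestamp) > MAX_SKEW_SECONDS:
        return False  # too old or future-dated: treat as a possible replay
    expected = sign(secret, method, path, body, timestamp)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, signature)


if __name__ == "__main__":
    key = b"example-shared-secret"  # hypothetical; load real keys from a secrets manager
    ts = int(time.time())
    body = b'{"prompt": "hello"}'
    sig = sign(key, "POST", "/v1/generate", body, ts)
    print(verify(key, "POST", "/v1/generate", body, ts, sig))         # True
    print(verify(key, "POST", "/v1/generate", b"tampered", ts, sig))  # False
```

Because the check runs on every call regardless of where the call originates, a compromised internal host cannot simply reuse network position to reach the model endpoint.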

Future Trends in LLMOps Infrastructure

The landscape of LLMOps is continuously evolving with several emerging trends that are set to reshape its infrastructure. Organizations need to stay informed to remain competitive in their respective markets.

Impact of AI and Machine Learning

As artificial intelligence and machine learning advance, they will directly influence the architecture of LLMOps infrastructure. Techniques such as neural architecture search and meta-learning may further improve model training processes.

These developments could enable organizations to optimize their models more efficiently, potentially leading to better performance and lower operational costs.

The Role of Cloud Computing in LLMOps

Cloud computing is increasingly playing a vital role in easing the complexities of managing LLMOps infrastructure. By leveraging cloud services, organizations can scale their operations quickly and access high-performance computing resources without the need for substantial capital investments.
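Elastic scaling in the cloud is usually driven by a simple proportional rule. The Kubernetes Horizontal Pod Autoscaler, for example, documents computing desired replicas as ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies a rule modeled on that one to an inference service; the metric (for example, average GPU utilization or in-flight requests per replica), the tolerance, and the replica bounds are assumptions for illustration, not any platform's defaults.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Proportional autoscaling: scale replica count by how far the metric is from target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    # Clamp to configured bounds so a spike cannot exhaust quota or budget.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas running at 90% average GPU utilization against a 60% target.
    print(desired_replicas(4, 90.0, 60.0))
    # Load drops to 15%: scale in, but never below min_replicas.
    print(desired_replicas(4, 15.0, 60.0))
```

The tolerance band keeps the service from resizing on small fluctuations, and the upper bound caps spend; both are policy choices each organization tunes for its own traffic.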

Additionally, a cloud-based approach enables enhanced collaboration and access to advanced tools that are continuously updated and improved to support AI development and deployment.

In conclusion, building and maintaining a robust LLMOps infrastructure is essential for organizations striving to harness the full potential of large language models. As the field continues to evolve, staying current with trends and best practices will ensure long-term success and sustainability.
