Reinforcement Learning Agents Explained

Reinforcement learning (RL) is an area of machine learning concerned with how agents should act in an environment to maximize cumulative reward. This article explores the fundamental concepts of reinforcement learning agents: their architecture, types, learning processes, and the challenges they face.

Understanding the Basics of Reinforcement Learning

Before diving into the intricate workings of RL agents, it is essential to understand the fundamental concepts of reinforcement learning itself. At its core, RL is a trial-and-error learning mechanism where agents learn to make decisions by interacting with their environments.

Defining Reinforcement Learning

Reinforcement learning can be defined as a computational approach where agents learn to achieve a goal by taking actions in an environment and receiving feedback in the form of rewards or punishments. This feedback mechanism allows the agent to refine its decision-making strategies over time.

Unlike supervised learning, where the model is trained on labeled datasets, RL relies on the agent’s own experience in the environment. Through many interactions, the agent gradually learns which actions yield the most favorable outcomes. This process is often modeled using Markov Decision Processes (MDPs), which provide a mathematical framework describing the environment in terms of states, actions, transition probabilities, and rewards. By leveraging MDPs, researchers can analyze the efficiency of various learning algorithms and reason about how agents will behave in complex situations.
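To make the MDP framing concrete, here is a minimal sketch of a two-state MDP written as plain Python data. The state names, action names, probabilities, and rewards are all invented for illustration; they do not come from any particular problem.

```python
import random

# Hypothetical two-state MDP, invented for illustration.
# TRANSITIONS[state][action] is a list of (probability, next_state, reward).
TRANSITIONS = {
    "cool": {
        "slow": [(1.0, "cool", 1.0)],
        "fast": [(0.5, "cool", 2.0), (0.5, "hot", 2.0)],
    },
    "hot": {
        "slow": [(1.0, "cool", 0.0)],
        "fast": [(1.0, "hot", -10.0)],
    },
}


def step(state, action, rng):
    """Sample one transition (next_state, reward) from the MDP."""
    outcomes = TRANSITIONS[state][action]
    threshold = rng.random()
    cumulative = 0.0
    for probability, next_state, reward in outcomes:
        cumulative += probability
        if threshold < cumulative:
            return next_state, reward
    # Guard against floating-point round-off in the probabilities.
    return outcomes[-1][1], outcomes[-1][2]


rng = random.Random(0)
print(step("cool", "fast", rng))
```

The next state and reward depend only on the current state and action, which is the Markov property that gives MDPs their name.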

The Role of Agents in Reinforcement Learning

In the realm of reinforcement learning, an agent is any entity that can perceive its environment and take actions within it. The agent’s primary objective is to maximize the total cumulative reward over time. This necessitates a level of decision-making capability, often represented through policies that dictate how the agent behaves.
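Cumulative reward is commonly formalized as a discounted return, in which later rewards count for less than earlier ones. The sketch below assumes a discount factor named `gamma`; the article does not specify one, so the name and its default value are illustrative choices.

```python
def discounted_return(rewards, gamma=0.9):
    """Return the sum of gamma**t * rewards[t], computed back to front."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Three rewards of 1.0 with gamma = 0.5 give 1 + 0.5 + 0.25 = 1.75.
print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))
```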

Agents can either be simple or incredibly complex, depending on the problem they are designed to solve. For instance, a basic agent might utilize a straightforward strategy such as Q-learning, where it learns the value of actions in given states. In contrast, more sophisticated agents may incorporate deep learning techniques, enabling them to process high-dimensional inputs like images or complex game environments. Regardless of complexity, all reinforcement learning agents share the fundamental characteristic of learning from their experiences. This adaptability is what makes RL particularly powerful in dynamic and uncertain environments, such as robotics, gaming, and autonomous systems, where traditional programming methods may fall short.

The Architecture of Reinforcement Learning Agents

The architecture of reinforcement learning agents is composed of several critical components that facilitate their function. Each component plays a vital role in ensuring that the agent can learn effectively from its environment.

Components of a Reinforcement Learning Agent

A typical reinforcement learning system is described by four primary elements: the policy, the reward signal, the value function, and the environment the agent interacts with.

- Policy: This is the strategy employed by the agent to determine the next action based on the current state. Policies can be deterministic or stochastic.

- Reward Signal: This measures the success of the agent’s actions, providing immediate feedback which can be positive or negative.

- Value Function: This is an estimate of the expected cumulative future reward from each state (or state-action pair), guiding the agent’s decision-making process.

- Environment: The context in which the agent operates, providing states and rewards based on the actions taken by the agent.

How Agents Interact with the Environment

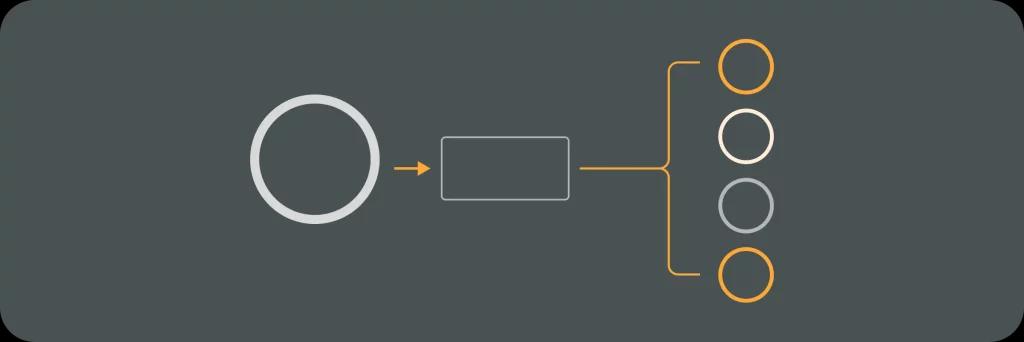

RL agents learn through a cycle of interaction with their environment, often referred to as the “agent-environment loop.” At each step, the agent observes the current state of the environment and selects an action based on its policy. After the action is executed, the environment responds by transitioning to a new state and providing a reward.

This continuous feedback loop allows the agent to adjust its policy over time, learning from both successful and unsuccessful actions. This dynamic interplay ensures that the agent refines its understanding and maximizes long-term rewards.
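The loop described above can be sketched in a few lines. `CorridorEnv` and `run_episode` are hypothetical names made up for this example, and the policy here is fixed (random), so the sketch shows only the interaction cycle, not learning.

```python
import random


class CorridorEnv:
    """Hypothetical 1-D corridor: start at cell 0, goal at the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position

    def step(self, action):
        """Apply action (-1 = left, +1 = right); return (state, reward, done)."""
        self.position = min(max(self.position + action, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 1.0 if done else -0.1  # small cost per step, bonus at the goal
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one observe -> act -> reward loop and return the total reward."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # agent selects an action
        state, reward, done = env.step(action)  # environment responds
        total_reward += reward
        if done:
            break
    return total_reward


rng = random.Random(0)
random_policy = lambda state: rng.choice([-1, 1])
print(run_episode(CorridorEnv(), random_policy))
```

A learning agent would use each `(state, action, reward, next_state)` tuple produced inside the loop to update its policy or value estimates.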

Moreover, the complexity of the environment can significantly influence the learning process. In environments with high dimensionality or stochastic elements, agents may require more sophisticated strategies to navigate effectively. Techniques such as experience replay and target networks, both popularized by deep Q-networks (DQN), can be employed to stabilize learning and improve convergence. Experience replay stores past transitions and reuses them for training, while a target network is a slowly updated copy of the value network that keeps learning targets from shifting at every step. Together, these methods let agents learn from past experiences more efficiently and make better-informed decisions in future interactions.
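As a rough illustration of experience replay, the sketch below stores transitions in a fixed-size buffer and samples them uniformly at random. The class name `ReplayBuffer` and its method names are assumptions for this example, not an API from a specific library, and target networks are not shown.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of past transitions, sampled uniformly at random."""

    def __init__(self, capacity, seed=None):
        self.buffer = deque(maxlen=capacity)  # oldest items are dropped first
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        """Return a random batch of stored transitions, without replacement."""
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


buffer = ReplayBuffer(capacity=3, seed=0)
for i in range(5):
    buffer.add(i, 0, 0.0, i + 1, False)
print(len(buffer), buffer.sample(2))
```

Sampling at random breaks the correlation between consecutive transitions, which is one reason replay tends to stabilize training.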

Additionally, the exploration-exploitation trade-off is a crucial aspect of reinforcement learning. Agents must balance the need to explore new actions that may yield higher rewards with the need to exploit known actions that have previously provided favorable outcomes. This balance is often managed through strategies such as epsilon-greedy methods or Upper Confidence Bound (UCB) approaches, which help agents navigate the vast space of possible actions while still homing in on the most rewarding ones.
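A minimal sketch of the UCB idea, using the UCB1 rule in a simple multi-armed bandit setting (no states), is shown below. The function name `ucb1_select` and the parameter `c` are illustrative assumptions.

```python
import math


def ucb1_select(counts, values, c=2.0):
    """Pick an arm index using the UCB1 rule.

    counts[i] is how often arm i was pulled, values[i] its mean reward so far.
    """
    # Pull every arm once before the confidence bound is defined.
    for arm, count in enumerate(counts):
        if count == 0:
            return arm
    total = sum(counts)
    scores = [
        values[arm] + math.sqrt(c * math.log(total) / counts[arm])
        for arm in range(len(counts))
    ]
    return scores.index(max(scores))


# The rarely tried arm gets a large exploration bonus and is selected.
print(ucb1_select(counts=[1000, 1], values=[0.5, 0.4]))
```

The bonus term shrinks as an arm is pulled more often, so exploration fades naturally where the estimates are already reliable.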

Types of Reinforcement Learning Agents

Reinforcement learning agents can be categorized into three primary types: value-based agents, policy-based agents, and model-based agents. Each has differing strategies and applications, determined primarily by their learning paradigms.

Value-Based Agents

Value-based agents learn to estimate the value of taking specific actions in given states. They optimize their policies by selecting actions that maximize expected future rewards. Techniques such as Q-learning are prominent examples of value-based approaches.

These agents maintain a value function representing the expected cumulative reward for each state-action pair. By continually updating this value function, agents can learn the optimal policy to adopt. One of the key characteristics of value-based agents is their reliance on the Bellman equation, which provides a recursive relationship for calculating the value of a state from the immediate reward and the values of subsequent states. This foundational principle allows these agents to converge towards optimal policies over time, making them particularly effective in environments where the state and action spaces are small and discrete enough to enumerate.
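The following is a minimal sketch of tabular Q-learning on a made-up five-state chain. The environment, the hyperparameters (`alpha`, `gamma`, `episodes`), and the purely random behaviour policy are assumptions chosen to keep the example short.

```python
import random

N_STATES = 5          # hypothetical chain: states 0..4, state 4 is terminal
ACTIONS = [-1, +1]    # move left or right


def env_step(state, action):
    """Deterministic chain dynamics; reward 1.0 only on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            a = rng.randrange(len(ACTIONS))    # purely random behaviour policy
            next_state, reward, done = env_step(state, ACTIONS[a])
            # Bellman target: immediate reward plus discounted best next value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q_table = q_learning()
# Greedy action in each non-terminal state according to the learned values.
greedy = [ACTIONS[row.index(max(row))] for row in q_table[:-1]]
print(greedy)
```

Because Q-learning is off-policy, the agent can act randomly while still learning values for the greedy policy.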

Policy-Based Agents

In contrast to value-based agents, policy-based agents parameterize their policies directly and optimize them using gradient ascent. These agents are particularly useful in environments with high-dimensional action spaces, where learning through action values can be inefficient.

Policy gradient methods enable these agents to improve the policy directly, adjusting its parameters in the direction that increases expected reward rather than deriving actions from a learned value function. As a result, policy-based methods tend to perform well where value-based methods struggle, for example in continuous control. Additionally, policy-based agents can represent stochastic policies, allowing them to explore a wider range of actions and adapt to changing environments more fluidly. This adaptability makes them suitable for applications in robotics and game playing, where the ability to navigate uncertain conditions is paramount.
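To illustrate the idea, here is a REINFORCE-style sketch on a stateless two-armed bandit with a softmax policy. The reward values, learning rate, and function names are illustrative assumptions; practical implementations usually add a baseline and operate on full episodes.

```python
import math
import random


def softmax(preferences):
    """Convert action preferences into a probability distribution."""
    peak = max(preferences)
    exps = [math.exp(p - peak) for p in preferences]
    total = sum(exps)
    return [e / total for e in exps]


def train_policy(arm_rewards, steps=2000, learning_rate=0.1, seed=0):
    """REINFORCE-style gradient ascent on a stateless (bandit) problem."""
    rng = random.Random(seed)
    theta = [0.0] * len(arm_rewards)  # policy parameters, one per action
    for _ in range(steps):
        probs = softmax(theta)
        action = rng.choices(range(len(theta)), weights=probs)[0]
        reward = arm_rewards[action]
        # Gradient of log pi(action) w.r.t. theta[i] is 1[i == action] - probs[i].
        for i in range(len(theta)):
            indicator = 1.0 if i == action else 0.0
            theta[i] += learning_rate * reward * (indicator - probs[i])
    return softmax(theta)


# The second arm pays more, so its probability should end up dominant.
print(train_policy([0.2, 1.0]))
```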

Model-Based Agents

Model-based agents differ from the previous two types in that they build a model of the environment. By simulating the environment’s dynamics, these agents can generate potential action sequences and outcomes ahead of time, allowing for more strategic decision-making.

This approach can significantly enhance planning and can lead to more efficient learning. However, building an accurate model can be challenging, especially in complex environments with many variables. These agents often employ techniques such as Monte Carlo Tree Search (MCTS) to evaluate potential future states and make informed decisions based on simulated outcomes. Moreover, the ability to incorporate prior knowledge about the environment can further improve their performance, enabling them to make predictions that reduce the exploration needed to find optimal policies. This makes model-based agents particularly valuable in domains such as autonomous driving and complex game strategies, where understanding the environment’s dynamics is crucial for success.
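The sketch below shows planning with a model in its simplest form. It uses value iteration over a small hand-written model rather than MCTS, which is considerably more involved; the three-state model and all names are invented for illustration, and a real agent would usually have to learn the model from experience.

```python
# Hypothetical known model: MODEL[state][action] = (next_state, reward).
MODEL = {
    "A": {"stay": ("A", 0.0), "go": ("B", 0.0)},
    "B": {"stay": ("B", 0.0), "go": ("C", 1.0)},
    "C": {},  # terminal state, no actions
}


def value_iteration(model, gamma=0.9, sweeps=50):
    """Plan with the model alone; no interaction with a real environment."""
    values = {state: 0.0 for state in model}
    for _ in range(sweeps):
        for state, actions in model.items():
            if actions:
                values[state] = max(
                    reward + gamma * values[next_state]
                    for next_state, reward in actions.values()
                )
    return values


def greedy_action(model, values, state, gamma=0.9):
    """Choose the action whose simulated outcome looks best."""
    return max(
        model[state],
        key=lambda a: model[state][a][1] + gamma * values[model[state][a][0]],
    )


values = value_iteration(MODEL)
print(values, greedy_action(MODEL, values, "A"))
```

The agent works out that "go" is the better choice in state A purely by simulating outcomes, before taking a single real step.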

The Learning Process of Reinforcement Agents

The learning process of reinforcement learning agents is intricate, balancing exploration and exploitation while continuously adapting to feedback. This dual process is essential for effective learning and achieving optimal performance.

Exploration and Exploitation

One of the fundamental dilemmas faced by reinforcement learning agents is finding the right balance between exploration (trying new actions) and exploitation (choosing the best-known actions). Exploration is crucial for discovering new strategies, while exploitation ensures the agent capitalizes on what it has already learned.

Strategies such as ε-greedy are implemented to manage this balance, allowing agents to explore a certain percentage of the time while exploiting their learned knowledge the rest of the time. Striking an appropriate balance is key to effective learning and long-term success.
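A minimal ε-greedy selector might look like the following. The value estimates passed in are made up, and a fixed `epsilon` is used for simplicity; in practice it is often decayed over time.

```python
import random


def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon explore; otherwise exploit the best estimate."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore: uniform random action
    return q_values.index(max(q_values))     # exploit: greedy action


rng = random.Random(0)
picks = [epsilon_greedy([0.1, 0.5, 0.2], epsilon=0.1, rng=rng) for _ in range(1000)]
print(picks.count(1) / len(picks))
```

With `epsilon=0.1` and three actions, the best-known action should be chosen roughly 93% of the time: 90% from exploitation plus a third of the 10% spent exploring.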

Reward System in Reinforcement Learning

The reward system in reinforcement learning plays a critical role in shaping the agent’s learning experience. Rewards signal to the agent which actions were successful and which were not, guiding its policy updates.

The design of the reward structure needs careful consideration; rewards must be timely, informative, and aligned with the agent’s objectives. A well-defined reward system fosters efficient learning and can dramatically influence the agent’s performance.

Challenges in Reinforcement Learning

Despite its promise, reinforcement learning presents several significant challenges that researchers and practitioners must overcome to build proficient agents.

Overcoming the Curse of Dimensionality

As the number of variables describing the state or action grows, the number of possible states and actions grows exponentially, a phenomenon known as the curse of dimensionality. This makes it increasingly difficult for agents to sample enough experience to learn effectively.

To tackle this challenge, techniques such as function approximation and experience replay are employed. Function approximation helps generalize learning across similar states, while experience replay allows agents to learn from past experiences more efficiently.
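As a rough sketch of function approximation, the example below replaces a value table with a linear function of hand-made features and applies one semi-gradient TD(0) update. The feature choice and all names are assumptions for illustration; deep RL replaces the linear function with a neural network.

```python
def features(state):
    """Hypothetical hand-made features for a numeric state: bias and the state."""
    return [1.0, float(state)]


def predict(weights, state):
    """Linear value estimate: dot product of weights and features."""
    return sum(w * x for w, x in zip(weights, features(state)))


def td_update(weights, state, reward, next_state, done, alpha=0.01, gamma=0.9):
    """One semi-gradient TD(0) step; returns the updated weight list."""
    target = reward + (0.0 if done else gamma * predict(weights, next_state))
    error = target - predict(weights, state)
    return [w + alpha * error * x for w, x in zip(weights, features(state))]


weights = [0.0, 0.0]
weights = td_update(weights, state=2, reward=1.0, next_state=3, done=True, alpha=0.1)
# State 3 was never updated directly, yet its estimate has changed too.
print(predict(weights, 2), predict(weights, 3))
```

One update for state 2 also shifts the estimate for state 3, which is the generalization across similar states that a lookup table cannot provide.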

Dealing with Partial Observability

In real-world scenarios, agents often face environments with incomplete information, known as partial observability. In such cases, the agent cannot fully observe the environment’s state, complicating the decision-making process.

To address this, agents can utilize techniques such as recurrent neural networks or belief states to maintain and update internal representations of the environment. This allows them to leverage past observations and make better-informed choices despite lacking complete information.
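A belief state can be maintained with a Bayes update, sketched below for a made-up problem with two hidden states and a noisy sensor. The state names, observation names, and the 85% accuracy figure are invented, and the sketch assumes the hidden state does not change between observations; a full update would also apply the transition model.

```python
def update_belief(belief, observation, likelihood):
    """Bayes update: new belief[s] is proportional to P(observation | s) * belief[s]."""
    unnormalized = {
        state: likelihood[state][observation] * prob
        for state, prob in belief.items()
    }
    total = sum(unnormalized.values())
    return {state: value / total for state, value in unnormalized.items()}


# Hypothetical sensor: reports the true side 85% of the time.
LIKELIHOOD = {
    "left": {"hear_left": 0.85, "hear_right": 0.15},
    "right": {"hear_left": 0.15, "hear_right": 0.85},
}

belief = {"left": 0.5, "right": 0.5}
belief = update_belief(belief, "hear_left", LIKELIHOOD)
print(belief)
```

Each further observation sharpens or corrects the belief, and the agent can then choose actions based on this distribution instead of the unobservable true state.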

In conclusion, reinforcement learning agents are a crucial aspect of the machine learning landscape, functioning through unique learning processes and architectures to overcome various challenges. Understanding these agents can provide valuable insights into their potential applications across numerous fields.
