AWS Native Serverless Data & ML Pipelines Implementation

About the client

The client has been building a data platform for data science use cases. The goal was to ingest large volumes of data from disparate sources, transform and enrich the ingested data into a unified representation, and provide the result to machine learning models for training and prediction.

The data flow comprises the following components:

– Ingestion Layer: The set of tools and pipelines that enable data acquisition from different sources, such as relational databases, REST APIs, semi-structured file formats, studies, and other documents.

– Data Lake: The centralized storage for all data assets with complete governance including inventory, provenance, access control, and audit.

– Batch Layer: The set of pipelines that cleanse, format, enrich, and label data for further ML model training.

– Feature Store: The single place to keep, curate, and serve features to machine learning (ML) models.

Challenge

The client faced high costs for developing, deploying, and, most importantly, operating the data platform, including the Data Lake, Ingestion, and ML Pipelines. The pipelines mostly ran on EC2 instances, which increased operating costs and meant that deploying and testing the pipelines in lower environments took a significant amount of time.

Solution

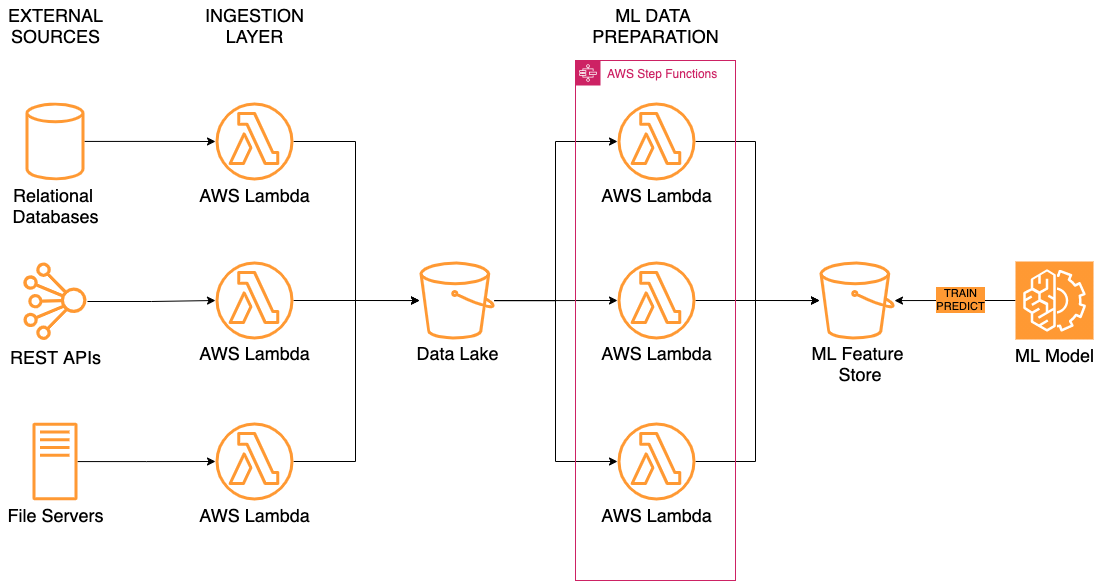

The proposed solution split the monolithic ingestion service and ML data preparation pipelines into several small, autonomous serverless functions.

The first stage is the Ingestion Layer, where custom-developed AWS Lambda functions ingest data from different sources and drop it into the Data Lake as unstructured data. Then, a set of custom AWS Step Functions workflows retrieves the unstructured data from the Data Lake and transforms it into a format that can be consumed by the ML model.
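The ingestion step described above can be illustrated with a minimal Python sketch. It is not the client's actual code: it assumes the Data Lake is backed by Amazon S3, and the key layout, the `DATA_LAKE_BUCKET` environment variable, the event fields `records` and `source`, and the helper names are all hypothetical. The storage call is passed in as a parameter so that the core logic can be exercised without AWS access.

```python
import json
from datetime import datetime, timezone
from typing import Any, Callable, Iterable, Optional


def build_object_key(source: str, ingested_at: datetime, batch_id: str) -> str:
    """Build a key partitioned by source and ingestion date (hypothetical layout)."""
    return f"raw/source={source}/dt={ingested_at:%Y-%m-%d}/{batch_id}.jsonl"


def ingest_batch(
    records: Iterable[dict],
    source: str,
    batch_id: str,
    put_object: Callable[..., Any],
    bucket: str,
    now: Optional[datetime] = None,
) -> str:
    """Write one batch of raw, untransformed records as a JSON Lines object."""
    ingested_at = now or datetime.now(timezone.utc)
    key = build_object_key(source, ingested_at, batch_id)
    body = "\n".join(json.dumps(record, sort_keys=True) for record in records)
    # put_object is expected to accept the same keyword arguments as
    # the boto3 S3 client's put_object method.
    put_object(Bucket=bucket, Key=key, Body=body.encode("utf-8"))
    return key


def handler(event: dict, context: Any) -> dict:
    """Lambda entry point; the event shape used here is an assumption."""
    import os

    import boto3  # provided by the AWS Lambda Python runtime

    s3 = boto3.client("s3")
    key = ingest_batch(
        records=event["records"],
        source=event["source"],
        batch_id=context.aws_request_id,
        put_object=s3.put_object,
        bucket=os.environ["DATA_LAKE_BUCKET"],  # hypothetical variable name
    )
    return {"key": key}
```

Keeping each function this small is what makes it practical to deploy and test one source at a time; transformation is deliberately left to the downstream Step Functions workflows.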

High-level solution diagram

Technologies

AWS Lambda, AWS Step Functions, Terraform, GitLab CI.

Result

The solution significantly reduced the cost and time spent on development and deployment. This was achieved by introducing infrastructure as code, which quickly and seamlessly deploys infrastructure to multiple environments (Dev, Stage, Production). In addition, during the implementation the team developed a set of Lambda functions and Step Functions workflows that trigger data processing in response to events, which eliminates cases where EC2 instances sit idle, and created cost-efficient Data Lakes.
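As a rough illustration of event-triggered processing, the sketch below shows a Lambda function that starts a Step Functions execution for every object that lands in the Data Lake. It assumes an S3-backed Data Lake with S3 event notifications wired to the function; the `STATE_MACHINE_ARN` environment variable, the execution input shape, and the helper names are hypothetical and not taken from the client's implementation.

```python
import json
from typing import Any, Callable, List, Tuple
from urllib.parse import unquote_plus


def extract_objects(event: dict) -> List[Tuple[str, str]]:
    """Return (bucket, key) pairs from an S3 event notification payload."""
    return [
        # Object keys arrive URL-encoded in S3 event notifications.
        (r["s3"]["bucket"]["name"], unquote_plus(r["s3"]["object"]["key"]))
        for r in event.get("Records", [])
    ]


def start_pipelines(
    event: dict,
    start_execution: Callable[..., dict],
    state_machine_arn: str,
) -> List[str]:
    """Start one workflow execution per newly arrived object."""
    started = []
    for bucket, key in extract_objects(event):
        # start_execution is expected to accept the same keyword arguments
        # as the boto3 Step Functions client's start_execution method.
        response = start_execution(
            stateMachineArn=state_machine_arn,
            input=json.dumps({"bucket": bucket, "key": key}),
        )
        started.append(response["executionArn"])
    return started


def handler(event: dict, context: Any) -> dict:
    """Lambda entry point invoked by an S3 event notification."""
    import os

    import boto3  # provided by the AWS Lambda Python runtime

    sfn = boto3.client("stepfunctions")
    arns = start_pipelines(
        event,
        sfn.start_execution,
        os.environ["STATE_MACHINE_ARN"],  # hypothetical variable name
    )
    return {"executions": arns}
```

Because compute is only invoked when an object actually arrives, nothing runs (or is billed for compute) between events, which is the idle-capacity saving described above.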
