Load balancing is a core DevOps practice that keeps IT infrastructure running smoothly. It distributes workload across multiple servers or computing resources to improve performance, availability, and scalability. By spreading incoming traffic so that no single machine becomes a bottleneck, load balancing makes the system as a whole more efficient and reliable.

Understanding the Concept of Load Balancing

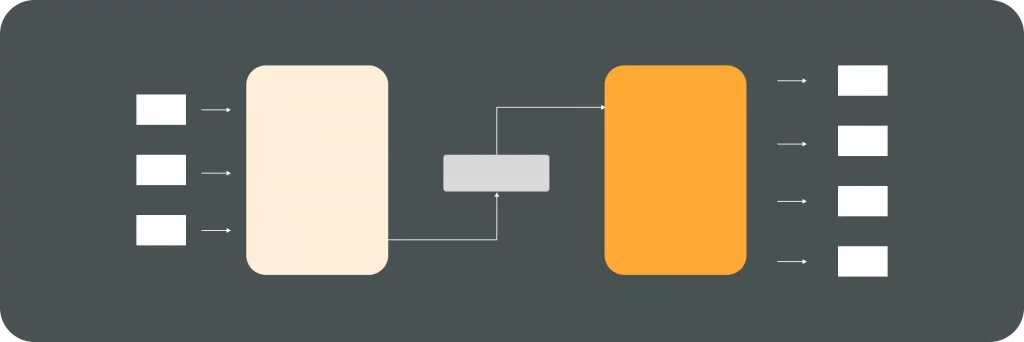

Load balancing, in simple terms, refers to the process of evenly distributing incoming requests, such as web traffic, across multiple servers or resources. This approach ensures that no single server or resource becomes overwhelmed with excessive load, which can hamper performance and lead to downtime. Instead, the workload is efficiently distributed, allowing servers to function optimally and deliver prompt responses to user requests.

Load balancing algorithms come in various types, each designed to address specific needs and scenarios. Some algorithms focus on evenly distributing requests based on server capacity, while others consider factors like response time or current server load. By selecting the appropriate algorithm, organizations can tailor their load balancing strategy to best suit their requirements.

Definition of Load Balancing

Load balancing is the technique of evenly distributing incoming requests across multiple servers or resources to optimize performance, availability, and scalability. It helps prevent bottlenecks and ensures that all servers are effectively utilized to handle incoming traffic.

Importance of Load Balancing in IT Infrastructure

Load balancing plays a crucial role in maintaining a robust and highly available IT infrastructure. By distributing workload evenly, it prevents any single server or resource from becoming overloaded, hence reducing the chances of downtime and ensuring a seamless user experience. It also enables easy scalability, making it possible to handle increasing traffic without compromising performance.

Load balancing can also soften the impact of DDoS (Distributed Denial of Service) attacks. Because incoming traffic is spread across multiple servers, it is harder for an attack to overwhelm a single server and disrupt the service. Load balancing is not a complete DDoS defense on its own, but it is a useful layer in protecting the infrastructure and keeping the service available to users.

The Role of Load Balancing in DevOps

In the world of DevOps, load balancing plays a pivotal role in enhancing performance, availability, and scalability of applications and services. By implementing load balancing techniques, organizations can ensure that their systems can handle high volumes of traffic while maintaining optimal performance.

A load balancer acts as a traffic cop for incoming requests, ensuring that no single server is overwhelmed with traffic while others remain underutilized. This distribution of workload not only prevents server overload but also minimizes downtime by redirecting traffic away from servers that are failing or undergoing maintenance.

Enhancing Performance and Availability

One of the primary benefits of load balancing in DevOps is the improvement in performance and availability of applications. By evenly distributing the workload, load balancers redirect requests to servers that have the available resources to handle them efficiently. This ensures that users experience fast response times and can access applications and services without interruptions.

Moreover, load balancers can perform health checks on servers to ensure they are operating optimally. If a server fails to pass the health check, the load balancer can automatically reroute traffic to healthy servers, maintaining high availability and reliability of the system.
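The health-check behavior described above can be sketched in a few lines. This is a minimal illustration, not how any particular load balancer implements it: the `healthy_backends` function, the `probe` callable, and the server names are all hypothetical, and a real probe would usually be an HTTP or TCP check with a timeout.

```python
from typing import Callable, List


def healthy_backends(backends: List[str], probe: Callable[[str], bool]) -> List[str]:
    """Return only the backends whose health probe succeeds."""
    healthy = []
    for backend in backends:
        try:
            if probe(backend):
                healthy.append(backend)
        except Exception:
            # A probe that raises (timeout, refused connection) counts as a failure.
            pass
    return healthy


# Hypothetical probe results standing in for real network checks.
status = {"app-1": True, "app-2": False, "app-3": True}
pool = healthy_backends(list(status), lambda name: status[name])
print(pool)  # ['app-1', 'app-3']
```

Traffic would then be routed only to the servers in `pool`, and the check would be repeated on an interval so that a recovered server can rejoin.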

Facilitating Scalability in DevOps

Scalability is a critical aspect of any DevOps environment, and load balancing plays a vital role in facilitating it. As traffic increases, load balancers can dynamically distribute workload across additional servers, ensuring that the system can handle the growing demand. This scalability ensures that applications and services remain accessible and perform optimally, even during peak traffic periods.

Additionally, load balancers are commonly paired with autoscaling, which adds or removes servers based on predefined criteria such as CPU utilization or request rates. The load balancer then brings new servers into rotation and stops sending traffic to those being removed. This automation allows DevOps teams to adapt to changing traffic patterns and maintain consistent performance without manual intervention.
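As a rough illustration of a request-rate scaling criterion, the sketch below computes how many servers a pool should have. The function name, the per-server capacity, and the minimum and maximum bounds are assumptions chosen for the example; real autoscaling policies typically add cooldown periods and smoothing on top of a rule like this.

```python
import math


def desired_server_count(
    request_rate: float,
    capacity_per_server: float,
    min_servers: int = 1,
    max_servers: int = 10,
) -> int:
    """Servers needed to serve request_rate, clamped to the allowed range."""
    needed = math.ceil(request_rate / capacity_per_server)
    return max(min_servers, min(max_servers, needed))


# 450 requests/s at an assumed 100 requests/s per server needs 5 servers.
print(desired_server_count(450, 100))  # 5
```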

Types of Load Balancing Techniques

Load balancing techniques come in various forms to suit different requirements and configurations. Here are some commonly used techniques:

Round Robin Load Balancing

Round robin load balancing distributes incoming requests across a group of servers in a circular order. Each new request is directed to the next server in line, so every server receives the same number of requests. This technique is simple to implement and works well for systems with relatively homogeneous servers and requests of similar cost.

Round robin load balancing is like a carousel at a theme park, where each server is like a different horse on the ride. As requests come in, they take turns riding on each server, so no server sits idle while another receives all the traffic. Because the method ignores how busy each server actually is, it balances request counts rather than real load, but it does so without requiring complex algorithms or configurations.
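A minimal sketch of the rotation, assuming a fixed list of servers; the `RoundRobinBalancer` class and the server names are made up for illustration:

```python
from itertools import cycle
from typing import Iterable


class RoundRobinBalancer:
    """Hands out servers in a fixed circular order."""

    def __init__(self, servers: Iterable[str]) -> None:
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def next_server(self) -> str:
        return next(self._rotation)


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
assignments = [balancer.next_server() for _ in range(5)]
print(assignments)  # ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```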

Least Connections Method

The least connections method takes into account the number of active connections to each server when distributing requests. It directs new requests to servers with the fewest active connections at that moment. This approach helps balance the workload more efficiently, as servers with lower connection counts can handle additional requests without becoming overwhelmed.

Imagine the least connections method as a traffic controller directing cars to different lanes based on how many vehicles are already in each lane. By sending new requests to servers with the least active connections, this technique optimizes resource utilization and prevents any single server from being overloaded. It’s like ensuring that each lane on a highway has a similar number of cars, preventing traffic jams and maintaining a smooth flow of vehicles.
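The selection rule can be sketched as follows. This is a simplified, single-threaded illustration with hypothetical names; a production balancer would track connections concurrently and often combine the count with server weights.

```python
from typing import Dict, Iterable


class LeastConnectionsBalancer:
    """Sends each new request to the server with the fewest active connections."""

    def __init__(self, servers: Iterable[str]) -> None:
        self.active: Dict[str, int] = {server: 0 for server in servers}

    def acquire(self) -> str:
        # Ties go to the server listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Called when a connection to the server closes.
        if self.active[server] > 0:
            self.active[server] -= 1


lb = LeastConnectionsBalancer(["app-1", "app-2", "app-3"])
first_three = [lb.acquire() for _ in range(3)]  # one connection on each server
lb.release("app-2")                             # app-2 finishes its request
print(lb.acquire())  # app-2
```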

IP Hash Load Balancing

IP hash load balancing uses a hash function to determine which server to direct a request to based on the client’s IP address. As long as the server pool and the client’s address stay the same, requests from that client go to the same server, providing session stickiness. This can be beneficial for scenarios where maintaining session state is crucial, such as e-commerce platforms or applications that require consistent user sessions.

Picture IP hash load balancing as a post office sorting mail based on postal codes. Just as letters with the same postal code are routed to the same postal worker, requests from a specific client’s IP address are consistently directed to the same server. This stickiness is valuable for applications that rely on continuous user interactions and personalized experiences and that keep session state on individual servers.
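One way to sketch the idea is to hash the address and take the result modulo the number of servers. The `pick_server` function and the choice of SHA-256 are assumptions for this example, not what any specific load balancer uses. Note that with this simple modulo scheme, adding or removing a server remaps many clients.

```python
import hashlib
from typing import Sequence


def pick_server(client_ip: str, servers: Sequence[str]) -> str:
    """Map a client IP to one server deterministically."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer index into the pool.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]
first = pick_server("203.0.113.7", servers)
second = pick_server("203.0.113.7", servers)
print(first == second)  # True: the same client maps to the same server
```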

Load Balancing Tools in DevOps

Various load balancing tools are available in the DevOps landscape, offering different features and capabilities to suit specific needs. Let’s explore some of the commonly used load balancing software:

Overview of Load Balancing Software

Load balancing software, such as Nginx, Apache HTTP Server (through its proxy balancer modules), and HAProxy, provides the necessary functionality to distribute incoming requests across multiple servers. These tools typically offer advanced features like session persistence, health monitoring, and SSL/TLS termination, enabling organizations to build highly available and performant systems.

Additionally, managed services like AWS Elastic Load Balancing (ELB) and Microsoft Azure Load Balancer are cloud-native solutions that integrate closely with their cloud environments. They work with the platform's autoscaling and other cloud services, making them a natural fit for organizations running on cloud infrastructure.

Choosing the Right Load Balancing Tool

When selecting a load balancing tool in a DevOps environment, multiple factors come into play. Considerations include the anticipated traffic load, the desired level of scalability, the need for advanced features like SSL/TLS termination, and compatibility with existing infrastructure. It’s essential to evaluate each tool’s capabilities against the organization’s requirements and goals before committing to one.

Furthermore, some load balancing tools like F5 BIG-IP offer comprehensive security features such as Web Application Firewall (WAF) capabilities, DDoS protection, and bot mitigation. These security-focused tools are crucial for safeguarding applications and infrastructure from cyber threats, ensuring a secure and reliable user experience.

Challenges in Implementing Load Balancing

While load balancing offers significant benefits, there are challenges that organizations may encounter when implementing it in their DevOps environment. Here are a couple of common challenges:

Dealing with Dynamic Content

Handling dynamic content, such as user sessions and real-time updates, can be challenging when load balancing is involved. If consecutive requests from one user can land on different servers, each server must either share session state with the others or coordinate data updates to keep the system consistent. Strategies such as session clustering or storing session data in a shared database can help overcome these challenges.
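The shared-storage approach can be illustrated with a small simulation. Here an in-memory dictionary stands in for the shared database, and the `SharedSessionStore` and `AppServer` classes are hypothetical; in practice the store would be an external service that every server can reach.

```python
from typing import Dict, List


class SharedSessionStore:
    """Stand-in for a shared database that all servers can reach."""

    def __init__(self) -> None:
        self._sessions: Dict[str, dict] = {}

    def session(self, session_id: str) -> dict:
        return self._sessions.setdefault(session_id, {})


class AppServer:
    """A server that keeps no session state of its own."""

    def __init__(self, name: str, store: SharedSessionStore) -> None:
        self.name = name
        self.store = store

    def add_to_cart(self, session_id: str, item: str) -> List[str]:
        cart = self.store.session(session_id).setdefault("cart", [])
        cart.append(item)
        return list(cart)


store = SharedSessionStore()
server_a = AppServer("app-1", store)
server_b = AppServer("app-2", store)

server_a.add_to_cart("session-42", "book")        # first request lands on app-1
cart = server_b.add_to_cart("session-42", "pen")  # next request lands on app-2
print(cart)  # ['book', 'pen']
```

Because neither server holds the cart itself, the load balancer is free to send each request to any server.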

Handling SSL and Sticky Sessions

Proper handling of SSL/TLS connections and session stickiness is crucial in certain applications. Terminating SSL/TLS at the load balancer offloads encryption work from the backend servers, while sticky sessions ensure that requests from the same client are consistently directed to the server that maintains their session state. Configuring load balancers correctly to support these requirements is essential for maintaining application functionality and security.

In conclusion, load balancing is a vital component of DevOps that enhances system performance, availability, and scalability. With various load balancing techniques and tools available, organizations can distribute workload efficiently across multiple servers or resources. However, challenges related to dynamic content, SSL/TLS handling, and sticky sessions must be addressed for an implementation to succeed. Done well, load balancing yields highly available, performant systems that meet the demands of modern IT infrastructure.