Variational Autoencoders (VAEs) are a class of generative machine learning models that have gained significant attention in recent years. They are particularly useful for tasks such as data generation, image processing, and natural language processing. In this article, we will explore the basics of VAEs, their technical aspects, how they work, their applications, and their limitations and challenges.

Understanding the Basics of Variational Autoencoders

Defining Variational Autoencoders

A variational autoencoder is a type of generative model that combines elements of both an autoencoder and variational inference. It is designed to learn a compressed representation of input data, known as the latent space, and generate new data similar to the training examples.

The autoencoder component consists of an encoder network that maps the input data to the latent space and a decoder network that reconstructs the input from its latent representation. The variational aspect comes from what the encoder produces: rather than mapping each input to a single point, it outputs a probability distribution over the latent space, and sampling from that distribution allows the model to generate diverse outputs.

One key advantage of variational autoencoders is that a single trained model supports both inference and generation. It can compress input data into a latent representation, and it can also generate new data points by sampling latent vectors from the learned distribution and decoding them. This dual functionality sets VAEs apart from traditional autoencoders, whose latent spaces are not trained to be sampled from, and makes them powerful tools for tasks such as image generation and data augmentation.

The Importance of VAEs in Machine Learning

VAEs have become widely used tools in machine learning due to their ability to learn meaningful representations of complex data. Unlike traditional autoencoders, VAEs introduce probabilistic modeling, which enables them to generate new data samples by sampling from the learned distribution in the latent space.

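Furthermore, VAEs can learn representations in which individual latent dimensions correspond to underlying factors of variation in the data, such as pose or lighting in images. This disentanglement is not guaranteed by the standard VAE objective, and variants such as β-VAE are often used to encourage it. When it is achieved, specific attributes can be manipulated during data generation or image editing, which makes VAEs valuable in domains such as computer vision and natural language processing.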
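A common way to check whether a latent dimension controls a single attribute is a latent traversal: hold a latent vector fixed, sweep one coordinate across a range of values, and decode each result. The minimal sketch below only builds the traversed latent vectors; the function name `latent_traversal` is hypothetical, and in practice each vector would be passed to a trained decoder.

```python
def latent_traversal(z, dim, values):
    """Return copies of latent vector z with coordinate `dim` replaced by each value.

    Decoding these vectors with a trained decoder shows what that single
    latent dimension controls, if it controls anything interpretable at all.
    """
    traversed = []
    for v in values:
        z_new = list(z)  # copy so the original latent vector is left unchanged
        z_new[dim] = v
        traversed.append(z_new)
    return traversed


# Sweep the second latent coordinate from -2 to 2 while holding the others fixed.
z = [0.3, 0.0, -1.1]
for z_t in latent_traversal(z, dim=1, values=[-2.0, -1.0, 0.0, 1.0, 2.0]):
    print(z_t)
```

If the decoded outputs change in only one recognizable attribute as the coordinate moves, that dimension is a reasonable candidate for a disentangled factor.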

Another significant advantage of VAEs is their ability to interpolate in the latent space. By smoothly traversing the latent space between two data points, VAEs can generate intermediate outputs that blend characteristics of the original data points. This interpolation capability is particularly useful in tasks like image morphing and style transfer, where smooth transitions between different visual styles are desired.
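Latent-space interpolation can be sketched as follows, assuming `z_a` and `z_b` are latent vectors for two inputs (for example, the encoder means). The helper name `interpolate` is hypothetical; each returned vector would be decoded to obtain the intermediate outputs.

```python
def interpolate(z_a, z_b, steps):
    """Linearly interpolate between latent vectors z_a and z_b.

    Returns `steps` vectors, starting exactly at z_a and ending exactly at z_b.
    """
    if steps < 2:
        raise ValueError("steps must be at least 2")
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # t runs from 0.0 to 1.0
        path.append([(1 - t) * a + t * b for a, b in zip(z_a, z_b)])
    return path


print(interpolate([0.0, 1.0], [1.0, -1.0], steps=3))
# → [[0.0, 1.0], [0.5, 0.0], [1.0, -1.0]]
```

Linear interpolation is the simplest choice; for Gaussian latent spaces some practitioners prefer spherical interpolation, which keeps intermediate vectors at a norm more typical of samples from the prior.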

The Technical Aspects of Variational Autoencoders

The Architecture of VAEs

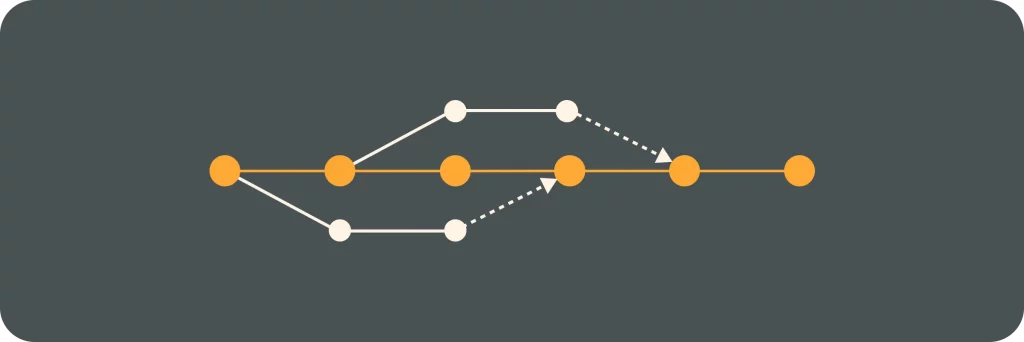

The architecture of a VAE consists of three main components: the encoder network, the decoder network, and a sampling step between them that uses the reparameterization trick.

The encoder network takes the input data and maps it to the parameters of a distribution in the latent space, typically a mean vector and a variance (or log-variance) vector. It usually consists of several neural network layers that progressively reduce the dimensionality of the data down to the chosen latent size.

The decoder network, on the other hand, takes a latent vector and maps it back to the data space, producing a reconstruction of the input. Its structure often mirrors the encoder, reversing the dimensionality reduction.
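To make the encoder and decoder shapes concrete, here is a minimal, untrained toy sketch in plain Python. The class `TinyVAE`, its helpers, the layer sizes, and the log-variance output are all illustrative assumptions; real VAEs are built and trained with a framework such as PyTorch or JAX.

```python
import math
import random


def dense(x, weights, bias):
    """Affine layer: returns W x + b, where `weights` is a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v):
    return [max(0.0, a) for a in v]


def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-a)) for a in v]


def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases (an arbitrary choice for this sketch)."""
    weights = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


class TinyVAE:
    """Hypothetical toy VAE: input -> hidden -> (mu, logvar) and latent -> hidden -> output."""

    def __init__(self, input_dim, hidden_dim, latent_dim, seed=0):
        rng = random.Random(seed)
        # Encoder: one shared hidden layer, then two heads for the mean and log-variance.
        self.enc_hidden = init_layer(input_dim, hidden_dim, rng)
        self.enc_mu = init_layer(hidden_dim, latent_dim, rng)
        self.enc_logvar = init_layer(hidden_dim, latent_dim, rng)
        # Decoder: mirrors the encoder, expanding back to input_dim.
        self.dec_hidden = init_layer(latent_dim, hidden_dim, rng)
        self.dec_out = init_layer(hidden_dim, input_dim, rng)

    def encode(self, x):
        """Map an input vector to the mean and log-variance of q(z | x)."""
        h = relu(dense(x, *self.enc_hidden))
        return dense(h, *self.enc_mu), dense(h, *self.enc_logvar)

    def decode(self, z):
        """Map a latent vector to an output in (0, 1) via a sigmoid output layer."""
        h = relu(dense(z, *self.dec_hidden))
        return sigmoid(dense(h, *self.dec_out))


vae = TinyVAE(input_dim=8, hidden_dim=4, latent_dim=2)
mu, logvar = vae.encode([0.5] * 8)
x_hat = vae.decode(mu)
print(len(mu), len(logvar), len(x_hat))  # 2 2 8
```

ReLU is used in the hidden layers and a sigmoid on the decoder output, which suits data scaled to [0, 1]; other activation choices are equally valid.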

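The reparameterization trick is a crucial part of VAEs because it lets the model stay stochastic while remaining trainable with backpropagation. Sampling a latent vector directly from the encoder's distribution would block gradients from flowing back into the encoder, so the sample is instead written as z = μ + σ ⊙ ε, where ε is drawn from a standard normal distribution. The randomness is isolated in ε, while the mean μ and standard deviation σ remain differentiable outputs of the encoder.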
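The arithmetic of the reparameterization step can be sketched in plain Python. This assumes the encoder outputs a log-variance (a common convention that keeps σ positive); the function name `reparameterize` is hypothetical. In an autodiff framework the same expression lets gradients reach μ and σ, because ε is drawn independently of them.

```python
import math
import random


def reparameterize(mu, logvar, rng=random):
    """Sample z ~ N(mu, diag(exp(logvar))) as z = mu + sigma * eps, with eps ~ N(0, I)."""
    eps = [rng.gauss(0.0, 1.0) for _ in mu]            # all randomness lives here
    sigma = [math.exp(0.5 * lv) for lv in logvar]      # std = exp(logvar / 2)
    return [m + s * e for m, s, e in zip(mu, sigma, eps)]


rng = random.Random(0)
z = reparameterize([0.0, 1.0], [0.0, -2.0], rng)
print(z)
```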

When designing the architecture of a VAE, there are several considerations to keep in mind. One important aspect is the choice of activation functions for the encoder and decoder networks. Common choices include the rectified linear unit (ReLU) for hidden layers and the sigmoid function for the decoder's output layer when the data lies in [0, 1], such as normalized pixel intensities. These non-linear activations allow the model to capture complex patterns in the data.

Another consideration is the number of layers and the size of each layer in the encoder and decoder networks. Adding more layers can potentially increase the model’s capacity to learn intricate representations, but it also increases the risk of overfitting. Finding the right balance between model complexity and generalization is crucial for achieving good performance.

The Mathematical Principles Behind VAEs

To understand the mathematical principles behind VAEs, we need to delve into the concept of variational inference and the need for a latent space.

Variational inference involves approximating a complex probability distribution with a simpler one. In the case of VAEs, the encoder network learns to approximate the true posterior distribution over the latent space given the input data.
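In the notation commonly used in the VAE literature (assumed here rather than defined elsewhere in this article), the encoder is q_φ(z | x), the decoder is p_θ(x | z), and the prior is p(z). Training maximizes the evidence lower bound (ELBO) on the log-likelihood of each data point:

```latex
\log p_\theta(x) \;\ge\; \mathcal{L}(\theta, \phi; x)
  = \mathbb{E}_{q_\phi(z \mid x)}\!\left[\log p_\theta(x \mid z)\right]
  - D_{\mathrm{KL}}\!\left(q_\phi(z \mid x) \,\|\, p(z)\right)
```

The first term rewards accurate reconstruction, and the second keeps the approximate posterior close to the prior. The gap between the two sides equals D_KL(q_φ(z | x) ‖ p_θ(z | x)), so maximizing the ELBO also pushes the encoder's distribution toward the true posterior. In practice, implementations minimize the negative ELBO as a loss.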

For each input, the encoder's distribution over the latent space is typically a multivariate Gaussian with a diagonal covariance, whose mean and standard deviation are output by the encoder network. During training, a latent vector is sampled from this distribution using the reparameterization trick and passed to the decoder, which reconstructs the input from it.

One of the challenges in training VAEs is the trade-off between reconstruction accuracy and the regularity of the latent space. If the regularization pulling each input's distribution toward the prior is too strong, the latent codes carry little information about the input, and reconstructions become blurry or generic. If it is too weak, the model reconstructs its training data well but leaves gaps in the latent space, so latent vectors sampled from the prior may decode to implausible outputs. Balancing these two effects is crucial for achieving high-quality results.

A core part of the VAE loss function is a Kullback-Leibler (KL) divergence term, alongside the reconstruction term. It measures how far each input's latent distribution is from a prior distribution, usually a standard Gaussian, and penalizing it keeps the latent space smooth and suitable for sampling. Weighting this term more heavily, as in the β-VAE variant, can encourage more meaningful and disentangled representations of the input data, typically at some cost in reconstruction quality.
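For a diagonal Gaussian encoder and a standard Gaussian prior, the KL term has a closed form, so it needs no sampling. The sketch below assumes the encoder outputs a log-variance; the function names are hypothetical, and `beta` is the β-VAE weighting (β = 1 gives the standard objective).

```python
import math


def kl_to_standard_normal(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over latent dimensions.

    Closed form for diagonal Gaussians:
        -0.5 * sum(1 + logvar - mu**2 - exp(logvar))
    """
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


def vae_loss(reconstruction_loss, mu, logvar, beta=1.0):
    """Negative ELBO for one example: reconstruction term plus beta-weighted KL term.

    beta = 1.0 is the standard VAE objective; beta > 1.0 trades reconstruction
    quality for a more regular (and possibly more disentangled) latent space.
    """
    return reconstruction_loss + beta * kl_to_standard_normal(mu, logvar)


print(kl_to_standard_normal([1.0], [0.0]))  # 0.5
print(vae_loss(2.0, [1.0], [0.0], beta=4.0))  # 4.0
```

The KL term is zero exactly when the encoder outputs μ = 0 and log-variance 0 (the prior itself) and grows as the encoder's distribution moves away from it.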

The Functionality of Variational Autoencoders

How VAEs Work

The functionality of Variational Autoencoders (VAEs) can be summarized as two main steps: encoding and decoding.

During the encoding step, the input data is passed through the encoder network, which maps it to a lower-dimensional representation in the latent space. This representation aims to capture the essential features of the input data while discarding redundant detail, which makes the data easier to store and manipulate in compressed form.

For each input, the encoder network also outputs the mean and variance of a multivariate Gaussian distribution over the latent space. Training pulls these per-input distributions toward a shared prior, so that latent vectors sampled from the prior during generation tend to decode to plausible data points.

During the decoding step, the decoder network takes a latent vector and maps it back to the data space, producing an output in the same format as the input. Training minimizes the reconstruction loss, so that these reconstructions stay close to the original inputs.
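Which reconstruction loss to use depends on the data and the likelihood assumed for the decoder. The sketch below shows two common choices under stated assumptions: binary cross-entropy for data scaled to [0, 1] (a Bernoulli decoder with sigmoid outputs) and squared error for real-valued data (equivalent, up to constants, to a Gaussian decoder with fixed variance). The function names are hypothetical.

```python
import math


def bce_reconstruction_loss(x, x_hat, eps=1e-7):
    """Binary cross-entropy summed over features, for targets x in [0, 1].

    x_hat is the decoder output (e.g. after a sigmoid); it is clipped to
    [eps, 1 - eps] so the logarithms stay finite.
    """
    total = 0.0
    for xi, pi in zip(x, x_hat):
        pi = min(max(pi, eps), 1.0 - eps)
        total -= xi * math.log(pi) + (1.0 - xi) * math.log(1.0 - pi)
    return total


def squared_error_loss(x, x_hat):
    """Sum of squared errors, a common choice for real-valued data."""
    return sum((xi - pi) ** 2 for xi, pi in zip(x, x_hat))


x = [1.0, 0.0, 1.0]
print(bce_reconstruction_loss(x, [0.9, 0.2, 0.7]))
print(squared_error_loss(x, [0.9, 0.2, 0.7]))
```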

The Role of VAEs in Data Generation

One of the most significant advantages of VAEs is their ability to generate new data samples. This works by sampling latent vectors from the prior distribution, typically a standard Gaussian, and passing them through the decoder network. Because training keeps the encoder's distributions close to that prior, VAEs can produce novel data points that exhibit characteristics similar to the training data.
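Generation can be sketched as "sample from the prior, then decode". This assumes a standard Gaussian prior; `generate` and `toy_decode` are hypothetical names, and `toy_decode` is only a placeholder standing in for a trained decoder network.

```python
import math
import random


def generate(decode, latent_dim, n_samples, rng=random):
    """Draw n_samples latent vectors from the N(0, I) prior and decode each one.

    `decode` is any callable mapping a latent vector (list of floats) to an output;
    in a real VAE it would be the trained decoder network.
    """
    samples = []
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
        samples.append(decode(z))
    return samples


def toy_decode(z):
    """Placeholder decoder for illustration only: squashes each coordinate into (0, 1)."""
    return [1.0 / (1.0 + math.exp(-zi)) for zi in z]


for sample in generate(toy_decode, latent_dim=3, n_samples=2, rng=random.Random(0)):
    print(sample)
```

Note that only the decoder is needed at generation time; the encoder is used during training and when mapping existing data into the latent space.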

VAEs have been applied in various domains, such as generating images, text, and even music compositions. Their ability to produce synthetic data that resembles real-world datasets has opened up possibilities for data augmentation, anomaly detection, and creative applications across diverse industries.

The Applications of Variational Autoencoders

VAEs in Image Processing

In image processing, VAEs have proven to be highly effective in tasks such as image denoising, image inpainting, and image super-resolution.

By learning the underlying structure of the input data, VAEs can remove noise from images, fill in missing parts, and enhance the resolution of low-quality images. This makes them valuable tools in various industries, including healthcare, where images often suffer from noise or low resolution.

VAEs in Natural Language Processing

VAEs are also used in natural language processing tasks, such as text generation and text classification.

They can be trained on a large corpus of text data and then used to generate coherent and contextually relevant text sequences. This has potential applications in areas such as virtual assistants, chatbots, and language translation systems.

The Limitations and Challenges of Variational Autoencoders

Understanding the Limitations of VAEs

While VAEs offer many advantages, they also have limitations that need to be considered. One limitation is the trade-off between reconstruction quality and the quality of generated samples: as the model strives to reconstruct the input data accurately, it may sacrifice the diversity of its generated outputs. Samples from VAEs, particularly images, also tend to be blurrier than those produced by models such as generative adversarial networks (GANs).

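Another limitation is the difficulty of training VAEs on complex datasets. Training can be slow and requires careful tuning of hyperparameters, such as the weight of the KL term, to converge to a useful model. With very expressive decoders, VAEs can also suffer from posterior collapse, where the decoder learns to ignore the latent code and the encoder's distribution collapses to the prior.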

Overcoming the Challenges in Implementing VAEs

To overcome the challenges of VAEs, researchers and practitioners have proposed various techniques. These include combining VAEs with adversarial training, as in VAE-GAN hybrids, to improve the sharpness and diversity of generated samples while maintaining reconstruction quality.

Furthermore, advancements in hardware, such as the availability of specialized graphics processing units (GPUs), have significantly reduced the training time of VAEs on large and complex datasets.

Conclusion

Variational Autoencoders (VAEs) have emerged as powerful tools in machine learning, capable of learning meaningful representations of complex data and generating new samples. They have found applications in diverse domains such as image processing and natural language processing. While they have limitations and challenges, ongoing research continues to push the boundaries of VAEs, making them an important component in the development of intelligent systems.