Deploying AI Models Securely with LLMOps

The rapid advancement of artificial intelligence (AI) technologies is transforming industries worldwide. As organizations move AI models from experimentation into production, deploying them securely becomes essential. This article covers the core concepts behind AI models and introduces LLMOps, a set of practices and tools that supports the secure and efficient deployment of these models.

Understanding the Basics of AI Models

To grasp the significance of LLMOps, we must first understand the core elements of AI models. These models are mathematical constructs designed to predict outcomes based on input data. They learn from historical data, which allows them to make informed decisions or predictions in real-time applications. The training process involves feeding the model vast amounts of data, enabling it to recognize patterns and correlations that might not be immediately evident to human analysts. This capability is what makes AI models so powerful in various domains.

Defining AI Models

AI models are commonly grouped by how they learn: supervised learning, unsupervised learning, and reinforcement learning. Each category employs different methodologies and has unique applications. Supervised learning trains models on labeled datasets, while unsupervised learning finds patterns in unlabeled data. Reinforcement learning trains models through a system of rewards and penalties, which is especially useful for developing autonomous systems such as self-driving cars. Deep learning cuts across these categories: it is a subset of machine learning that uses neural networks with many layers to analyze complex data structures, making it particularly effective for tasks like image and speech recognition.

Importance of AI Models in Today’s World

The implementation of AI models offers considerable advantages, including enhanced efficiency, data-driven decision-making, and predictive analytics. Industries such as healthcare, finance, and marketing use AI models to gain insights, automate processes, and deliver personalized experiences. For example, in healthcare, AI models can analyze patient data to predict potential health risks, enabling proactive interventions. In finance, algorithms assess credit risk and detect fraudulent transactions in real time, safeguarding both institutions and consumers. Marketing teams use AI to analyze consumer behavior and tailor campaigns to individual preferences, which can increase engagement and conversion rates. The versatility of AI models continues to expand, driving innovation across sectors and reshaping how businesses operate.

Introduction to LLMOps

With the increasing complexity of AI models, managing their lifecycle from development to deployment has become a challenge. This is where LLMOps (Large Language Model Operations) comes into play. It encompasses a set of practices and tools designed to streamline the deployment, monitoring, and management of language models, ensuring that they are operated effectively and securely.

What is LLMOps?

LLMOps is a structured approach to managing AI models, particularly large language models. It focuses on enhancing collaboration between data scientists, engineers, and other stakeholders involved in the AI deployment process. By providing a clear framework, LLMOps helps organizations get more value from their AI models while reducing the risks associated with deployment. It also supports continuous improvement: feedback loops built on real-world performance data and user interactions are used to refine models over time.

Key Features of LLMOps

LLMOps incorporates several key features that facilitate secure and efficient deployment:

- Version Control: LLMOps allows teams to manage different versions of their models, ensuring that updates are traceable and reversible.

- Monitoring and Logging: Comprehensive monitoring capabilities enable organizations to track model performance and detect anomalies promptly.

- Collaboration Tools: Enhanced communication channels among stakeholders promote transparency and team synchronization.

- Compliance Management: Dedicated tooling helps teams comply with industry regulations and security policies while deploying AI solutions.
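To make the version-control idea concrete, here is a minimal in-memory sketch of a model registry in Python. It is not the API of any particular LLMOps product: `ModelRegistry`, `promote`, and `rollback` are hypothetical names, and a real registry would persist its records and keep model artifacts in external storage. It illustrates the two properties listed above: every change is recorded (traceable), and the previously live version can be restored (reversible).

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class ModelVersion:
    version: int
    artifact_uri: str  # hypothetical storage location of the model artifact
    notes: str
    created_at: str


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry. Versions are never deleted, every
    promotion and rollback is recorded, and the previous live version can
    be restored."""
    versions: dict = field(default_factory=dict)
    _live_stack: list = field(default_factory=list)  # lineage of live versions
    events: list = field(default_factory=list)       # (action, from, to) tuples

    def register(self, artifact_uri: str, notes: str = "") -> ModelVersion:
        """Store a new immutable version with the next sequential number."""
        mv = ModelVersion(
            version=len(self.versions) + 1,
            artifact_uri=artifact_uri,
            notes=notes,
            created_at=datetime.now(timezone.utc).isoformat(),
        )
        self.versions[mv.version] = mv
        return mv

    @property
    def live(self) -> Optional[ModelVersion]:
        """The version currently serving traffic, if any."""
        return self.versions[self._live_stack[-1]] if self._live_stack else None

    def promote(self, version: int) -> None:
        """Make a registered version live and record the change."""
        if version not in self.versions:
            raise KeyError(f"unknown model version {version}")
        previous = self.live.version if self.live else None
        self._live_stack.append(version)
        self.events.append(("promote", previous, version))

    def rollback(self) -> ModelVersion:
        """Restore the previously live version and record the rollback."""
        if len(self._live_stack) < 2:
            raise RuntimeError("no earlier live version to roll back to")
        bad = self._live_stack.pop()
        self.events.append(("rollback", bad, self._live_stack[-1]))
        return self.live
```

Because `events` records rollbacks as well as promotions, the history stays complete even after a version is withdrawn.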

In addition to these features, LLMOps emphasizes the importance of automated testing and validation processes. By integrating automated testing into the deployment pipeline, teams can ensure that any new model versions meet predefined performance criteria before they go live. This proactive approach not only reduces the likelihood of errors but also accelerates the deployment process, allowing organizations to respond to market changes more swiftly. Furthermore, LLMOps encourages the use of feedback mechanisms to gather insights from end-users, which can be invaluable for ongoing model refinement and enhancement.
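A pre-release gate can be as simple as comparing a candidate's evaluation metrics against predefined thresholds and refusing to ship if any of them fail. The sketch below assumes the pipeline already produces a dictionary of metrics; the metric names, the thresholds, and the exact-match `accuracy` helper are illustrative assumptions rather than part of any specific LLMOps tool.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Tuple


@dataclass(frozen=True)
class Criterion:
    metric: str
    threshold: float
    higher_is_better: bool = True


def accuracy(predict: Callable, examples: Iterable[Tuple[object, object]]) -> float:
    """Fraction of labeled (input, expected) pairs the model gets exactly right."""
    examples = list(examples)
    if not examples:
        raise ValueError("need at least one example")
    return sum(predict(x) == y for x, y in examples) / len(examples)


def check_release(metrics: dict, criteria: list) -> list:
    """Compare a candidate's metrics with predefined criteria.
    Returns human-readable failures; an empty list means the gate passes."""
    failures = []
    for c in criteria:
        value = metrics.get(c.metric)
        if value is None:
            failures.append(f"{c.metric}: missing")
        elif c.higher_is_better and value < c.threshold:
            failures.append(f"{c.metric}: {value} < {c.threshold}")
        elif not c.higher_is_better and value > c.threshold:
            failures.append(f"{c.metric}: {value} > {c.threshold}")
    return failures
```

In a CI pipeline, a non-empty result from `check_release` would fail the build. Treating a missing metric as a failure is a deliberate choice: a candidate that was never evaluated should not pass by default.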

Another critical aspect of LLMOps is its focus on scalability. As organizations grow and their AI needs evolve, LLMOps provides the tools and frameworks to scale model deployment efficiently. This includes managing multiple models across various environments so that each model behaves consistently regardless of the underlying infrastructure. By leveraging cloud-based solutions and containerization technologies, LLMOps lets teams deploy models flexibly and adapt to changing demand without compromising performance or security.

The Intersection of AI and LLMOps

The relationship between AI and LLMOps is symbiotic. While AI models provide the intelligence necessary for automation and insights, LLMOps offers the infrastructure and governance to deploy these models securely.

Why Use LLMOps for AI Models?

As organizations scale their AI initiatives, the deployment process can become convoluted. Here’s why adopting LLMOps can be beneficial:

- Efficiency in Deployment: LLMOps frameworks enable rapid deployment of models without sacrificing quality.

- Increased Security: By integrating security protocols into the deployment pipelines, LLMOps minimizes vulnerabilities throughout the model’s lifecycle.

- Operational Consistency: Standardized practices ensure that deployments follow consistent guidelines, reducing the chances of errors.

The Role of LLMOps in AI Model Deployment

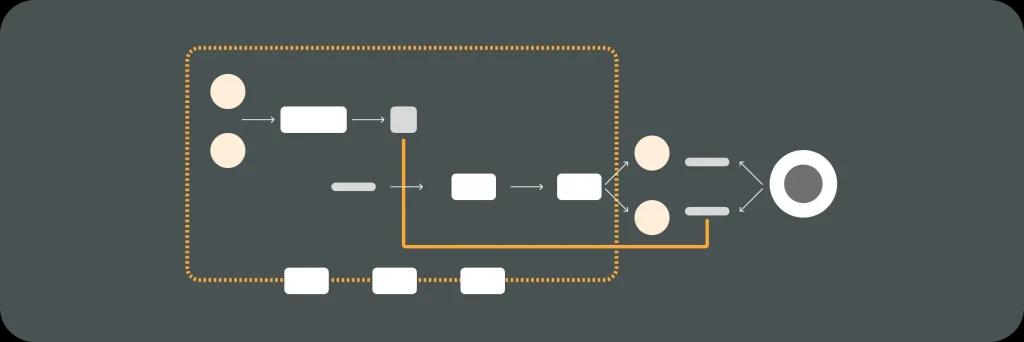

LLMOps serves as a bridge connecting the model training phase with the deployment phase. It provides a standardized approach that encompasses testing, validation, and continuous monitoring. This ensures that organizations can not only deploy models but also validate their functionality and monitor their operational health post-deployment.
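Continuous post-deployment monitoring often comes down to tracking a few health signals over a sliding window of recent requests. This sketch assumes the serving code can report each request's latency and whether it succeeded; `HealthMonitor` is a hypothetical name, and the window size and limits are placeholder values, not recommendations.

```python
from collections import deque


class HealthMonitor:
    """Tracks the most recent requests to a deployed model and reports
    whether error rate and average latency stay within limits.
    The default thresholds are hypothetical placeholders."""

    def __init__(self, window: int = 100, max_error_rate: float = 0.05,
                 max_avg_latency_ms: float = 800.0):
        self.samples = deque(maxlen=window)  # (latency_ms, succeeded) pairs
        self.max_error_rate = max_error_rate
        self.max_avg_latency_ms = max_avg_latency_ms

    def record(self, latency_ms: float, ok: bool) -> None:
        """Add one request; the oldest sample drops out once the window is full."""
        self.samples.append((latency_ms, ok))

    def error_rate(self) -> float:
        if not self.samples:
            return 0.0
        return sum(1 for _, ok in self.samples if not ok) / len(self.samples)

    def avg_latency_ms(self) -> float:
        if not self.samples:
            return 0.0
        return sum(lat for lat, _ in self.samples) / len(self.samples)

    def is_healthy(self) -> bool:
        return (self.error_rate() <= self.max_error_rate
                and self.avg_latency_ms() <= self.max_avg_latency_ms)
```

Using a fixed-size window means the monitor reflects current behavior rather than the whole lifetime of the deployment, so a regression shows up quickly.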

Moreover, LLMOps facilitates collaboration across teams, allowing data scientists, engineers, and stakeholders to work in tandem. By creating a common language and set of practices, LLMOps fosters an environment where feedback loops are shorter and improvements can be implemented quickly. This collaboration is essential at a time when AI models must adapt to rapidly changing data and business requirements, helping organizations remain competitive and responsive to market dynamics.

In addition to enhancing collaboration, LLMOps also plays a crucial role in compliance and ethical considerations surrounding AI deployment. With increasing scrutiny on data privacy and algorithmic bias, LLMOps frameworks often incorporate guidelines that help organizations navigate these challenges. By embedding ethical considerations into the deployment process, LLMOps not only safeguards the integrity of the AI models but also builds trust with users and stakeholders, which is vital for long-term success in AI initiatives.
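One concrete way to build privacy into the deployment process is to redact personal data from prompts and responses before they are written to logs. The regular expressions below are a deliberately small illustration covering only email addresses and phone-like numbers; they will miss many kinds of personal data and can also match non-personal values such as long numeric IDs or dates.

```python
import re

# Illustrative patterns only: real PII detection needs much broader coverage
# (names, addresses, account numbers) and usually a dedicated tool.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL] before the text is logged."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

Redacting at the logging boundary keeps the model's own input untouched while ensuring that stored audit and debugging data carries less sensitive information.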

Ensuring Security in AI Deployment with LLMOps

Security is paramount in AI deployments, especially given the sensitive nature of data that AI models often handle. LLMOps plays a crucial role in enhancing this security, protecting both the models and the data they utilize.

Importance of Secure AI Deployment

Unsecured AI deployments can lead to significant risks, including data breaches and misuse of AI functionalities. Ensuring security measures are in place protects sensitive data and maintains the integrity of AI applications. The repercussions of a security lapse can be far-reaching, affecting not only the organization’s reputation but also the trust of users who rely on these AI systems for critical services. As AI continues to evolve and integrate into various sectors, the need for comprehensive security protocols becomes even more pressing, necessitating a proactive approach to safeguard against emerging threats.

How LLMOps Enhances Security in AI Deployment

LLMOps enhances security in several ways:

- Data Encryption: Sensitive data used by AI models is encrypted at rest and in transit to prevent unauthorized access.

- Access Controls: Robust access controls ensure that only authorized personnel can interact with AI systems.

- Audit Trails: Continuous logging of model interactions allows organizations to track usage and identify potential security issues.
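Access controls and audit trails fit together naturally: every authorization decision, allowed or denied, becomes a log entry. This sketch assumes a simple role-based model with a hypothetical `ROLE_PERMISSIONS` table, and it chains each log entry to the previous one with a SHA-256 hash so that altering an earlier record is detectable. The chain is tamper-evident, not tamper-proof; a production system would also ship entries to write-once storage.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical role-to-permission table; real systems would load this from an identity service.
ROLE_PERMISSIONS = {
    "viewer": {"query"},
    "engineer": {"query", "deploy"},
    "admin": {"query", "deploy", "rollback"},
}

GENESIS_HASH = "0" * 64  # stand-in "previous hash" for the first entry


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of an entry (without its own hash)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log in which each entry embeds the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def append(self, event: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {"ts": datetime.now(timezone.utc).isoformat(), "prev": prev, **event}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute the chain; an edited entry, or a deleted or reordered
        entry anywhere before the newest one, breaks it."""
        prev = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body.get("prev") != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


def authorize(user: str, role: str, action: str, log: AuditLog) -> bool:
    """Check the role's permissions and record the decision either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.append({"user": user, "role": role, "action": action, "allowed": allowed})
    return allowed
```

Logging denied requests, not just successful ones, is what makes the trail useful for spotting probing or misuse.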

In addition to these measures, LLMOps also incorporates advanced threat detection mechanisms that leverage machine learning to identify unusual patterns of behavior within AI systems. By analyzing interactions in real time, these systems can flag suspicious activity for immediate investigation and response. Regular security assessments and updates are also essential components of LLMOps, keeping deployed models resilient against new vulnerabilities and ensuring that potential weaknesses are addressed promptly. This proactive stance strengthens the AI infrastructure and gives stakeholders confidence that their data is handled securely.
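The paragraph above describes machine-learning-based detection; as a much simpler stand-in, the sketch below flags clients whose request volume in a time window is far above everyone else's, using the median-based modified z-score. Substituting a statistical rule for an ML model is a deliberate simplification, `flag_anomalies` is a hypothetical name, and the threshold of 3.5 is a commonly used default rather than a tuned value.

```python
import statistics


def flag_anomalies(request_counts: dict, threshold: float = 3.5) -> list:
    """Return clients whose request count in the current window is unusually
    high, scored as 0.6745 * (count - median) / MAD. This is a simple
    statistical baseline, not a machine-learning detector."""
    values = list(request_counts.values())
    if len(values) < 3:
        return []  # too little data to define "normal"
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    # Floor of 1.0 (assumes integer counts) avoids dividing by zero when most clients are identical.
    mad = max(mad, 1.0)
    return [client for client, count in request_counts.items()
            if 0.6745 * (count - med) / mad > threshold]
```

The median and median absolute deviation are used instead of the mean and standard deviation because a single very noisy client would otherwise inflate the baseline and hide itself.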

Steps to Deploy AI Models Securely with LLMOps

Deploying AI models securely involves a series of strategic steps to ensure their integrity and performance.

Preparing Your AI Model for Deployment

Before deploying an AI model, it is crucial to conduct thorough testing to evaluate its accuracy and performance. This phase should include unit testing, integration testing, and performance benchmarking. Additionally, consider documenting the model’s behavior and potential limitations to ease future troubleshooting.
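For the performance-benchmarking step, a small harness that reports latency percentiles is often more informative than an average, since tail latency is what users notice. In this sketch `predict` is a placeholder for whatever inference call the model exposes, and the warm-up count is an assumption.

```python
import math
import time
from typing import Callable, Sequence


def percentile(sorted_values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of an already sorted, non-empty sequence."""
    if not sorted_values:
        raise ValueError("no values")
    rank = math.ceil(pct * len(sorted_values) / 100)
    return sorted_values[max(rank, 1) - 1]


def benchmark(predict: Callable, inputs: Sequence, warmup: int = 3) -> dict:
    """Time `predict` on each input and summarize latency in milliseconds."""
    for x in inputs[:warmup]:  # warm caches and lazy initialization before timing
        predict(x)
    latencies = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)
        latencies.append((time.perf_counter() - start) * 1000)
    latencies.sort()
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "max_ms": latencies[-1],
    }
```

The resulting numbers can be recorded alongside the model's documentation so that later versions are benchmarked against the same baseline.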

Deploying Your AI Model Using LLMOps

After preparation, the actual deployment can begin. Utilizing an LLMOps framework can significantly enhance this process by:

- Automating deployment workflows, reducing manual errors.

- Integrating monitoring tools to track the model’s performance and gather insights.

- Implementing rollback procedures in case of deployment failures, enabling quick recovery.
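Automated deployment and rollback can be combined in one routine: switch traffic to the new version, poll a health check, and restore the previous version if the checks keep failing. The `switch_traffic` and `health_check` hooks are hypothetical; in practice they would call the serving platform or load balancer, and the retry count and delay are assumptions.

```python
import time
from typing import Callable


def deploy_with_rollback(current: str, candidate: str,
                         switch_traffic: Callable[[str], None],
                         health_check: Callable[[str], bool],
                         attempts: int = 3, delay_s: float = 2.0) -> str:
    """Send traffic to `candidate`, then poll its health. If every check
    fails, route traffic back to `current`. Returns the version left live."""
    switch_traffic(candidate)
    for attempt in range(attempts):
        if health_check(candidate):
            return candidate
        if attempt < attempts - 1:
            time.sleep(delay_s)  # give a slow-starting model time to become ready
    switch_traffic(current)  # automatic rollback to the last known-good version
    return current
```

Keeping the previous version deployed until the candidate proves healthy is what makes this rollback fast: restoring service is a traffic switch, not a redeploy.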

By following these steps and leveraging LLMOps, organizations can ensure that their AI models are deployed securely, efficiently, and effectively, paving the way for successful AI integration.
