Kubernetes has become the go-to solution for managing containerized applications at scale. By automating the deployment, scaling, and management of applications, it gives developers a powerful platform. Running on Kubernetes does not make an application efficient by itself, however: how you configure your deployment largely determines how well your applications perform and how much they cost to run. In this article, we will explore the key steps to optimize your Kubernetes deployment and achieve peak performance.

Understanding Kubernetes Deployment

Deploying on Kubernetes means running and managing containerized applications on a cluster of machines. To optimize your deployment effectively, you first need a clear understanding of Kubernetes and its basic concepts.

The Basics of Kubernetes

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of applications. It uses a declarative configuration to define how the applications should run and provides a set of powerful tools to manage and monitor these applications.
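To make declarative configuration concrete, here is a minimal sketch that builds a Deployment manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The manifest states the desired outcome (three replicas of one container) and Kubernetes works to make the cluster match it. The app name `web`, the `nginx:1.25` image, and the CPU and memory values are illustrative assumptions, not recommendations.

```python
import json


def make_deployment(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Desired state: Kubernetes keeps this many pod replicas running.
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Illustrative values; size these from real measurements.
                            "resources": {
                                "requests": {"cpu": "250m", "memory": "128Mi"},
                                "limits": {"cpu": "500m", "memory": "256Mi"},
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Save the output to web.json and apply it with: kubectl apply -f web.json
    print(json.dumps(make_deployment("web", "nginx:1.25", replicas=3), indent=2))
```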

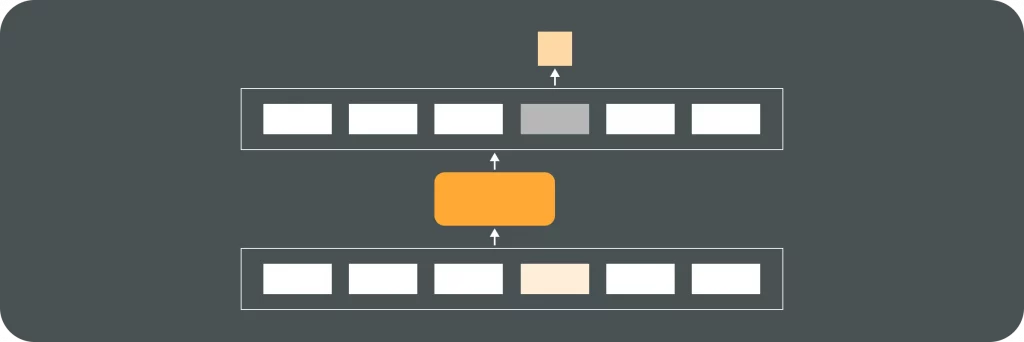

At the core of every Kubernetes deployment is the cluster. A cluster consists of multiple machines, called nodes, that work together to run and manage containerized applications. Control plane nodes run the components that manage the cluster, while worker nodes run node-level components (the kubelet, a container runtime, and usually kube-proxy) that let them run application workloads.

Key Components of Kubernetes Deployment

Several key components of a Kubernetes cluster play a vital role in the performance of your applications.

Firstly, the control plane is responsible for managing the overall state of the cluster. It includes components like the API server, etcd, scheduler, and controller manager. Proper configuration and optimization of these components are crucial for achieving optimal performance.

Secondly, worker nodes are responsible for running the containerized applications. These nodes have the necessary resources, such as CPU and memory, to execute your applications. Ensuring that each node has the appropriate resources and is properly configured can greatly enhance the performance of your deployment.

Now, let’s dive deeper into the control plane and its components. The API server acts as the gateway for all administrative operations and external communication with the cluster. It exposes the Kubernetes API, which allows users to interact with the cluster and manage resources.

etcd is a distributed key-value store that serves as the cluster's database. It stores the configuration data and current state of the cluster, keeping them consistent and reliable. The scheduler assigns newly created pods to worker nodes based on resource availability and constraints such as node selectors, affinity rules, and taints.
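The scheduler's decision can be pictured as two phases: filter out nodes that cannot fit a pod's resource requests, then score the remaining nodes and pick the best one. The sketch below is a heavily simplified, hypothetical model of that idea: it considers only CPU and memory requests and scores nodes by how much capacity would remain free, loosely in the spirit of the scheduler's "least allocated" scoring strategy. The real kube-scheduler runs many more plugins (affinity, taints, topology spread, and others), and all names here are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity_m: int    # allocatable CPU in millicores
    mem_capacity_mi: int   # allocatable memory in MiB
    cpu_requested_m: int   # CPU already requested by pods on this node
    mem_requested_mi: int  # memory already requested by pods on this node


@dataclass
class Pod:
    cpu_m: int   # CPU request in millicores
    mem_mi: int  # memory request in MiB


def fits(pod: Pod, node: Node) -> bool:
    """Filter phase: the node must have enough unrequested CPU and memory."""
    return (node.cpu_capacity_m - node.cpu_requested_m >= pod.cpu_m
            and node.mem_capacity_mi - node.mem_requested_mi >= pod.mem_mi)


def least_allocated_score(pod: Pod, node: Node) -> float:
    """Score phase: average fraction of capacity left free after placing the pod."""
    cpu_free = (node.cpu_capacity_m - node.cpu_requested_m - pod.cpu_m) / node.cpu_capacity_m
    mem_free = (node.mem_capacity_mi - node.mem_requested_mi - pod.mem_mi) / node.mem_capacity_mi
    return (cpu_free + mem_free) / 2


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Return the chosen node's name, or None if the pod would stay Pending."""
    feasible = [n for n in nodes if fits(pod, n)]
    if not feasible:
        return None
    return max(feasible, key=lambda n: least_allocated_score(pod, n)).name
```

Spreading pods toward emptier nodes, as this scoring does, tends to balance load; a "most allocated" strategy would instead pack pods tightly, which can let an autoscaler remove idle nodes.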

The controller manager runs a collection of controllers that watch the state of the cluster and take corrective action when it drifts from what you declared. These controllers keep the cluster in its desired state, handling tasks such as maintaining the desired number of pod replicas, responding when nodes go down, and binding persistent volumes to claims.
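Controllers share one reconciliation pattern: compare the desired state with the observed state and act on the difference. Here is a minimal sketch of that loop for replica counts, assuming pods are represented by name strings. The helper names `reconcile` and `run_once` and the `pod-N` naming scheme are hypothetical, and the real ReplicaSet controller is considerably more careful about which pods it deletes.

```python
import itertools
from typing import List, Tuple

_pod_ids = itertools.count()  # hypothetical source of unique pod names


def reconcile(desired: int, running: List[str]) -> Tuple[int, List[str]]:
    """Compare desired and observed state; return (pods to create, pods to delete)."""
    missing = desired - len(running)
    if missing > 0:
        return missing, []
    # Surplus (or exact match): delete everything beyond the desired count.
    return 0, running[desired:]


def run_once(desired: int, running: List[str]) -> List[str]:
    """Apply one reconciliation pass and return the new observed state."""
    to_create, to_delete = reconcile(desired, running)
    survivors = [p for p in running if p not in to_delete]
    return survivors + [f"pod-{next(_pod_ids)}" for _ in range(to_create)]


if __name__ == "__main__":
    state = ["pod-a"]
    for desired in (3, 3, 1):  # scale up, no-op, scale down
        state = run_once(desired, state)
        print(desired, state)
```

Because the loop only ever acts on the difference between desired and observed state, running it repeatedly is safe: once the two match, a pass does nothing.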

Worker nodes, on the other hand, are the workhorses of the cluster. They run the containerized applications and provide the resources needed to execute them. Each worker node runs a container runtime that implements the Container Runtime Interface (CRI), such as containerd or CRI-O, which manages the lifecycle of containers.

Additionally, each worker node runs a kubelet, an agent that registers the node with the control plane, makes sure the containers of the pods assigned to that node are running and healthy, and reports their status back. kube-proxy, another node component, maintains the network rules that route and load-balance traffic sent to a Service across the pods behind it.

By understanding the intricacies of the control plane and worker nodes, you can fine-tune your Kubernetes deployment to achieve optimal performance and scalability. Properly configuring and optimizing these components will ensure that your applications run smoothly and efficiently on the cluster.

The Importance of Kubernetes Optimization

Optimizing your Kubernetes deployment is essential for several reasons. The most immediate one is efficiency: an optimized deployment makes better use of resources, which improves performance and reduces costs. By right-sizing resource allocations and tuning configurations, you can minimize wasted capacity and avoid overspending on infrastructure.

But what exactly does Kubernetes optimization entail? Let’s delve deeper into the topic and explore the benefits it brings.

Benefits of Optimized Kubernetes Deployment

Optimizing your Kubernetes deployment offers numerous benefits. Firstly, it improves scalability, allowing you to handle larger workloads and accommodate growing demand. By tuning resource allocations and scaling strategies, you can help your applications scale smoothly with minimal service disruption.

Imagine a scenario where your application suddenly experiences a surge in traffic. Without proper optimization, your Kubernetes deployment might struggle to handle the increased load, resulting in slow response times or even service failures. By optimizing your deployment ahead of time, you can prepare for such situations and keep your applications performing well as the workload changes.

Secondly, optimization enhances the reliability and fault tolerance of your deployment. By optimizing the configuration of components like the load balancer and the container runtime, you can minimize the risk of failures and improve the overall resilience of your applications.

Think of your Kubernetes deployment as a well-oiled machine. Each component plays a crucial role in ensuring the smooth operation of your applications. However, if any of these components are not optimized, they can become bottlenecks or single points of failure. By optimizing the configuration of these components, you can strengthen the foundation of your deployment and reduce the chances of unexpected failures.

Potential Issues with Unoptimized Deployments

Unoptimized Kubernetes deployments can lead to various issues that affect the performance of your applications. Problems such as excessive resource consumption, poor scalability, and frequent failures can hinder the smooth operation of your applications and degrade the user experience. By optimizing your deployment, you can address these issues and ensure a reliable and efficient environment for your applications.

Let’s take a closer look at some of these potential issues:

- Excessive resource consumption: Without optimization, your Kubernetes deployment might consume more resources than necessary, leading to increased costs and potential resource shortages. By optimizing resource allocations, you can ensure that your applications use resources efficiently, minimizing waste and maximizing cost-effectiveness.

- Poor scalability: In an unoptimized deployment, scaling your applications to handle increased workloads can be a challenging task. Without proper optimization, you might encounter scalability issues such as slow scaling times or uneven distribution of resources. By optimizing your deployment, you can implement effective scaling strategies that allow your applications to grow seamlessly as demand increases.

- Frequent failures: Unoptimized deployments are more prone to failures, which can disrupt the availability of your applications and negatively impact user experience. By optimizing the configuration of critical components, you can reduce the chances of failures and create a more robust and reliable environment for your applications.

By addressing these potential issues through optimization, you can ensure that your Kubernetes deployment operates at its full potential, providing a stable and high-performing platform for your applications.

Steps to Optimize Your Kubernetes Deployment

Now that we understand the importance of optimizing a Kubernetes deployment, let’s explore the key steps to achieve optimal performance.

Optimizing a Kubernetes deployment involves a series of strategic decisions and meticulous planning to ensure that your applications run smoothly and efficiently. By following best practices and implementing optimization techniques, you can maximize resource utilization and enhance the overall performance of your Kubernetes cluster.

Planning Your Deployment Strategy

Begin by carefully planning your deployment strategy. Define your application requirements, evaluate the resource needs, and determine the optimal configuration for your deployment.

When planning your deployment strategy, consider factors such as scalability, fault tolerance, and performance requirements. By conducting a thorough analysis of your application’s needs, you can design a deployment strategy that is tailored to meet your specific goals and objectives.

Furthermore, take into account considerations such as service discovery, load balancing, and monitoring capabilities to ensure that your deployment strategy is robust and resilient in the face of varying workloads and demands.
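Part of planning is a rough capacity estimate: how many nodes will a given number of replicas need? The sketch below assumes identical pods on identical nodes, uses the node's allocatable CPU (millicores) and memory (MiB) as its capacity, and adds a hypothetical `spare_nodes` margin so the workload can survive losing a node. It ignores DaemonSets and other system pods, so treat the result as a lower bound rather than a sizing guarantee.

```python
import math


def nodes_needed(replicas: int, pod_cpu_m: int, pod_mem_mi: int,
                 node_cpu_m: int, node_mem_mi: int, spare_nodes: int = 1) -> int:
    """Estimate nodes for identical pods on identical nodes, plus spare capacity."""
    # Pods per node is limited by whichever resource runs out first.
    pods_per_node = min(node_cpu_m // pod_cpu_m, node_mem_mi // pod_mem_mi)
    if pods_per_node == 0:
        raise ValueError("a single pod does not fit on one node")
    # Round up: a partially filled node is still a whole node.
    return math.ceil(replicas / pods_per_node) + spare_nodes


if __name__ == "__main__":
    print(nodes_needed(10, 500, 1024, 3800, 14000))
```

For example, 10 replicas requesting 500m CPU and 1024Mi memory on nodes with 3800m CPU and 14000Mi allocatable fit 7 pods per node (CPU runs out first), so the estimate is two nodes plus one spare, or 3.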

Implementing Best Practices for Kubernetes Optimization

Next, implement best practices for Kubernetes optimization. These include setting appropriate CPU and memory requests and limits for each container, keeping container images small, and using resource quotas to cap what each namespace can consume. Applying these practices consistently helps your applications run efficiently and predictably.
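Requests, limits, and quotas are written as Kubernetes quantities such as `250m` CPU (a quarter of a core) or `128Mi` memory. The sketch below parses a subset of that notation and checks whether a new pod's requests would keep a namespace within the `requests.cpu` and `requests.memory` values of a ResourceQuota. It is a simplified model for illustration, not the API server's admission logic: the function names are hypothetical, only some suffixes are supported, and a missing request is treated as zero.

```python
from decimal import Decimal
from typing import Dict

# Subset of Kubernetes quantity suffixes: binary (powers of 2) and decimal (powers of 10).
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
_DECIMAL = {"m": Decimal("0.001"), "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def parse_quantity(q: str) -> Decimal:
    """Convert e.g. '250m' -> 0.25 (cores) or '128Mi' -> 134217728 (bytes)."""
    for table in (_BINARY, _DECIMAL):  # check two-letter binary suffixes first
        for suffix, factor in table.items():
            if q.endswith(suffix):
                return Decimal(q[: -len(suffix)]) * factor
    return Decimal(q)  # plain number, e.g. '2' cores


def admits(hard: Dict[str, str], used: Dict[str, str], pod_requests: Dict[str, str]) -> bool:
    """Would adding this pod keep every requests.* total within the quota?"""
    for key, limit in hard.items():
        if not key.startswith("requests."):
            continue  # this sketch only checks request quotas
        resource = key[len("requests."):]  # e.g. 'cpu' or 'memory'
        current = parse_quantity(used.get(key, "0"))
        extra = parse_quantity(pod_requests.get(resource, "0"))  # missing request treated as 0
        if current + extra > parse_quantity(limit):
            return False
    return True
```

`Decimal` is used instead of floats so that sums such as `1500m + 500m` compare exactly against a `2`-core quota.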

Moreover, consider incorporating automated scaling mechanisms such as the Horizontal Pod Autoscaler (HPA), which adjusts the number of running pods based on observed metrics such as average CPU utilization. Scaling automatically in response to load helps maintain performance and stability during peak traffic periods.
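The Kubernetes documentation describes the core HPA calculation as `desiredReplicas = ceil[currentReplicas * (currentMetricValue / desiredMetricValue)]`, with scaling skipped when the ratio is within a tolerance of 1.0 (0.1 by default). Here is a sketch of that formula; the function name and the `min_replicas`/`max_replicas` defaults are illustrative, and the real controller also applies stabilization windows, scaling policies, and special handling for missing metrics.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA formula with tolerance and min/max clamping (no stabilization windows)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: skip scaling
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, with 4 replicas averaging 90% CPU utilization against a 60% target, the ratio is 1.5 and the sketch returns 6 replicas; at 63% the ratio of 1.05 falls within the tolerance and the count stays at 4.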

Additionally, implement security measures such as RBAC (Role-Based Access Control), network policies, and Pod Security Admission, which enforces the Pod Security Standards and replaces the PodSecurityPolicy API removed in Kubernetes 1.25. These safeguards help prevent unauthorized access and limit what a compromised workload can do. By prioritizing security in your deployment strategy, you can protect sensitive data and preserve the integrity of your Kubernetes environment.
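RBAC grants access through rules that list API groups, resources, and verbs, and its permissions are purely additive: there are no deny rules. This sketch models how a request is checked against the rules bound to a user. The `PolicyRule` dataclass and `is_allowed` function are hypothetical simplifications that ignore resource names, namespaces, and non-resource URLs. As in Kubernetes Role manifests, the empty string stands for the core API group.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class PolicyRule:
    api_groups: Tuple[str, ...]  # "" is the core API group (pods, services, ...)
    resources: Tuple[str, ...]
    verbs: Tuple[str, ...]


def _matches(allowed: Tuple[str, ...], value: str) -> bool:
    """A rule field matches if it lists the value or the '*' wildcard."""
    return "*" in allowed or value in allowed


def is_allowed(rules: Iterable[PolicyRule], api_group: str, resource: str, verb: str) -> bool:
    """RBAC is additive: a request is allowed if any bound rule matches; nothing denies."""
    return any(
        _matches(r.api_groups, api_group)
        and _matches(r.resources, resource)
        and _matches(r.verbs, verb)
        for r in rules
    )


if __name__ == "__main__":
    pod_reader = PolicyRule(api_groups=("",), resources=("pods",), verbs=("get", "list", "watch"))
    print(is_allowed([pod_reader], "", "pods", "list"))    # True
    print(is_allowed([pod_reader], "", "pods", "delete"))  # False
```

Because nothing can revoke a permission once any rule grants it, least privilege in RBAC comes from granting narrow rules in the first place rather than from adding exceptions later.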

Advanced Optimization Techniques

While implementing best practices is essential, you can further enhance the performance of your Kubernetes deployment by leveraging advanced optimization techniques.

Utilizing Kubernetes Native Tools for Optimization

Kubernetes provides autoscaling tools that can help optimize your deployment. The Horizontal Pod Autoscaler is built in and scales the number of pod replicas, while the Cluster Autoscaler, an official Kubernetes project that you deploy separately, adds nodes when pods cannot be scheduled for lack of resources and removes nodes that stay underutilized. Used together, they keep your applications and your cluster at an appropriate size, balancing performance against cost.
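Cluster Autoscaler's scale-up is triggered by pods that cannot be scheduled. As a rough illustration only, the sketch below estimates how many new nodes of a single type would absorb a set of pending pods, using first-fit decreasing bin packing on CPU and memory requests. This is a hypothetical simplification, not the Cluster Autoscaler's actual algorithm, which simulates scheduling against real node groups and chooses among them with configurable expanders.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Pod:
    cpu_m: int   # CPU request in millicores
    mem_mi: int  # memory request in MiB


def nodes_to_add(pending: List[Pod], node_cpu_m: int, node_mem_mi: int) -> int:
    """Estimate new nodes (one node type) via first-fit decreasing bin packing."""
    free: List[List[int]] = []  # remaining [cpu, mem] on each simulated new node
    for pod in sorted(pending, key=lambda p: p.cpu_m, reverse=True):
        if pod.cpu_m > node_cpu_m or pod.mem_mi > node_mem_mi:
            continue  # too large for this node type; adding nodes would not help
        for slot in free:
            if slot[0] >= pod.cpu_m and slot[1] >= pod.mem_mi:
                slot[0] -= pod.cpu_m
                slot[1] -= pod.mem_mi
                break
        else:  # no simulated node had room: open a new one
            free.append([node_cpu_m - pod.cpu_m, node_mem_mi - pod.mem_mi])
    return len(free)
```

The oversized-pod check mirrors a real constraint: if a pod cannot fit on any node type available to the autoscaler, adding more nodes of that type will never get it scheduled.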

Third-Party Tools for Enhanced Optimization

In addition to Kubernetes native tools, third-party and open-source tools, such as Prometheus for metrics collection and Grafana for dashboards, can further enhance the optimization of your deployment. These tools provide advanced monitoring, logging, and performance analysis capabilities, allowing you to gain deeper insights into your applications and identify areas for improvement.

Monitoring Your Kubernetes Deployment

Regularly monitoring your Kubernetes deployment is crucial to ensure that it continues to perform optimally and meet your application’s needs.

Key Metrics to Monitor

Monitor key metrics such as CPU and memory usage, network traffic, and application-specific metrics like request latency and error rates. Tracking these metrics lets you identify performance bottlenecks and take appropriate action before they affect users.
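Comparing observed usage with what pods requested is a quick way to turn raw metrics into action. The sketch assumes you already have per-pod usage samples, for example from a metrics pipeline or `kubectl top pods`, combined with the requests and limits from each pod spec. The `PodSample` fields and the 30% CPU and 90% memory thresholds are hypothetical choices to adjust for your workloads.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PodSample:
    name: str
    cpu_used_m: int     # observed CPU usage in millicores
    cpu_request_m: int  # CPU request from the pod spec
    mem_used_mi: int    # observed memory usage in MiB
    mem_limit_mi: int   # memory limit from the pod spec


def flag(samples: List[PodSample], low_cpu: float = 0.3,
         high_mem: float = 0.9) -> List[Tuple[str, str, float]]:
    """Flag pods using far less CPU than requested, or memory close to their limit."""
    findings = []
    for s in samples:
        if s.cpu_request_m:  # pods without a request have nothing to compare against
            cpu_ratio = s.cpu_used_m / s.cpu_request_m
            if cpu_ratio < low_cpu:
                findings.append((s.name, "cpu over-provisioned", round(cpu_ratio, 2)))
        if s.mem_limit_mi:  # pods without a limit cannot be close to it
            mem_ratio = s.mem_used_mi / s.mem_limit_mi
            if mem_ratio > high_mem:
                findings.append((s.name, "memory near limit (OOM risk)", round(mem_ratio, 2)))
    return findings
```

Over-provisioned CPU requests waste schedulable capacity, while memory usage close to the limit is worth watching because a container that exceeds its memory limit is terminated.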

Responding to Deployment Issues

Deployment issues will inevitably arise. When they do, respond promptly by diagnosing the root cause and implementing the necessary fixes. Proactive monitoring and a rapid response to issues help keep your applications highly available and performant.
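Many common failures surface as a handful of well-known pod status reasons, so a first diagnostic step can be looked up directly from the reason. The mapping below is a hypothetical starting point, not an exhaustive or authoritative list; `Pending` is a pod phase rather than a container reason, but it is included because it is such a frequent symptom.

```python
# Hypothetical first-step hints keyed by common pod status reasons (not exhaustive).
FIRST_STEPS = {
    "CrashLoopBackOff": "container keeps exiting; read the previous run's output with "
                        "`kubectl logs <pod> --previous`",
    "OOMKilled": "container exceeded its memory limit; compare usage with resources.limits.memory",
    "ImagePullBackOff": "image could not be pulled; check the image name, tag, and registry credentials",
    "ErrImagePull": "image could not be pulled; check the image name, tag, and registry credentials",
    "Pending": "pod has not started; if it is unscheduled, the events in "
               "`kubectl describe pod <pod>` usually say why (e.g. insufficient CPU or memory)",
}


def triage(reason: str) -> str:
    """Return a first diagnostic step for a pod status reason."""
    return FIRST_STEPS.get(
        reason,
        "unrecognized reason; start with `kubectl describe pod <pod>` and `kubectl get events`",
    )


if __name__ == "__main__":
    for reason in ("OOMKilled", "Pending", "SomethingElse"):
        print(f"{reason}: {triage(reason)}")
```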

In conclusion, optimizing your Kubernetes deployment is crucial for achieving peak performance and ensuring that your applications operate efficiently. By following the steps outlined in this article, including understanding Kubernetes deployment, implementing best practices, leveraging advanced optimization techniques, and monitoring your deployment, you can achieve an optimized Kubernetes deployment that maximizes resource utilization, enhances scalability, and improves the reliability of your applications.