LLMOps vs FMOps: What’s the Difference?

As artificial intelligence (AI) matures, the operational strategies behind machine learning projects are drawing closer scrutiny. Two paradigms in this space are Large Language Model Operations (LLMOps) and Fine-tuned Model Operations (FMOps). The acronym FMOps is more commonly expanded as Foundation Model Operations; this article uses it in the narrower sense of operating fine-tuned models. Understanding both approaches helps organizations select the right tools and deploy AI solutions more effectively.

Understanding the Basics of LLMOps and FMOps

Before comparing LLMOps and FMOps, it helps to define each term. The two frameworks address different aspects of machine learning deployment and serve different needs.

Defining LLMOps

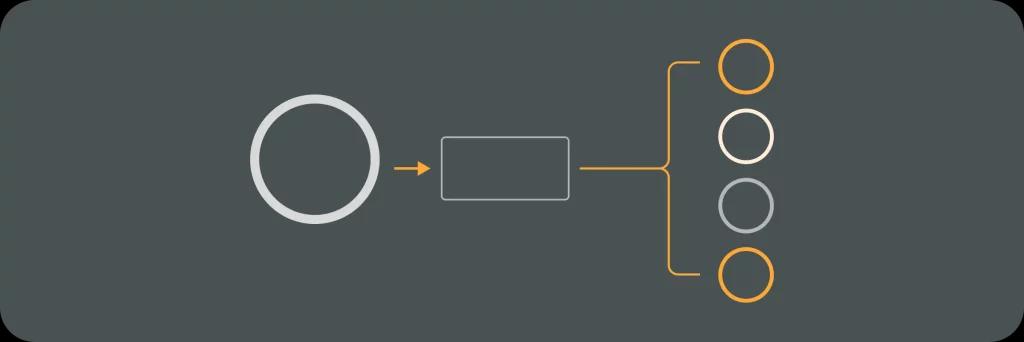

LLMOps refers to the specialized operations related to the management and deployment of large language models. This encompasses all the tasks and processes necessary to train, validate, and deploy these models effectively. The overarching aim of LLMOps is to streamline workflows, minimize errors, and ensure that large-scale language models deliver consistent results.

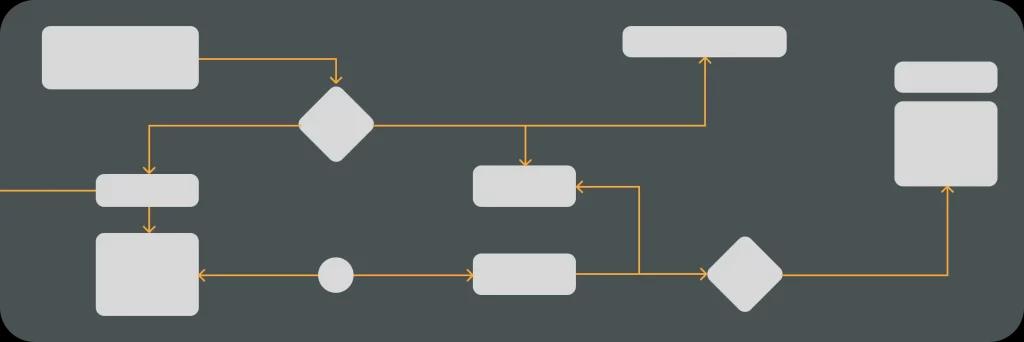

Organizations that adopt LLMOps typically build pipelines that integrate data ingestion, training, and monitoring, so that large volumes of data and compute are managed efficiently. Implementation usually requires collaboration among data scientists, software engineers, and system administrators to ensure that the models are accurate, scalable, and maintainable. That collaboration also makes it easier to keep adapting the models as AI technology changes.

Defining FMOps

FMOps, by contrast, focuses on fine-tuned models: models that have been pre-trained on a large dataset and then fine-tuned for specific tasks or domains. FMOps centers on optimizing these models so that they perform well in their designated applications.

The FMOps framework is designed to address nuances in operations, enabling teams to concentrate on aspects such as hyperparameter tuning, evaluation, and ongoing model optimization. This process often involves rigorous testing and validation phases, where models are assessed against various benchmarks to ensure they meet the desired performance criteria. Additionally, FMOps emphasizes the importance of maintaining model integrity over time, which includes implementing monitoring systems that can detect drift in model performance and trigger retraining processes as necessary. By focusing on these critical operational aspects, organizations can maximize the utility of their fine-tuned models, ensuring they remain relevant and effective in changing environments.
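The drift monitoring described above can be sketched in a few lines. This is a minimal illustration, not a prescribed method: the function name `needs_retraining`, the `max_drop` threshold, and the sample scores are all hypothetical, and a real system would likely use a statistical test over far more data.

```python
from statistics import mean


def needs_retraining(baseline_scores, recent_scores, max_drop=0.05):
    """Return True when the recent mean score falls more than
    max_drop below the baseline mean (hypothetical threshold)."""
    if not baseline_scores or not recent_scores:
        raise ValueError("both score lists must be non-empty")
    drop = mean(baseline_scores) - mean(recent_scores)
    return drop > max_drop


# Illustrative evaluation scores, e.g. accuracy on a held-out set.
baseline = [0.91, 0.90, 0.92]
recent = [0.84, 0.83, 0.85]

if needs_retraining(baseline, recent):
    print("Performance drift detected: schedule retraining")
```

Comparing means keeps the example short; the same trigger could wrap any metric the team already tracks.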

The Core Principles of LLMOps

LLMOps is grounded in several core principles that govern its framework. Understanding these principles can provide insight into how organizations can leverage LLMOps to achieve better operational efficiency.

The Role of Data in LLMOps

Data serves as the backbone of LLMOps. In this context, the quality and volume of data significantly influence the performance of language models. Consequently, a robust data pipeline is necessary for continuously feeding the model with diverse datasets that refine its capabilities.

Ensuring that data is accurate, well-labeled, and representative of real-world scenarios is fundamental for successful LLMOps deployments. Moreover, the incorporation of feedback loops where user interactions and model outputs are analyzed can further enhance data quality. This iterative process not only helps in identifying gaps in the dataset but also aids in understanding user needs, which can lead to more tailored and effective language model outputs.
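As a rough sketch of what basic data quality checks might look like, the snippet below drops records with empty text or missing labels and removes case-insensitive duplicates. The record shape (`text` and `label` keys) and the function name `clean_records` are assumptions made for illustration.

```python
def clean_records(records):
    """Keep records with non-empty text and a label, dropping duplicates.

    Each record is assumed to be a dict with "text" and "label" keys
    (a hypothetical schema, chosen only for this example).
    """
    seen = set()
    kept = []
    for rec in records:
        text = (rec.get("text") or "").strip()
        label = rec.get("label")
        if not text or label is None:
            continue  # unusable: empty text or unlabeled
        key = text.lower()
        if key in seen:
            continue  # duplicate, ignoring case and outer whitespace
        seen.add(key)
        kept.append({"text": text, "label": label})
    return kept


raw = [
    {"text": " Hello ", "label": "greeting"},
    {"text": "hello", "label": "greeting"},  # duplicate
    {"text": "", "label": "greeting"},       # empty text
    {"text": "Bye", "label": None},          # missing label
]
print(clean_records(raw))
```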

Key Components of LLMOps

- Data Management: Encompasses data collection, cleaning, transformation, and storage.

- Model Training: Involves the processes used to develop the language model, including choosing architectures and optimizing parameters.

- Monitoring and Maintenance: Continuous assessment of model performance with provisions for updates and improvements.
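The three components above can be pictured as stages in a single pipeline. The sketch below is a toy arrangement under stated assumptions: the stage functions, the `PipelineRun` class, and the majority-label "model" are hypothetical stand-ins, since real training and monitoring are far more involved.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class PipelineRun:
    artifacts: dict = field(default_factory=dict)
    completed: list = field(default_factory=list)


def manage_data(artifacts):
    # Data management: strip whitespace and drop empty texts.
    return [(t.strip(), label) for t, label in artifacts["raw"] if t.strip()]


def train_model(artifacts):
    # Model training stand-in: "learn" the most frequent label.
    labels = [label for _, label in artifacts["data"]]
    return Counter(labels).most_common(1)[0][0]


def monitor(artifacts):
    # Monitoring: fraction of records the toy model labels correctly.
    data = artifacts["data"]
    hits = sum(label == artifacts["model"] for _, label in data)
    return hits / len(data)


def run_pipeline(raw, stages):
    run = PipelineRun(artifacts={"raw": raw})
    for name, stage in stages:
        run.artifacts[name] = stage(run.artifacts)
        run.completed.append(name)
    return run


STAGES = [("data", manage_data), ("model", train_model), ("accuracy", monitor)]
run = run_pipeline(
    [("good ", "pos"), ("great", "pos"), ("bad", "neg"), ("  ", "neg")],
    STAGES,
)
print(run.completed, run.artifacts["model"], round(run.artifacts["accuracy"], 2))
```

Keeping each stage as a plain function that reads earlier artifacts makes it easy to swap in a real implementation later without changing the runner.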

In addition to these components, collaboration among cross-functional teams is essential for the success of LLMOps. Data scientists, engineers, and domain experts must work closely to ensure that the model not only performs well technically but also meets the specific needs of the business or application. This collaborative approach fosters a culture of shared responsibility and innovation, allowing for the rapid iteration of ideas and solutions that can significantly enhance the model’s effectiveness.

Furthermore, the ethical considerations surrounding LLMOps cannot be overlooked. As organizations deploy language models that interact with users, they must be vigilant about biases in data and outputs. Implementing fairness checks and transparency measures is crucial to ensure that the models operate within ethical boundaries and promote trust among users. This proactive stance on ethics will not only safeguard the organization but also contribute to a more equitable use of AI technologies in society.
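One simple form a fairness check could take is comparing how often each user group receives a favorable model outcome. The sketch below computes that gap; the group names, the sample outcomes, and the function name `positive_rate_gap` are hypothetical, and this single metric is only one of many possible fairness measures.

```python
from collections import defaultdict


def positive_rate_gap(outcomes):
    """Given (group, is_positive) pairs, return the largest difference
    in positive-outcome rate between groups, plus the per-group rates."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, is_positive in outcomes:
        totals[group] += 1
        positives[group] += int(is_positive)
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values()), rates


# Illustrative outcomes for two hypothetical groups.
outcomes = [
    ("a", True), ("a", True), ("a", False), ("a", True),
    ("b", True), ("b", False), ("b", False), ("b", False),
]
gap, rates = positive_rate_gap(outcomes)
print(rates, gap)
```

A large gap does not prove bias on its own, but it is a useful signal for deciding where to look more closely.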

The Core Principles of FMOps

FMOps embodies principles that focus on fine-tuning pre-trained models, allowing for high adaptability within various contexts and applications. This adaptability is crucial in an era where data is constantly evolving, and the demand for personalized solutions is at an all-time high. By leveraging FMOps, organizations can ensure that their models remain relevant and effective, catering to the unique needs of their users while maintaining a competitive edge in their respective industries.

The Importance of Feedback in FMOps

Feedback mechanisms are vital in FMOps because they help refine model performance over time. By collecting user feedback and performance metrics, organizations can iterate on their models and improve accuracy and relevance. This process also fosters collaboration between users and developers, creating a more user-centered approach to model development.

Feedback loops ensure that the model adapts to changing data and user expectations, forming a dynamic learning environment that promotes continuous improvement. By integrating real-time feedback, organizations can quickly identify areas for enhancement and pivot their strategies accordingly, thereby minimizing the risk of obsolescence in a fast-paced digital landscape. This responsiveness is essential for maintaining user trust and satisfaction, as it demonstrates a commitment to delivering high-quality, tailored solutions that evolve alongside user needs.
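A feedback loop of this kind might be approximated with a rolling window over recent user ratings. The sketch below is an assumption-laden illustration: the `FeedbackWindow` class, the window size, and the `min_positive_rate` threshold are hypothetical choices, not part of any specific FMOps tool.

```python
from collections import deque


class FeedbackWindow:
    """Track the most recent thumbs-up/down ratings and flag the model
    for review when the positive rate falls below a threshold."""

    def __init__(self, size=100, min_positive_rate=0.8):
        self.ratings = deque(maxlen=size)  # old ratings fall off the front
        self.min_positive_rate = min_positive_rate

    def record(self, positive):
        self.ratings.append(bool(positive))

    def positive_rate(self):
        if not self.ratings:
            return None
        return sum(self.ratings) / len(self.ratings)

    def needs_review(self):
        # Only judge once the window is full, to avoid noisy early alerts.
        full = len(self.ratings) == self.ratings.maxlen
        return full and self.positive_rate() < self.min_positive_rate


window = FeedbackWindow(size=4, min_positive_rate=0.75)
for rating in [True, True, False, False]:
    window.record(rating)
print(window.positive_rate(), window.needs_review())
```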

Key Components of FMOps

- Fine-Tuning Strategies: Focused on adjusting model parameters to perform optimally on specific tasks or datasets. These strategies often involve techniques such as transfer learning, where knowledge gained from one task is applied to another, thereby accelerating the fine-tuning process and improving outcomes.

- Evaluation Metrics: Identification of key performance indicators that may vary depending on the use case. Organizations must carefully select these metrics to ensure they align with their goals, whether that be precision, recall, or user engagement, allowing for a comprehensive assessment of model effectiveness.

- Iterative Improvements: Regular updates and refinements based on feedback and emerging data trends. This iterative process not only enhances the model’s capabilities but also encourages a culture of innovation within teams, as they continuously seek out new ways to leverage data for improved performance and user experience.
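To make the evaluation metrics above concrete, here is a small sketch that computes precision and recall for a binary task. The labels and predictions are made-up illustration data, and the function name `precision_recall` is hypothetical.

```python
def precision_recall(y_true, y_pred, positive=1):
    """Compute precision and recall for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Illustrative labels and predictions for a fine-tuned classifier.
y_true = [1, 0, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0]
precision, recall = precision_recall(y_true, y_pred)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Precision answers "of the items flagged positive, how many were right?", while recall answers "of the true positives, how many were found?". Which one matters more depends on the use case.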

Comparing LLMOps and FMOps

LLMOps and FMOps target different operational goals, but they share some common ground as well as notable differences. Understanding both helps organizations decide which operational strategy to adopt.

Similarities Between LLMOps and FMOps

Both LLMOps and FMOps emphasize the importance of data quality and effective model training. They also rely heavily on automation within their workflows to ensure efficiency and reliability. Furthermore, both paradigms aim to improve AI systems continuously, ensuring that deployed models remain relevant and effective over time. This shared commitment to quality and automation often leads to the implementation of best practices in data governance, model versioning, and performance monitoring, which are crucial for maintaining the integrity of AI applications across various industries.

Moreover, both LLMOps and FMOps benefit from collaborative efforts among data scientists, engineers, and domain experts. This interdisciplinary approach fosters innovation and allows for the integration of diverse perspectives, which can enhance model performance and applicability. By leveraging shared insights and expertise, teams can identify potential pitfalls and opportunities for improvement, ultimately leading to more robust and effective AI solutions.

Differences Between LLMOps and FMOps

The primary difference lies in focus: LLMOps manages large-scale language models trained on vast datasets, while FMOps concentrates on optimizing fine-tuned models for specific tasks, which are often smaller. LLMOps tooling and architecture therefore tend to require more computational resources than the lighter models typical of FMOps. This difference in scale affects the infrastructure needed and also the algorithms and techniques that are practical in each context.
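A back-of-the-envelope calculation shows why model size drives infrastructure needs. The sketch below estimates the memory required just to hold model weights, using the standard byte widths of common numeric formats. The parameter counts are hypothetical examples, and the estimate ignores activations, caches, and optimizer state, which add substantially more during training and inference.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params, dtype="fp16"):
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Hypothetical model sizes chosen only for comparison.
for name, params in [("large model", 7_000_000_000), ("small model", 125_000_000)]:
    print(f"{name}: {weight_memory_gb(params, 'fp16'):.2f} GB in fp16")
```

In this illustration, the larger model needs 14 GB for its fp16 weights before any request is served, while the smaller one needs 0.25 GB.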

Furthermore, deployment strategies for LLMOps and FMOps can vary significantly. LLMOps often necessitates more complex orchestration of services to handle large-model inference and real-time data processing. In contrast, FMOps can rely on simpler deployment pipelines, which makes it more agile when user requirements or data inputs change quickly. This flexibility allows organizations using FMOps to iterate quickly on their models and respond to feedback and evolving needs with greater speed.

Choosing Between LLMOps and FMOps

Deciding whether to implement LLMOps or FMOps is a critical choice for organizations venturing into AI deployment. The following considerations can help guide this decision-making process.

Factors to Consider

- Organizational Needs: Clearly define the goals and requirements of implementing AI solutions.

- Available Resources: Assess computational resources and budget constraints.

- User Expectations: Consider the needs of end-users and the level of accuracy required for tasks.

Making the Right Decision for Your Business

Ultimately, the choice between LLMOps and FMOps should align with your organization’s strategic goals and operational capabilities. By thoroughly analyzing your specific needs, resources, and user expectations, you can opt for the approach that best suits your AI initiatives.

As the landscape of machine learning operations continues to evolve, understanding these frameworks is indispensable for organizations aiming to harness the full potential of AI technologies.
