Secure LLM Deployment: Key Considerations

The deployment of large language models (LLMs) is transforming industries and workflows, offering unprecedented capabilities in natural language understanding and generation. However, as organizations rush to harness that potential, deploying these models securely becomes paramount. This article covers what LLM deployment involves, the security measures it requires, the challenges organizations commonly face, and the trends likely to shape secure deployment in the future.

Understanding LLM Deployment

Defining LLM Deployment

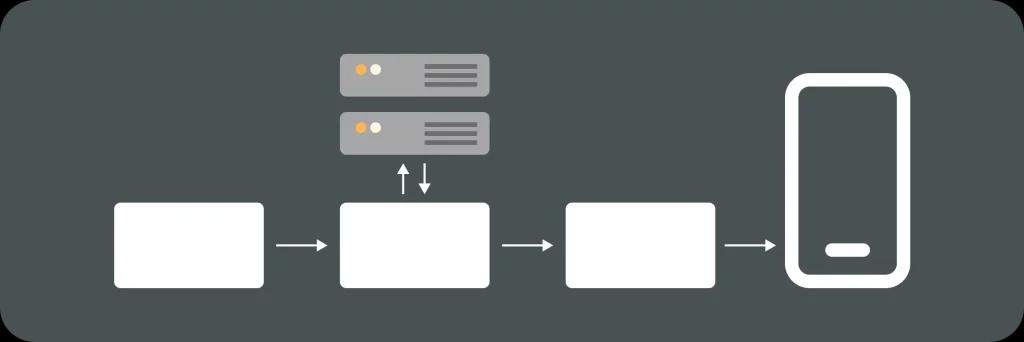

LLM deployment refers to the process of integrating large language models into applications or services and making them accessible to end users. It spans several stages, including model selection, infrastructure setup, API integration, and ongoing maintenance. Each stage adds to the complexity of deployment and requires careful planning and execution.

Furthermore, the choice of deployment environment—whether on-premises, in the cloud, or in a hybrid model—can significantly impact performance, security, and scalability. Organizations must evaluate their needs and capabilities to ensure they can effectively support the model throughout its lifecycle. For instance, cloud-based deployments often offer greater flexibility and scalability, allowing organizations to adjust resources based on demand. On the other hand, on-premises solutions may provide enhanced control over data security, which can be crucial for industries dealing with sensitive information.

Importance of LLM Deployment

The deployment of LLMs is critical because it allows organizations to leverage the model’s capabilities to automate tasks, enhance customer experiences, and drive innovative solutions. Proper deployment can lead to improvements in efficiency, productivity, and insights, providing businesses with a competitive edge.

However, without careful implementation and monitoring, organizations risk exposing themselves to vulnerabilities such as data breaches, model misuse, and compliance failures. Understanding the nuances of LLM deployment is therefore essential for harnessing these models' potential responsibly and securely.

Organizations must also consider the ethical implications of deploying LLMs, including biases present in training data and the potential for generating misleading or harmful content. Establishing robust guidelines and oversight mechanisms can help mitigate these risks and ensure that LLMs are used in ways that align with organizational values and societal norms.

Moreover, because LLM technology evolves continuously, deployed models need ongoing updates. As new data becomes available or user needs change, organizations must be prepared to retrain or fine-tune their models to keep them relevant and accurate. This iterative process improves the model's performance and also fosters a culture of innovation, encouraging teams to explore new applications and functionality built on language models.

Essential Elements of Secure LLM Deployment

Security Measures for LLM Deployment

Secure deployment of LLMs encompasses a series of robust security measures to protect the model, the data it processes, and the infrastructure supporting it. These measures include encryption of data at rest and in transit, access controls, and user authentication protocols.
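As one illustration of access control combined with user authentication, the minimal Python sketch below stores only SHA-256 digests of API keys and maps each key to a role with a fixed set of permitted actions. The role names, actions, and in-memory registry are hypothetical; a real deployment would keep keys in a secrets manager, and encryption at rest and in transit would normally come from TLS and a vetted key-management service rather than hand-written code.

```python
import hashlib
import hmac
import secrets

# Hypothetical roles and actions; real policies would live in a policy store.
ROLE_PERMISSIONS = {
    "viewer": {"generate"},
    "admin": {"generate", "fine_tune", "view_logs"},
}

# In-memory registry mapping SHA-256 key digests to roles (illustration only).
KEY_REGISTRY = {}


def hash_key(api_key: str) -> str:
    """Return the hex SHA-256 digest of an API key; raw keys are never stored."""
    return hashlib.sha256(api_key.encode("utf-8")).hexdigest()


def issue_key(role: str) -> str:
    """Create a random API key for a known role and record only its digest."""
    if role not in ROLE_PERMISSIONS:
        raise ValueError(f"unknown role: {role}")
    api_key = secrets.token_urlsafe(32)
    KEY_REGISTRY[hash_key(api_key)] = role
    return api_key


def authorize(api_key: str, action: str) -> bool:
    """Authenticate the key, then check that its role permits the action."""
    presented = hash_key(api_key)
    matched_role = None
    # Check every stored digest with a constant-time comparison.
    for stored_digest, role in KEY_REGISTRY.items():
        if hmac.compare_digest(presented, stored_digest):
            matched_role = role
    if matched_role is None:
        return False  # authentication failed
    return action in ROLE_PERMISSIONS[matched_role]  # authorization check
```

For example, a key issued with `issue_key("viewer")` passes `authorize(key, "generate")` but fails `authorize(key, "fine_tune")`. Keeping authentication and authorization as separate steps makes the permission model easier to audit.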

Additionally, organizations should implement network security practices, such as firewalls and intrusion detection systems, to safeguard the deployment environment from external threats. Regular security audits and vulnerability assessments can further enhance security by identifying and mitigating risks proactively. Furthermore, employing secure coding practices during the development phase can help eliminate potential vulnerabilities before they become an issue in the deployment stage. This proactive approach not only protects sensitive data but also fosters a culture of security awareness within the development team.
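Input validation is one secure coding practice that applies directly to LLM endpoints. The sketch below, assuming a hypothetical `MAX_PROMPT_CHARS` limit, strips control characters and rejects empty or oversized prompts before they reach the model. It does not defend against prompt injection, which needs separate controls.

```python
import unicodedata

# Hypothetical limit; tune it to the model's context window and use case.
MAX_PROMPT_CHARS = 4000


class PromptRejected(ValueError):
    """Raised when user input fails validation."""


def validate_prompt(raw: object) -> str:
    """Return a cleaned prompt or raise PromptRejected."""
    if not isinstance(raw, str):
        raise PromptRejected("prompt must be a string")
    # Drop control characters (Unicode category "Cc"), keeping newlines and tabs.
    cleaned = "".join(
        ch for ch in raw if ch in "\n\t" or unicodedata.category(ch) != "Cc"
    ).strip()
    if not cleaned:
        raise PromptRejected("prompt is empty")
    if len(cleaned) > MAX_PROMPT_CHARS:
        raise PromptRejected(f"prompt exceeds {MAX_PROMPT_CHARS} characters")
    return cleaned
```

Rejecting bad input with a dedicated exception type lets the calling service return a clear client error instead of forwarding malformed data to the model.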

Risk Management in LLM Deployment

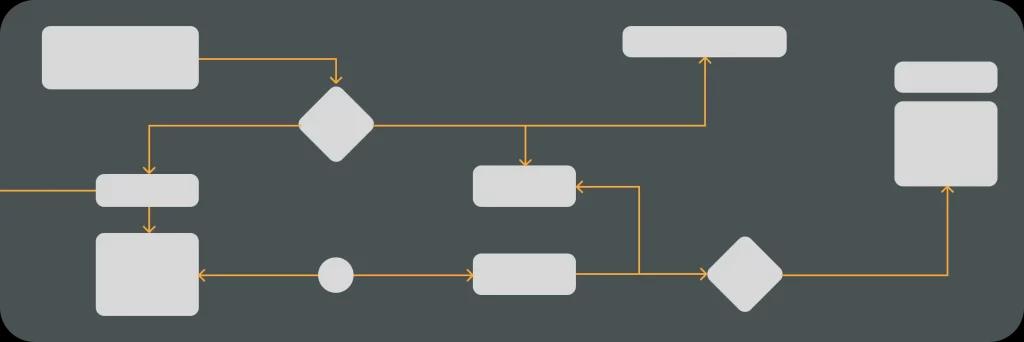

Risk management is integral to LLM deployment. Organizations should begin by conducting a comprehensive risk assessment to identify potential vulnerabilities associated with their specific deployment scenario. This includes evaluating data sensitivity, user access levels, and compliance obligations.
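A common way to rank the risks surfaced by such an assessment is a likelihood-times-impact score, with each factor rated from 1 to 5. The sketch below assumes that scheme; the thresholds and example risks are hypothetical and would come from the organization's own assessment.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    def __post_init__(self) -> None:
        if not (1 <= self.likelihood <= 5 and 1 <= self.impact <= 5):
            raise ValueError("likelihood and impact must be between 1 and 5")

    @property
    def score(self) -> int:
        """Likelihood times impact, giving a value from 1 to 25."""
        return self.likelihood * self.impact

    @property
    def level(self) -> str:
        # Hypothetical thresholds; each organization sets its own.
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"


def prioritize(risks: list) -> list:
    """Order risks from highest to lowest score so mitigation starts at the top."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Hypothetical entries reflecting data sensitivity, access levels, and compliance.
register = [
    Risk("Missed compliance logging requirement", likelihood=2, impact=4),
    Risk("Sensitive customer data in prompts", likelihood=4, impact=5),
    Risk("Over-broad internal API access", likelihood=3, impact=4),
]
for risk in prioritize(register):
    print(f"{risk.score:>2} {risk.level:<6} {risk.name}")
```

The multiplicative score is crude, but it gives the risk management plan an explicit, reviewable ordering.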

Once risks are identified, organizations should develop a risk management plan that outlines mitigation strategies. This could involve implementing additional security controls, establishing incident response protocols, and training staff in best practices for secure LLM use.

Because the threat landscape is constantly evolving, the plan itself needs continuous monitoring and regular updates. Real-time monitoring tools and analytics let organizations detect new vulnerabilities quickly and adapt, keeping LLM deployments secure over time. This dynamic approach strengthens security and builds trust among users and stakeholders, reinforcing the organization's commitment to safeguarding sensitive information.
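As a minimal example of real-time monitoring, the sketch below raises an alert when more than a threshold number of events, such as failed authentication attempts against the LLM API, occur within a sliding time window. The class name, threshold, and window length are hypothetical; timestamps are passed in explicitly (for instance from `time.monotonic()`) and are assumed to be non-decreasing.

```python
from collections import deque


class SlidingWindowAlert:
    """Alert when more than `threshold` events fall inside the last `window_seconds`."""

    def __init__(self, threshold: int, window_seconds: float) -> None:
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._events = deque()  # timestamps of recent events, oldest first

    def record(self, timestamp: float) -> bool:
        """Record one event; return True if the window now exceeds the threshold."""
        self._events.append(timestamp)
        # Evict events that have aged out of the window.
        cutoff = timestamp - self.window_seconds
        while self._events and self._events[0] <= cutoff:
            self._events.popleft()
        return len(self._events) > self.threshold
```

With `threshold=3` and `window_seconds=60`, a fourth failure within a minute trips the alert, which could then feed the incident response protocol.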

Key Considerations for Secure LLM Deployment

Evaluating Your LLM Deployment Needs

Before embarking on LLM deployment, organizations must thoroughly evaluate their specific needs. This involves understanding the business objectives that the model must fulfill, the types of data that will be processed, and the necessary performance metrics. Key considerations include scalability, latency requirements, and integration capabilities with existing systems.
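Latency requirements are usually stated as a percentile target, for example that 95% of requests must finish within a given budget. The sketch below checks measured latencies against such a target using the nearest-rank percentile; the function names and the 300 ms budget in the usage note are hypothetical.

```python
import math


def percentile(samples: list, p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no latency samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def meets_latency_target(samples_ms: list, p: float, target_ms: float) -> bool:
    """True if the p-th percentile latency is within the target."""
    return percentile(samples_ms, p) <= target_ms
```

With 100 measured latencies of 1 to 100 ms, the 95th percentile is 95 ms, so a 300 ms target is met; a single 900 ms outlier among four samples would fail the same check.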

Furthermore, organizations should assess their current technical infrastructure and whether it can support the demands of LLM deployment. Realistic resource allocation is crucial to ensure the deployment runs efficiently while meeting security standards. It is also essential to consider the potential for future growth; organizations should anticipate changes in data volume and user demand that may arise as the business evolves. This foresight can guide decisions on whether to opt for on-premises solutions or cloud-based services, each of which comes with its own set of advantages and challenges.
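Anticipating growth can be turned into a rough capacity estimate: project peak load forward with a compound monthly growth rate, then divide by the throughput one model replica can sustain, leaving some headroom. Every input below (growth rate, per-replica throughput, 30% headroom) is a hypothetical placeholder to be replaced with measured values.

```python
import math


def project_peak_load(current_peak_rps: float, monthly_growth: float, months: int) -> float:
    """Compound growth: current * (1 + growth) ** months."""
    return current_peak_rps * (1 + monthly_growth) ** months


def replicas_needed(peak_rps: float, rps_per_replica: float, headroom: float = 0.3) -> int:
    """Replicas to serve peak load plus a safety margin (30% is a hypothetical default)."""
    return math.ceil(peak_rps * (1 + headroom) / rps_per_replica)
```

For instance, 40 requests per second growing 10% a month reaches about 125.5 requests per second after 12 months (40 × 1.1^12), which at 8 requests per second per replica and 30% headroom calls for 21 replicas.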

Choosing the Right Security Solutions for LLM Deployment

Choosing the right security solutions is vital to ensure the integrity of LLM deployment. Organizations should consider solutions that provide comprehensive protection, such as cloud security services, data loss prevention tools, and threat intelligence platforms.
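To make the data loss prevention idea concrete, the sketch below redacts email addresses and card-number-like digit runs from text before it is sent to a model or written to logs. The two regular expressions are deliberately simple, hypothetical detectors; commercial DLP tools use far richer rules and extra validation such as checksum tests.

```python
import re

# Hypothetical, deliberately simple detectors.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD_NUMBER": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> tuple:
    """Replace each match with a [LABEL] placeholder and count matches per label."""
    findings = {}
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            findings[label] = count
    return text, findings
```

Returning the per-label counts alongside the redacted text lets the caller log that sensitive data was caught without logging the data itself.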

Additionally, using machine learning and artificial intelligence for security monitoring can improve an organization's ability to detect anomalies and respond to threats in real time. Selecting security vendors with proven track records in AI-related solutions can provide further assurance. It is equally important to take a multi-layered approach that combines encryption, access controls, and regular security audits, so that a failure in one layer does not expose the whole deployment at any point in the LLM's lifecycle. Regular training and awareness programs further strengthen security by ensuring that everyone involved understands the importance of safeguarding sensitive data and following cybersecurity best practices.
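Machine-learning-based detectors are beyond a short example, but the underlying idea of flagging deviations from a learned baseline can be sketched with a simple z-score test. The sketch below is a statistical stand-in for such tooling, not an ML model: it flags observations, for example tokens consumed per request, that lie more than a hypothetical three standard deviations from the baseline mean.

```python
import statistics


def zscore_anomalies(baseline: list, observations: list, threshold: float = 3.0) -> list:
    """Return observations more than `threshold` standard deviations from the baseline mean."""
    if len(baseline) < 2:
        raise ValueError("baseline needs at least two values")
    mean = statistics.fmean(baseline)
    stdev = statistics.pstdev(baseline)
    if stdev == 0:
        # A perfectly flat baseline: anything different is unusual.
        return [x for x in observations if x != mean]
    return [x for x in observations if abs(x - mean) / stdev > threshold]
```

A z-score test assumes a roughly stable, unimodal baseline; traffic with strong daily cycles would need a per-time-of-day baseline or a more capable model.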

Challenges in Secure LLM Deployment

Common Obstacles in LLM Deployment

Despite the clear advantages of deploying LLMs, organizations face a range of challenges. Common obstacles include the complexity of model integration, the need for specialized skills, and the rapid pace of technological change. Ensuring that teams have the necessary expertise to deploy and manage LLMs effectively is critical.

Moreover, regulatory compliance poses additional challenges, as organizations must navigate a landscape of constantly evolving data privacy and security laws. Failing to comply can result in significant repercussions, highlighting the need for thorough planning and ongoing monitoring of compliance requirements. This can often mean investing in legal counsel or compliance officers who specialize in technology and data protection, which can strain resources, especially for smaller organizations.

Furthermore, the integration of LLMs into existing systems can be fraught with difficulties. Legacy systems may not be compatible with the latest technologies, requiring costly upgrades or even complete overhauls. This can lead to delays in deployment and increased operational costs, as organizations must balance the need for innovation with the realities of their current infrastructure. The challenge is not just technical; it also involves change management, as employees must adapt to new workflows and tools that come with LLM implementation.

Overcoming Security Challenges in LLM Deployment

Overcoming security challenges requires a proactive approach. Organizations should create a culture of security awareness, where all team members understand their role in safeguarding the LLM deployment. Regular training sessions and updates on new threats can help maintain a strong security posture. This culture can be fostered through gamified training programs that engage employees and make learning about security protocols more interactive and enjoyable.

Additionally, building security into every stage of the development lifecycle, a practice known as DevSecOps, helps address vulnerabilities early in the deployment process. Security measures then become part of the design rather than an afterthought, which makes deployments more resilient. Automating security testing streamlines this work, letting teams identify and fix vulnerabilities before they become critical. Automated security checks in the build pipeline can both strengthen an organization's security posture and shorten its deployment timelines, leading to a more efficient and secure LLM implementation.
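One automated check that fits naturally into a DevSecOps pipeline is scanning source files for hardcoded credentials before they are merged. The sketch below uses a few hypothetical regular-expression rules; dedicated secret scanners ship far more rules and are preferable in practice. A CI step could call `sys.exit(scan_paths(changed_files))` so that any finding fails the build, where `changed_files` is a hypothetical list supplied by the pipeline.

```python
import re

# Hypothetical rules; dedicated secret scanners ship many more.
SECRET_PATTERNS = [
    ("AWS access key ID", re.compile(r"\bAKIA[0-9A-Z]{16}\b")),
    ("private key block", re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----")),
    ("hardcoded API key", re.compile(r"(?i)\bapi[_-]?key\s*[:=]\s*['\"][^'\"]{16,}['\"]")),
]


def scan_text(text: str) -> list:
    """Return (line number, rule label) for every line matching a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for label, pattern in SECRET_PATTERNS:
            if pattern.search(line):
                findings.append((lineno, label))
    return findings


def scan_paths(paths: list) -> int:
    """Scan each file, print findings, and return a CI-style exit code (1 = findings)."""
    found_any = False
    for path in paths:
        with open(path, encoding="utf-8", errors="replace") as fh:
            for lineno, label in scan_text(fh.read()):
                print(f"{path}:{lineno}: possible {label}")
                found_any = True
    return 1 if found_any else 0
```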

Future of Secure LLM Deployment

Emerging Trends in LLM Deployment

As the landscape of LLM deployment continues to evolve, several emerging trends are reshaping the approach to secure deployments. One such trend is the increased use of federated learning, which allows models to be trained across decentralized devices while keeping data localized. This can enhance privacy and security, because sensitive data does not have to leave its original environment, although the shared model updates can still leak information unless they are protected with techniques such as secure aggregation or differential privacy.
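The server-side step of federated learning can be sketched with Federated Averaging (FedAvg): each client trains on its own data and sends back only updated model weights, which the server combines into an average weighted by local dataset size. The flat lists of floats below are a simplifying assumption; real LLM weights are large tensors handled by a framework.

```python
def federated_average(client_updates: list) -> list:
    """Average client weight vectors, weighting each by its number of local examples.

    Each update is a (weights, num_examples) pair. Only weights and example
    counts reach the server; raw training data stays on each client.
    """
    if not client_updates:
        raise ValueError("no client updates")
    total_examples = sum(n for _, n in client_updates)
    if total_examples <= 0:
        raise ValueError("clients reported no training examples")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n_examples in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must send weights of the same shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n_examples / total_examples
    return averaged
```

For example, a client with 1 example reporting `[1.0, 2.0]` and a client with 3 examples reporting `[3.0, 4.0]` average to `[2.5, 3.5]`, so the larger local dataset carries more weight.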

Another trend is the integration of explainable AI into LLMs, which helps developers and users understand how decisions are made by the model. This transparency can build trust and ensure compliance with regulatory expectations.
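One simple explainability technique is leave-one-out (occlusion) attribution: remove each input token in turn and measure how much the model's score changes. The sketch below applies it to a hypothetical keyword-weighted scorer standing in for a model; with a real LLM, `score_fn` might return something like the log-probability of a chosen output, and each removal costs an extra model call.

```python
def toy_score(tokens: list) -> float:
    """Hypothetical stand-in for a model: sums fixed per-keyword weights."""
    weights = {"refund": 2.0, "urgent": 1.5, "thanks": -0.5}
    return sum(weights.get(t, 0.0) for t in tokens)


def leave_one_out(tokens: list, score_fn) -> list:
    """Attribute to each token the score drop caused by removing it."""
    base = score_fn(tokens)
    return [
        (tok, base - score_fn(tokens[:i] + tokens[i + 1:]))
        for i, tok in enumerate(tokens)
    ]
```

For the input `["urgent", "refund", "please", "thanks"]`, "refund" receives the largest attribution (2.0) and "thanks" a negative one (-0.5), which is the kind of per-input explanation that helps developers and users see what drove an output.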

Predictions for Secure LLM Deployment

Looking ahead, the need for secure LLM deployment will only grow as organizations rely more heavily on these models. Enhanced security protocols, coupled with more sophisticated risk assessment frameworks, are likely to become standard in LLM deployment practices.

Furthermore, as the awareness of AI-related risks escalates, regulatory bodies may introduce more stringent guidelines, prompting organizations to adopt even more robust security measures. The future of LLM deployment is therefore poised to be characterized by continuous innovation and a heightened focus on security.

In conclusion, navigating the complexities of secure LLM deployment requires a comprehensive understanding of both the technology and the associated security considerations. By focusing on detailed risk management, proper evaluation of deployment needs, and embracing emerging trends, organizations can effectively harness the benefits of LLMs while safeguarding their assets and data.
