What are Recurrent Neural Networks (RNNs)?

Recurrent Neural Networks (RNNs) are a type of neural network that is designed to process sequential data. They are particularly useful in tasks where the order of the inputs is important, such as language modeling, speech recognition, machine translation, and time series prediction. RNNs have gained significant attention in recent years due to their ability to capture temporal dependencies in data.

Understanding the Basics of Recurrent Neural Networks

Before diving into the details of RNNs, it is essential to have an understanding of the fundamentals of neural networks. Neural networks are a class of machine learning algorithms that are inspired by the structure and functioning of the human brain. They consist of interconnected nodes, called neurons, that work together to process and analyze data.

Neural networks loosely mirror the way the brain processes information. Just as the brain’s neurons communicate with each other through synapses, artificial neural networks pass signals between interconnected nodes over weighted connections. Because many nodes compute in parallel and what the network has learned is spread across its weights, neural networks can handle complex tasks and learn patterns from large datasets.

The Concept of Neural Networks

At the core of neural networks is the concept of learning from data. They learn by adjusting the weights and biases of the connections between neurons based on the input data and the desired output. This process, known as training, allows neural networks to make predictions or classify new data.

Training a neural network involves presenting it with labeled examples and adjusting the parameters until the network’s output closely matches the desired output. Each iteration consists of forward propagation, which computes a prediction, and backpropagation, which measures how much each parameter contributed to the error. Repeating this cycle lets the network improve its performance over time by learning from its mistakes.
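The training loop described above can be sketched on the smallest possible "network": a single linear neuron. This is a minimal illustration, not a production recipe. The function name `train_neuron`, the learning rate, the epoch count, and the toy data are all assumptions chosen for the example.

```python
def train_neuron(examples, lr=0.05, epochs=500):
    """Fit y = w * x + b to (x, target) pairs with plain gradient descent."""
    w, b = 0.0, 0.0
    n = len(examples)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, target in examples:
            pred = w * x + b        # forward propagation
            err = pred - target     # prediction error for this example
            grad_w += 2 * err * x   # derivative of err**2 with respect to w
            grad_b += 2 * err       # derivative of err**2 with respect to b
        # Step against the average gradient to reduce the mean squared error.
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Hypothetical labeled examples generated from y = 2x + 1.
examples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_neuron(examples)
print(f"w={w:.3f}, b={b:.3f}")
```

Real networks have many more parameters and use backpropagation to compute the gradients layer by layer, but the adjust-and-repeat structure is the same.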

The Different Types of Neural Networks

Neural networks can be classified into various types based on their architecture and the way they process data. The most common types include feedforward neural networks, convolutional neural networks, and recurrent neural networks. Each type has its strengths and weaknesses, making them suitable for different types of tasks.

Feedforward neural networks are the simplest form of neural networks, where information flows in one direction, from input to output. Convolutional neural networks are commonly used in image recognition tasks, where they excel at capturing spatial hierarchies. Recurrent neural networks, on the other hand, are designed to handle sequential data and have memory capabilities, making them ideal for tasks such as natural language processing and time series prediction.

Diving Deeper into Recurrent Neural Networks

Now let’s delve deeper into the unique features of Recurrent Neural Networks that set them apart from other types of neural networks.

The Unique Features of RNNs

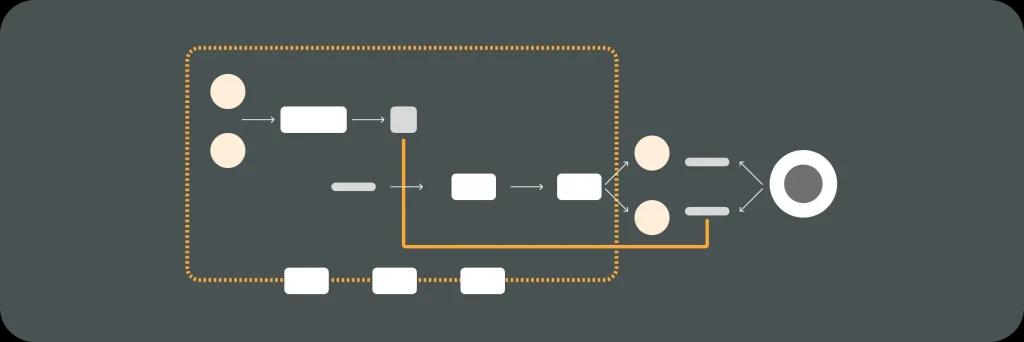

RNNs are designed to handle sequential data by incorporating feedback connections that allow information to flow from one step to another. This feedback loop enables RNNs to process data with temporal dependencies, making them well-suited for tasks involving sequences such as sentences, speech, and time series.

One key aspect of RNNs is their ability to exhibit dynamic temporal behavior. This means that the network’s state evolves over time as it processes input sequences, allowing it to capture patterns and dependencies that span across different time steps. This dynamic nature is what makes RNNs particularly powerful for tasks where understanding the context and order of data is crucial.

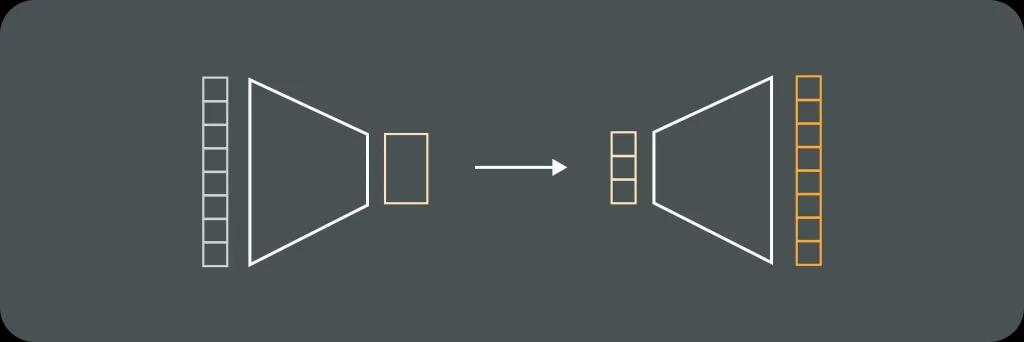

The Architecture of Recurrent Neural Networks

The architecture of an RNN consists of a hidden state that carries information from previous steps and is updated at each time step. This hidden state acts as the memory of the network, allowing it to retain information about the past. In addition to the hidden state, an RNN also has input and output layers that facilitate the processing of sequential data.
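In the common "vanilla" formulation, the update described above is often written as the pair of equations below. The symbol names ($W_{xh}$, $W_{hh}$, $W_{hy}$, $b_h$, $b_y$) and the choice of $\tanh$ as the activation are conventional assumptions, not something this article specifies.

$$
h_t = \tanh\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right), \qquad y_t = W_{hy}\, h_t + b_y
$$

Here $x_t$ is the input at time step $t$, $h_{t-1}$ is the hidden state carried over from the previous step, and $y_t$ is the output. The same weights are reused at every time step.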

Moreover, RNNs can come in different variants such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU), which address the vanishing gradient problem and improve the network’s ability to capture long-range dependencies. These variants introduce additional gates and mechanisms that regulate the flow of information within the network, enhancing its capacity to learn and remember patterns in sequential data.

The Working Mechanism of RNNs

An RNN processes a sequence step by step, carrying a hidden state forward so that the computation at each step can draw on what came before. This retained memory of past inputs is what makes RNNs well-suited to tasks such as natural language processing, time series prediction, and speech recognition.

The Role of Time Steps in RNNs

RNNs process data in a sequential manner, one time step at a time. Each time step corresponds to an element in the input sequence, and the hidden state is updated at each step based on the current input and the previous hidden state. This iterative process allows RNNs to capture information from earlier steps and use it to influence predictions at later steps.

One of the key advantages of RNNs is their ability to handle inputs of variable length, unlike traditional feedforward neural networks. This flexibility makes RNNs well-suited for tasks where the input data is not fixed in size, such as processing sentences of different lengths in natural language processing tasks.
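The step-by-step update and the variable-length property can be illustrated with a small sketch in plain Python. The helper names (`make_params`, `rnn_step`, `run_rnn`), the `tanh` activation, the random initialization, and the toy sequences are assumptions made for this example.

```python
import math
import random


def make_params(input_size, hidden_size, seed=0):
    """Create small random weights; names W_xh, W_hh, b_h are illustrative."""
    rng = random.Random(seed)

    def matrix(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    return {
        "W_xh": matrix(hidden_size, input_size),   # input -> hidden
        "W_hh": matrix(hidden_size, hidden_size),  # hidden -> hidden (the feedback loop)
        "b_h": [0.0] * hidden_size,
    }


def rnn_step(x, h_prev, p):
    """One time step: combine the current input with the previous hidden state."""
    h_new = []
    for i in range(len(h_prev)):
        total = p["b_h"][i]
        total += sum(w * xj for w, xj in zip(p["W_xh"][i], x))
        total += sum(w * hj for w, hj in zip(p["W_hh"][i], h_prev))
        h_new.append(math.tanh(total))
    return h_new


def run_rnn(sequence, p):
    """Process a sequence of any length and return the hidden state after each step."""
    h = [0.0] * len(p["b_h"])  # initial hidden state: no memory yet
    states = []
    for x in sequence:
        h = rnn_step(x, h, p)
        states.append(h)
    return states


params = make_params(input_size=1, hidden_size=3)
short_seq = [[1.0], [0.5]]
long_seq = [[1.0], [0.5], [-0.3], [0.8], [0.1]]

short_states = run_rnn(short_seq, params)
long_states = run_rnn(long_seq, params)
print(len(short_states), len(long_states))
```

The same `params` handle both sequences because the weights are reused at every step. Only the number of loop iterations changes with the input length.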

The Process of Training RNNs

Training RNNs involves adjusting the weights and biases in the network to minimize the difference between the predicted output and the desired output. This is typically done using an optimization algorithm such as gradient descent, with gradients computed by backpropagation through time (BPTT), which unrolls the network across the time steps of the sequence. The training process usually requires a large amount of labeled data to learn from and can be computationally intensive.

One of the challenges in training RNNs is the issue of vanishing or exploding gradients, which can occur when gradients either become too small to effectively update the weights or grow exponentially, leading to unstable training. Techniques such as gradient clipping and using specialized RNN architectures like Long Short-Term Memory (LSTM) networks or Gated Recurrent Units (GRUs) have been developed to mitigate these issues and improve the training stability of RNNs.
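Gradient clipping, mentioned above, is simple enough to sketch directly. One common variant rescales the whole gradient when its overall norm exceeds a threshold. The function name `clip_by_global_norm` and the flat list representation of the gradient are assumptions for this illustration. Deep learning libraries ship their own versions with different signatures.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale a flat list of gradient values so its L2 norm is at most max_norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)  # already small enough; leave values unchanged
    scale = max_norm / total_norm
    # Scaling every component by the same factor keeps the gradient's direction.
    return [g * scale for g in grads]


clipped = clip_by_global_norm([3.0, 4.0], max_norm=1.0)   # norm 5.0 is rescaled to 1.0
untouched = clip_by_global_norm([0.3, 0.4], max_norm=1.0)  # norm 0.5 is left alone
print(clipped, untouched)
```

Clipping limits the size of an update when gradients explode. It does not help with vanishing gradients, which is where gated architectures come in.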

Applications of Recurrent Neural Networks

RNNs have found numerous applications in various fields due to their ability to handle sequential data effectively.

One key application of Recurrent Neural Networks (RNNs) is in the field of speech recognition. RNNs are used to transcribe spoken language into text, enabling virtual assistants like Siri and Alexa to understand and respond to user commands accurately. By processing audio input sequentially, RNNs can effectively capture the nuances of human speech and convert it into actionable text.

RNNs in Natural Language Processing

In natural language processing, RNNs have been extensively used for tasks such as language modeling, sentiment analysis, text generation, and machine translation. Their ability to carry context from earlier words forward through a sentence makes them well-suited for these tasks, although very long-range dependencies remain difficult for plain RNNs.

Another significant application of RNNs is in the field of autonomous driving. RNNs are utilized in self-driving cars to predict the movement of other vehicles on the road, anticipate pedestrian behavior, and make real-time decisions to ensure safe navigation. By analyzing sequential data from sensors such as cameras and lidar, RNNs help autonomous vehicles react swiftly to changing traffic conditions.

RNNs in Predictive Analytics

RNNs have also proven to be effective in predictive analytics, where they are used for time series forecasting, stock market prediction, and anomaly detection. Their ability to handle temporal data and capture patterns over time makes them particularly useful in these applications.
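For time series forecasting, a common way to prepare data for a sequence model is to slice the series into fixed-size windows, each paired with the value that follows it. The sketch below shows that framing. The function name `make_windows`, the window size, and the toy series are assumptions for illustration only.

```python
def make_windows(series, window):
    """Turn a series into (past values, next value) training pairs."""
    pairs = []
    for start in range(len(series) - window):
        inputs = series[start:start + window]  # the steps the model sees
        target = series[start + window]        # the value it should predict
        pairs.append((inputs, target))
    return pairs


series = [10, 12, 13, 15, 18, 21]
pairs = make_windows(series, window=3)
for inputs, target in pairs:
    print(inputs, "->", target)
```

Each window would then be fed to the network one element per time step, with the target used as the desired output.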

Furthermore, RNNs play a crucial role in the field of healthcare for tasks such as patient monitoring and disease prediction. By analyzing sequential medical data such as vital signs, test results, and patient histories, RNNs can assist healthcare professionals in identifying early warning signs of potential health issues, enabling timely intervention and personalized treatment plans.

The Limitations and Challenges of RNNs

While RNNs have shown great promise in various tasks, they also have their limitations and face certain challenges.

Understanding the Problem of Long-Term Dependencies

One of the major challenges with RNNs is capturing long-term dependencies in data. As the length of the sequence increases, RNNs tend to suffer from the vanishing gradient problem, where the gradients become extremely small, making it difficult for the network to learn and update the weights effectively.

Long-term dependencies are crucial in tasks such as language modeling, where the context of a word can depend on words that appear far back in the sequence. Traditional RNNs struggle to capture these long-range dependencies because the error signal is multiplied by the recurrent weights and activation derivatives at every step on its way back through the sequence, so the influence of early inputs fades over time.
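A one-dimensional toy recurrence makes the vanishing gradient effect concrete. The sketch below assumes a scalar recurrence h_t = tanh(w * h_{t-1}) with no inputs, which is a deliberate simplification. The function name `gradient_through_time` and the values of `w` and `h0` are chosen only for illustration.

```python
import math


def gradient_through_time(w, steps, h0=0.5):
    """Return d h_T / d h_0 for the scalar recurrence h_t = tanh(w * h_{t-1})."""
    h = h0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: d tanh(w * h_prev) / d h_prev = w * (1 - tanh(w * h_prev)**2)
        grad *= w * (1.0 - h * h)
    return grad


grad_5 = gradient_through_time(w=0.5, steps=5)
grad_50 = gradient_through_time(w=0.5, steps=50)
print(grad_5, grad_50)  # the 50-step gradient is many orders of magnitude smaller

# Without the squashing activation, each step multiplies the gradient by w itself,
# so a weight larger than 1 makes it grow instead of shrink (exploding gradients).
linear_grad_50 = 1.5 ** 50
print(linear_grad_50)
```

In a real RNN the scalar `w` is replaced by a weight matrix, but the same repeated multiplication drives the behavior.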

Overcoming Challenges in RNNs

Researchers have proposed several solutions to overcome the challenges faced by RNNs, such as using Gated Recurrent Units (GRUs) or Long Short-Term Memory (LSTM) units. These mechanisms allow RNNs to retain and update information over longer sequences and alleviate the vanishing gradient problem.

GRUs and LSTMs incorporate gating mechanisms that regulate the flow of information within the network, enabling better long-term memory retention. By selectively updating information based on the input, these architectures address the limitations of traditional RNNs and improve their ability to model dependencies in sequential data.
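To make the gating idea concrete, here is a minimal sketch of a single GRU step with a scalar input and a scalar hidden state. The parameter names (`w_z`, `u_z`, and so on) and their values are hypothetical. The blend `(1 - z) * h_prev + z * h_cand` is one of two conventions found in the literature, and some references swap the roles of `z` and `1 - z`.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def gru_step(x, h_prev, p):
    """One GRU update for a scalar input x and scalar hidden state h_prev."""
    z = sigmoid(p["w_z"] * x + p["u_z"] * h_prev + p["b_z"])  # update gate
    r = sigmoid(p["w_r"] * x + p["u_r"] * h_prev + p["b_r"])  # reset gate
    # Candidate state: the reset gate decides how much of the old state to consult.
    h_cand = math.tanh(p["w_h"] * x + p["u_h"] * (r * h_prev) + p["b_h"])
    # The update gate blends old memory with the candidate.
    return (1.0 - z) * h_prev + z * h_cand


base = {"w_z": 0.5, "u_z": 0.5, "b_z": 0.0,
        "w_r": 0.5, "u_r": 0.5, "b_r": 0.0,
        "w_h": -1.0, "u_h": 0.5, "b_h": 0.0}

# A strongly negative update-gate bias closes the gate: the old state is kept.
kept = gru_step(1.0, 0.7, {**base, "b_z": -20.0})
# A strongly positive bias opens the gate: the state is overwritten by the candidate.
overwritten = gru_step(1.0, 0.7, {**base, "b_z": 20.0})
print(kept, overwritten)
```

When the update gate stays near zero, the hidden state passes through almost unchanged, which is how gated units can preserve information across many steps.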

In conclusion, Recurrent Neural Networks (RNNs) are a powerful type of neural network that excels at processing sequential data. They have found numerous applications in various fields and continue to be an active area of research. Despite their limitations, ongoing advancements in RNN architectures and training techniques hold great potential for further improving their performance and applicability in real-world scenarios.
