Building LLMOps Pipelines on AWS

Large language model operations (LLMOps), the practice of deploying, monitoring, and maintaining large language models (LLMs) in production, is becoming increasingly important to business processes. As organizations try to use LLMs more efficiently, they need to know how to build and manage LLMOps pipelines on a robust cloud platform such as AWS. This article explains what these pipelines are, why they matter, and the steps involved in setting them up on AWS.

Understanding LLMOps Pipelines

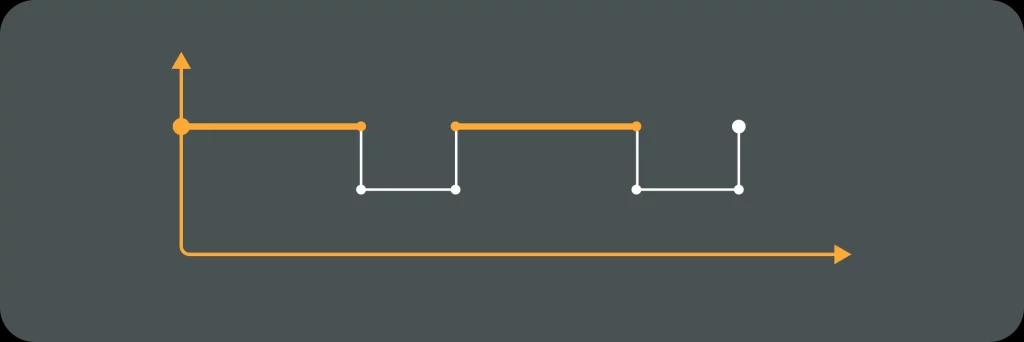

LLMOps pipelines refer to the workflow and processes that support the deployment, monitoring, and management of large language models in production. They encompass various stages, from data collection and preprocessing to model deployment and performance evaluation.

Definition of LLMOps Pipelines

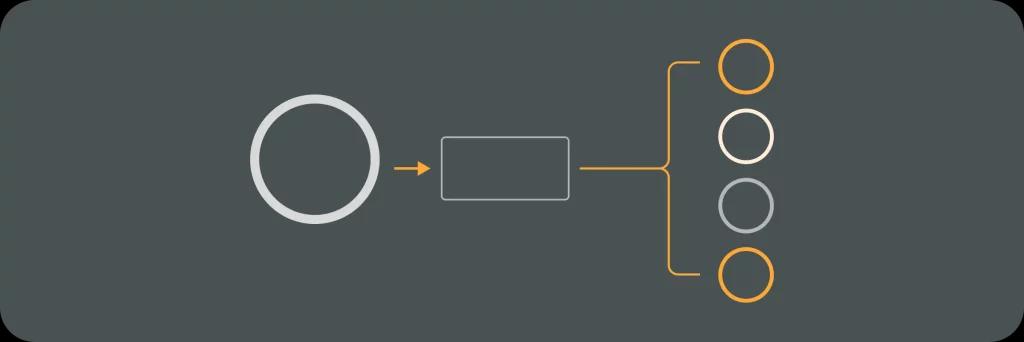

At its core, an LLMOps pipeline is a structured framework for developing, deploying, and operating large language models. It connects model development with the operational work needed to run these models at scale. An effective LLMOps pipeline typically includes stages for data management, model training or fine-tuning, validation, and deployment.

These components work together to ensure that models not only perform well under test conditions but also continue to do so when they are exposed to new data in real-world scenarios. The pipeline also incorporates feedback loops from the monitoring phase, allowing for continuous improvement and adaptation of the models based on their performance metrics and user interactions.

Importance of LLMOps Pipelines in Machine Learning

LLMOps pipelines matter because organizations increasingly rely on AI and machine learning solutions, which raises the need for streamlined processes for deploying and managing models. A well-defined pipeline gives teams a clear, repeatable workflow, which reduces errors and keeps model iterations consistent.

Moreover, they facilitate faster iteration times and smoother collaboration between data scientists and engineering teams, bolstering an organization’s overall agility in responding to market changes and technological advancements. By standardizing processes and utilizing automation tools, LLMOps pipelines also help in reducing the manual workload, allowing teams to focus on more strategic tasks such as model innovation and feature enhancement.

In addition, the implementation of robust monitoring systems within LLMOps pipelines is crucial for tracking model performance over time. These systems can alert teams to any degradation in model accuracy or shifts in data distribution, enabling proactive adjustments. This level of vigilance not only enhances the reliability of deployed models but also builds trust with stakeholders who depend on accurate and timely insights generated by these AI systems.
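One common way to detect a shift in data distribution is the population stability index (PSI), which compares the binned distribution of a feature in live traffic against a reference sample. The sketch below is a minimal, dependency-free version; the choice of feature (prompt length), the 10 equal-width bins, and the 0.2 alert threshold are illustrative assumptions, not values prescribed by any AWS service.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a reference sample (expected) and a live sample (actual).

    Bin edges are equal-width over the reference range; live values outside
    that range are clipped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for a constant reference

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from causing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


if __name__ == "__main__":
    # Hypothetical data: prompt lengths from a reference window and a shifted live window
    reference = [float(n) for n in range(100)]
    live = [float(n) + 50 for n in range(100)]
    psi = population_stability_index(reference, live)
    if psi > 0.2:  # widely used rule of thumb; tune for your own data
        print(f"Drift alert: PSI={psi:.2f}")
```

A PSI near zero means the two windows look alike; in a real pipeline the live window would be read from inference logs rather than generated in code.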

AWS and its Role in LLMOps Pipelines

Amazon Web Services (AWS) provides a broad set of managed services that suit LLMOps pipelines, covering data storage, model training, deployment, and workflow orchestration.

Overview of AWS Services

AWS offers several services that are useful when building LLMOps pipelines. Key services include Amazon S3 for data storage, Amazon SageMaker for model training and deployment, and AWS Lambda for serverless compute. In addition, AWS Glue handles data preparation (extract, transform, load), and AWS Step Functions orchestrates multi-step workflows.
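To show how these services can fit together, here is a minimal sketch that generates an AWS Step Functions state machine definition in Amazon States Language: an AWS Glue job prepares data, a SageMaker training job runs, and a Lambda function evaluates the result. It uses Step Functions service integrations, where the `.sync` suffix makes the state wait for the Glue or SageMaker job to finish. The Glue job name, function ARN, and training-job parameters are hypothetical placeholders; a real training step needs the full CreateTrainingJob request (algorithm image, role, input and output locations, instance configuration, stopping condition).

```python
import json


def build_state_machine(
    glue_job_name: str, training_job_request: dict, eval_function_arn: str
) -> dict:
    """Amazon States Language definition: Glue prep, then SageMaker training, then Lambda eval."""
    return {
        "Comment": "Prepare data, train or fine-tune, evaluate",
        "StartAt": "PrepareData",
        "States": {
            "PrepareData": {
                "Type": "Task",
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {"JobName": glue_job_name},
                "Next": "TrainModel",
            },
            "TrainModel": {
                "Type": "Task",
                "Resource": "arn:aws:states:::sagemaker:createTrainingJob.sync",
                "Parameters": training_job_request,  # hypothetical: full CreateTrainingJob fields
                "Next": "EvaluateModel",
            },
            "EvaluateModel": {
                "Type": "Task",
                "Resource": "arn:aws:states:::lambda:invoke",
                # "Payload.$": "$" forwards the whole state input to the function
                "Parameters": {"FunctionName": eval_function_arn, "Payload.$": "$"},
                "End": True,
            },
        },
    }


if __name__ == "__main__":
    definition = build_state_machine(
        "prepare-training-data",  # hypothetical Glue job
        {"TrainingJobName": "llm-finetune-demo"},  # incomplete placeholder request
        "arn:aws:lambda:us-east-1:123456789012:function:evaluate-model",  # placeholder ARN
    )
    print(json.dumps(definition, indent=2))
```

The resulting JSON string is what you would supply as the state machine definition, for example through the console, a CloudFormation template, or the boto3 `create_state_machine` call.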

These services integrate with one another (for example, SageMaker training jobs read their input data directly from S3), so organizations can build scalable pipelines that handle the complexity of working with large language models. Amazon SageMaker, for instance, supports not only model training but also hyperparameter tuning, and it provides built-in algorithms that can be applied directly to datasets. This shortens the path from development to deployment and lets teams iterate quickly.
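As an illustration of SageMaker's hyperparameter tuning support, the sketch below builds the `HyperParameterTuningJobConfig` portion of a `CreateHyperParameterTuningJob` request. The objective metric `validation:loss` and the hyperparameters `learning_rate` and `epochs` are hypothetical; they must match names your training script actually reads and metrics it actually emits. The companion `TrainingJobDefinition` (container, role, data channels) is omitted.

```python
def tuning_job_config(max_jobs: int = 20, max_parallel: int = 2) -> dict:
    """Return a HyperParameterTuningJobConfig dict for a Bayesian search."""
    if not 1 <= max_parallel <= max_jobs:
        raise ValueError("need 1 <= max_parallel <= max_jobs")
    return {
        "Strategy": "Bayesian",
        "HyperParameterTuningJobObjective": {
            "Type": "Minimize",
            "MetricName": "validation:loss",  # hypothetical metric name
        },
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,
            "MaxParallelTrainingJobs": max_parallel,
        },
        "ParameterRanges": {
            # SageMaker expects range bounds as strings
            "ContinuousParameterRanges": [
                {"Name": "learning_rate", "MinValue": "1e-5",
                 "MaxValue": "1e-3", "ScalingType": "Logarithmic"},
            ],
            "IntegerParameterRanges": [
                {"Name": "epochs", "MinValue": "1",
                 "MaxValue": "5", "ScalingType": "Linear"},
            ],
        },
    }
```

Keeping `MaxParallelTrainingJobs` small relative to the total lets a Bayesian search learn from completed jobs before choosing the next candidates, at the cost of a longer wall-clock time.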

Benefits of Using AWS for LLMOps Pipelines

Using AWS for LLMOps pipelines has several advantages. First, AWS can scale resources up or down, so users can adjust computational capacity to match demand.
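For SageMaker real-time endpoints, demand-based scaling is typically configured through Application Auto Scaling with a target-tracking policy. The sketch below only builds the keyword arguments for the `register_scalable_target` and `put_scaling_policy` calls of the boto3 `application-autoscaling` client, so it runs without AWS credentials. The endpoint name, capacity limits, and target of 70 invocations per instance per minute are hypothetical values to tune against your own load tests.

```python
def endpoint_autoscaling_requests(
    endpoint_name: str,
    variant_name: str = "AllTraffic",
    min_instances: int = 1,
    max_instances: int = 4,
    target_invocations_per_instance: float = 70.0,
) -> tuple[dict, dict]:
    """Build (register_scalable_target kwargs, put_scaling_policy kwargs)."""
    if not 1 <= min_instances <= max_instances:
        raise ValueError("need 1 <= min_instances <= max_instances")
    common = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
    }
    target = {**common, "MinCapacity": min_instances, "MaxCapacity": max_instances}
    policy = {
        **common,
        "PolicyName": f"{endpoint_name}-invocations-target",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
            "ScaleOutCooldown": 60,   # seconds; react quickly to load spikes
            "ScaleInCooldown": 300,   # seconds; remove capacity more cautiously
        },
    }
    return target, policy


if __name__ == "__main__":
    target, policy = endpoint_autoscaling_requests("llm-chat-endpoint")  # hypothetical name
    # With credentials configured, these would be passed as:
    #   client = boto3.client("application-autoscaling")
    #   client.register_scalable_target(**target)
    #   client.put_scaling_policy(**policy)
    print(target["ResourceId"])
```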

Second, the pay-as-you-go pricing model means organizations pay only for what they use, which reduces upfront investment. Third, built-in security features help businesses manage models and data securely and protect sensitive information from breaches. Finally, AWS provides a rich machine learning ecosystem, including pre-trained models and support for frameworks such as TensorFlow and PyTorch, which can be deployed on AWS infrastructure. This speeds up development and lets teams adopt recent advances in AI without building and maintaining all of the underlying infrastructure themselves.

Moreover, AWS's global infrastructure lets organizations run workloads in Regions close to their users, which reduces latency for applications that need real-time processing. Deploying models across multiple Regions can also improve performance and reliability for a global user base. AWS also releases new services and features regularly, which can extend an organization's LLMOps capabilities over time.

Steps to Build LLMOps Pipelines on AWS

Building an effective LLMOps pipeline on AWS requires careful planning and execution. The main steps are planning and designing the pipeline, setting up the AWS environment, and implementing the pipeline.

Planning and Designing the Pipeline

The first step in developing an LLMOps pipeline is to strategically plan and design the workflow. This phase should begin with defining the objectives, key performance indicators, and the scope of the project. Identifying the data sources and determining how to preprocess and store the data is also essential at this stage.

Collaboration with stakeholders ensures that the pipeline meets business needs while also maintaining a focus on technical feasibility. Visualization tools can help outline the pipeline’s architecture, providing clarity on how components will interact. Engaging with data scientists, engineers, and business analysts during this phase can yield insights into potential challenges and opportunities, leading to a more robust design. Additionally, considering scalability from the outset allows the pipeline to adapt to increasing data volumes and complexity over time, which is critical in the fast-evolving landscape of machine learning.

Setting Up the AWS Environment

Once the planning stage is complete, the next step is to set up the AWS environment. This includes creating an AWS account and configuring the necessary services like Amazon S3 for storage, Amazon SageMaker for ML model management, and AWS IAM for managing access permissions.

Follow best practices in this step, such as enabling encryption for data at rest and in transit to protect sensitive information; properly configured services lay a solid foundation for the pipeline. Defining the infrastructure in AWS CloudFormation templates makes deployments repeatable, and because templates are text files they can be kept under version control and used to replicate the environment. Monitoring tools such as Amazon CloudWatch should also be configured to track the pipeline's performance and health, providing near-real-time insights that inform adjustments and optimizations.
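The encryption and infrastructure-as-code advice can be combined in a single template. Below is a minimal sketch that generates a CloudFormation template (as JSON) for a training-data bucket with default SSE-KMS encryption, versioning, blocked public access, and a bucket policy that denies any request not made over TLS. The logical resource names are hypothetical; because no KMS key ID is given, S3 uses the AWS managed key for S3, so supply your own key if you need tighter control over key policies.

```python
import json


def data_bucket_template() -> dict:
    """CloudFormation template for an encrypted, TLS-only S3 data bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Encrypted, versioned S3 bucket for LLMOps training data",
        "Resources": {
            "DataBucket": {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    # Encryption at rest: SSE-KMS by default for every new object
                    "BucketEncryption": {
                        "ServerSideEncryptionConfiguration": [
                            {"ServerSideEncryptionByDefault": {"SSEAlgorithm": "aws:kms"}}
                        ]
                    },
                    "VersioningConfiguration": {"Status": "Enabled"},
                    "PublicAccessBlockConfiguration": {
                        "BlockPublicAcls": True,
                        "BlockPublicPolicy": True,
                        "IgnorePublicAcls": True,
                        "RestrictPublicBuckets": True,
                    },
                },
            },
            "DataBucketPolicy": {
                "Type": "AWS::S3::BucketPolicy",
                "Properties": {
                    "Bucket": {"Ref": "DataBucket"},
                    "PolicyDocument": {
                        "Version": "2012-10-17",
                        "Statement": [
                            {
                                # Encryption in transit: reject non-TLS requests
                                "Sid": "DenyInsecureTransport",
                                "Effect": "Deny",
                                "Principal": "*",
                                "Action": "s3:*",
                                "Resource": [
                                    {"Fn::GetAtt": ["DataBucket", "Arn"]},
                                    {"Fn::Sub": "${DataBucket.Arn}/*"},
                                ],
                                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
                            }
                        ],
                    },
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(data_bucket_template(), indent=2))
```

The output can be saved as `template.json`, committed to Git with the rest of the pipeline code, and deployed with `aws cloudformation deploy --template-file template.json --stack-name <your-stack>`.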

Implementing the LLMOps Pipeline

With the AWS environment in place, the next phase is the implementation of the LLMOps pipeline. This involves integrating various services and ensuring data flows seamlessly through the designed architecture. Data ingestion processes need to be established to ensure that updated data sets are available for model training.
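A common way to make newly arrived data available to the pipeline is an S3 event notification that invokes a Lambda function. The handler below is a minimal sketch: it parses the standard S3 event records, keeps newly created objects under a hypothetical `training-data/` prefix, and returns their URIs. Starting the downstream workflow is left as a comment, since that call depends on how your pipeline is orchestrated.

```python
from urllib.parse import unquote_plus

TRAINING_PREFIX = "training-data/"  # hypothetical key prefix for new training data


def lambda_handler(event: dict, context: object) -> dict:
    """Collect s3:// URIs of newly created training-data objects from an S3 event."""
    new_objects = []
    for record in event.get("Records", []):
        # Ignore deletions and other event types
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # keys arrive URL-encoded
        if key.startswith(TRAINING_PREFIX):
            new_objects.append(f"s3://{bucket}/{key}")
    # In a real pipeline, this is where you would start the training workflow
    # (for example, a Step Functions execution) for the new objects.
    return {"new_objects": new_objects}
```

S3 URL-encodes object keys in event notifications (a space arrives as `+`), which is why the key is decoded with `unquote_plus` before use.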

Moreover, continuous integration and continuous deployment (CI/CD) practices can be implemented to automate the testing and deployment of models, ensuring rapid iterations and improvements based on received feedback. Incorporating version control systems, such as Git, into the pipeline can enhance collaboration among team members and maintain a history of changes made to models and code. Additionally, implementing robust logging and monitoring practices will help in diagnosing issues quickly, facilitating a smoother operational flow and minimizing downtime. This proactive approach not only enhances the reliability of the pipeline but also fosters a culture of continuous improvement within the team.
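In a CI/CD pipeline, the automated test stage often ends with a promotion gate: the candidate model is deployed only if it does not regress against the model currently in production. The sketch below shows such a gate as a function a CI job could call; the two metrics and the tolerance values are hypothetical and would come from your own evaluation suite and KPIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalResult:
    accuracy: float        # task accuracy on a fixed evaluation set (higher is better)
    p95_latency_ms: float  # 95th-percentile response latency (lower is better)


def should_promote(
    candidate: EvalResult,
    baseline: EvalResult,
    max_accuracy_drop: float = 0.01,
    max_latency_increase: float = 0.10,
) -> tuple[bool, list[str]]:
    """Return (promote?, reasons the candidate was blocked)."""
    reasons = []
    if candidate.accuracy < baseline.accuracy - max_accuracy_drop:
        reasons.append(
            f"accuracy {candidate.accuracy:.3f} is below baseline {baseline.accuracy:.3f}"
        )
    if candidate.p95_latency_ms > baseline.p95_latency_ms * (1 + max_latency_increase):
        reasons.append(
            f"p95 latency {candidate.p95_latency_ms:.0f} ms exceeds the allowed increase"
        )
    return not reasons, reasons


if __name__ == "__main__":
    # Hypothetical numbers; a real job would load these from evaluation output
    ok, why = should_promote(EvalResult(0.91, 420.0), EvalResult(0.90, 400.0))
    print("promote" if ok else "blocked: " + "; ".join(why))
```

In CI, the calling script would exit with a non-zero status (for example `sys.exit(0 if ok else 1)`) so that a blocked candidate fails the build.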

Managing and Optimizing LLMOps Pipelines on AWS

After deploying an LLMOps pipeline, managing and optimizing its performance is crucial to ensure its continued effectiveness. This phase involves monitoring, troubleshooting, and refining the pipeline’s operations continuously.

Monitoring the LLMOps Pipelines

Monitoring tools are essential for tracking the performance of LLMOps pipelines. Amazon CloudWatch collects logs and metrics, which helps teams spot performance bottlenecks. With these insights, teams can address issues before they escalate.
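One low-overhead way to get custom metrics into CloudWatch from a Lambda function is the CloudWatch embedded metric format (EMF): the function writes a structured JSON log line, and CloudWatch extracts the declared metrics from it. The sketch below builds such a record for a single model invocation; the namespace, dimension, and metric names are hypothetical.

```python
import json
import time


def emf_record(namespace: str, model_name: str, latency_ms: float, output_tokens: int) -> dict:
    """Build one CloudWatch embedded-metric-format log record."""
    return {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # milliseconds since the epoch
            "CloudWatchMetrics": [
                {
                    "Namespace": namespace,
                    "Dimensions": [["ModelName"]],
                    "Metrics": [
                        {"Name": "LatencyMs", "Unit": "Milliseconds"},
                        {"Name": "OutputTokens", "Unit": "Count"},
                    ],
                }
            ],
        },
        # Dimension and metric values live at the top level of the record
        "ModelName": model_name,
        "LatencyMs": latency_ms,
        "OutputTokens": output_tokens,
    }


if __name__ == "__main__":
    # In Lambda, printing the record to stdout sends it to CloudWatch Logs
    print(json.dumps(emf_record("LLMOps/Inference", "chat-model-v2", 812.5, 256)))
```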

Regularly comparing model performance against the KPIs defined during planning shows whether the pipeline is delivering what it was designed to deliver in production.
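As a minimal sketch of such a check, the function below summarizes one window of request data into two example KPIs, 95th-percentile latency and error rate, and lists any that breach their targets. The targets (800 ms and 1%) are hypothetical placeholders for the KPIs defined during planning, and the percentile uses the simple nearest-rank method.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def kpi_report(
    latencies_ms: Sequence[float],
    error_count: int,
    max_p95_ms: float = 800.0,
    max_error_rate: float = 0.01,
) -> dict:
    """Summarize one window of requests (one latency value per request)."""
    p95 = percentile(latencies_ms, 95)
    error_rate = error_count / len(latencies_ms)
    breaches = []
    if p95 > max_p95_ms:
        breaches.append("p95_latency")
    if error_rate > max_error_rate:
        breaches.append("error_rate")
    return {"p95_latency_ms": p95, "error_rate": error_rate, "breaches": breaches}
```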

Troubleshooting Common Issues

Despite the best planning and execution, pipelines may encounter challenges. Common issues include data quality problems and model drift. Incorporating automated alerts for data anomalies can help teams respond swiftly.
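Automated alerts for data anomalies can start with simple batch-level checks that run before data reaches training or evaluation. The sketch below assumes each record is a dict with hypothetical `prompt` and `response` string fields and flags missing values, oversized prompts, and duplicates; the thresholds are illustrative. In a pipeline, a non-empty result could fail the ingestion step or be published as a CloudWatch metric that an alarm watches.

```python
def check_batch(
    records: list[dict],
    max_missing_rate: float = 0.01,
    max_duplicate_rate: float = 0.05,
    max_prompt_chars: int = 20_000,
) -> list[str]:
    """Return human-readable alerts for one batch of prompt/response records."""
    if not records:
        return ["empty batch"]
    total = len(records)

    def blank(value) -> bool:
        return not isinstance(value, str) or not value.strip()

    missing = sum(1 for r in records if blank(r.get("prompt")) or blank(r.get("response")))
    oversized = sum(
        1 for r in records
        if isinstance(r.get("prompt"), str) and len(r["prompt"]) > max_prompt_chars
    )
    duplicates = total - len({(r.get("prompt"), r.get("response")) for r in records})

    alerts = []
    if missing / total > max_missing_rate:
        alerts.append(f"{missing}/{total} records missing prompt or response")
    if oversized:
        alerts.append(f"{oversized} prompts longer than {max_prompt_chars} characters")
    if duplicates / total > max_duplicate_rate:
        alerts.append(f"{duplicates}/{total} duplicate records")
    return alerts
```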

Moreover, a robust debugging approach, built on structured logs, versioned data and models, and reproducible pipeline runs, enables rapid identification and resolution of issues, maintaining the integrity of the pipeline.

Optimizing the Performance of LLMOps Pipelines

Optimization should be an ongoing effort, focusing on efficiency and speed. This might involve refining model hyperparameters, retraining models with updated datasets, or configuring AWS resources to reduce compute costs.
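One concrete way to reduce training compute cost on SageMaker is Managed Spot Training, which runs training jobs on spare EC2 capacity and can resume from checkpoints if instances are reclaimed. The sketch below builds the spot-related fields of a `CreateTrainingJob` request for merging into a full request; the runtime limits and checkpoint URI are hypothetical, and the training script must itself save and reload checkpoints from the local checkpoint path for resumption to work.

```python
def spot_training_fields(
    checkpoint_s3_uri: str,
    max_runtime_s: int = 4 * 3600,
    max_wait_s: int = 8 * 3600,
) -> dict:
    """Spot-related CreateTrainingJob fields, to merge into a full request."""
    if max_wait_s < max_runtime_s:
        # The wait limit covers time waiting for capacity plus running time
        raise ValueError("max_wait_s must be >= max_runtime_s")
    if not checkpoint_s3_uri.startswith("s3://"):
        raise ValueError("checkpoint location must be an s3:// URI")
    return {
        "EnableManagedSpotTraining": True,
        "StoppingCondition": {
            "MaxRuntimeInSeconds": max_runtime_s,
            "MaxWaitTimeInSeconds": max_wait_s,
        },
        "CheckpointConfig": {
            "S3Uri": checkpoint_s3_uri,
            "LocalPath": "/opt/ml/checkpoints",  # SageMaker's default checkpoint directory
        },
    }
```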

Regular assessments and benchmarking against industry standards can identify areas for improvement, ensuring that the LLMOps pipelines deliver the best possible outcomes.

Security Considerations for LLMOps Pipelines on AWS

Security is an integral aspect of developing LLMOps pipelines. Given the sensitive nature of data used in machine learning, implementing robust security measures is crucial to protect against data breaches and unauthorized access.

AWS Security Features for LLMOps Pipelines

AWS offers a comprehensive suite of security features that can help protect LLMOps pipelines. Services such as AWS Identity and Access Management (IAM) allow organizations to control access to resources effectively, ensuring that only authorized personnel can manipulate sensitive data and models.

Additionally, AWS provides encryption capabilities, both at rest and in transit, safeguarding data from potential threats. Regular security audits and compliance checks further enhance the security posture of LLMOps pipelines on AWS.

Best Practices for Securing LLMOps Pipelines

To maintain a secure environment, follow established best practices. These include applying the principle of least privilege by granting users only the permissions they need, providing regular security training for staff, and keeping all software and frameworks updated to protect against known vulnerabilities.
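To make least privilege concrete, here is a minimal sketch of an IAM policy document for a hypothetical inference service that may invoke exactly one SageMaker endpoint and read objects under one S3 prefix, and nothing else. The endpoint ARN, bucket, and prefix are placeholders.

```python
import json


def inference_service_policy(endpoint_arn: str, bucket: str, prefix: str) -> dict:
    """IAM policy document scoped to one endpoint and one S3 prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeSingleEndpoint",
                "Effect": "Allow",
                "Action": "sagemaker:InvokeEndpoint",
                "Resource": endpoint_arn,
            },
            {
                # Read-only access to objects under one prefix; no list or write actions
                "Sid": "ReadPromptTemplates",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


if __name__ == "__main__":
    policy = inference_service_policy(
        "arn:aws:sagemaker:us-east-1:123456789012:endpoint/llm-chat-endpoint",  # placeholder
        "example-llmops-bucket",
        "prompt-templates/",
    )
    print(json.dumps(policy, indent=2))
```

Because no statement uses a wildcard action or resource, a compromised service holding this policy could reach only the one endpoint and the one prefix.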

Furthermore, documenting security protocols and conducting routine reviews can help identify weaknesses, ensuring an organization remains vigilant against emerging threats.
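Part of a routine review can be automated. The sketch below is a simple, hypothetical linter that scans an IAM policy document for `Allow` statements with wildcard actions (such as `*` or `s3:*`) or an unrestricted `*` resource. It is intentionally narrow; AWS also offers managed tooling, such as IAM Access Analyzer policy validation, for deeper checks.

```python
def find_broad_grants(policy: dict) -> list[str]:
    """Flag Allow statements that use wildcard actions or a '*' resource."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be written as an object
        statements = [statements]

    findings = []
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue  # Deny statements narrow access, so they are not flagged
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        actions = [actions] if isinstance(actions, str) else actions
        resources = [resources] if isinstance(resources, str) else resources
        for action in actions:
            if action == "*" or action.endswith(":*"):
                findings.append(f"statement {i}: wildcard action {action!r}")
        if "*" in resources:
            findings.append(f"statement {i}: unrestricted resource '*'")
    return findings
```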

In conclusion, building LLMOps pipelines on AWS offers a structured approach to managing large language models efficiently. By understanding the components involved, leveraging AWS services, and focusing on security measures, organizations can create robust pipelines that drive significant value in their machine learning initiatives.
