DevOps Definitions: Container Runtime

In the world of DevOps, containerization has become a popular approach for managing applications and their dependencies. Among the key components of containerization is the container runtime. In this article, we will explore the basics of container runtime, its different types, its architecture, and the benefits it brings to DevOps practices.

Understanding the Basics of Container Runtime

Before diving into the details, let’s start by defining what a container runtime is. In simple terms, a container runtime is the software responsible for running and managing containers on a host. It unpacks a container image, sets up an isolated environment for it (on Linux, typically using kernel features such as namespaces and control groups), and starts and supervises the container’s processes.

The primary role of container runtime is to enable the execution and management of containers, allowing them to be deployed across different infrastructure environments seamlessly. It abstracts the complexities of interacting with the host system, providing a consistent and isolated environment for containers.

What is Container Runtime?

Container runtime serves as the bridge between container images, which contain the application code and its dependencies, and the underlying operating system and hardware. It ensures that containers have access to the necessary resources, such as CPU, memory, and storage, while also managing networking and security aspects.

The Role of Container Runtime in DevOps

Container runtime plays a crucial role in enabling the adoption of DevOps practices. By providing a standardized and portable environment, it facilitates the seamless deployment and scaling of applications. It allows DevOps teams to focus on developing and delivering software, without worrying about the intricacies of the underlying infrastructure.

Furthermore, container runtime offers additional benefits to DevOps teams. One of the key advantages is the ability to easily replicate and distribute containers across different environments. This allows for consistent testing and deployment, ensuring that applications behave consistently regardless of the underlying infrastructure.

In addition, the container runtime helps improve security. It isolates containers from each other and from the host system, which limits how far a compromise in one container can spread. Because containers share the host kernel, this isolation reduces risk rather than eliminating it. The same mechanisms enable fine-grained control over resource allocation, so that a container cannot consume more CPU or memory than it has been allocated, which leads to better resource utilization.
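To make the idea of resource limits concrete, here is a minimal sketch that builds the `linux.resources` fragment of an OCI runtime `config.json`, the configuration format read by low-level runtimes such as runc. The helper name `build_resources` and the example values are assumptions made for illustration; the field names (`memory.limit`, `cpu.period`, `cpu.quota`) follow the OCI runtime specification.

```python
import json


def build_resources(memory_mib: int, cpus: float, period_us: int = 100_000) -> dict:
    """Return a linux.resources fragment that caps memory and CPU time.

    build_resources is a hypothetical helper, not part of any runtime's API.
    """
    return {
        # Memory limit is expressed in bytes.
        "memory": {"limit": memory_mib * 1024 * 1024},
        "cpu": {
            # Length of one scheduling period, in microseconds.
            "period": period_us,
            # CPU time the container may use per period, in microseconds.
            "quota": int(cpus * period_us),
        },
    }


if __name__ == "__main__":
    fragment = {"linux": {"resources": build_resources(memory_mib=256, cpus=0.5)}}
    print(json.dumps(fragment, indent=2))
```

With these values the container would be limited to 256 MiB of memory and half of one CPU: 50,000 microseconds of CPU time in every 100,000 microsecond period.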

Different Types of Container Runtime

There are several container runtime options available in the market, each with its own strengths and features. Let’s explore some of the most popular ones:

Docker: The Most Common Container Runtime

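Docker is the most widely known container platform. It provides a comprehensive solution for building, packaging, distributing, and running containers. Strictly speaking, Docker is more than a runtime: Docker Engine delegates the actual running of containers to containerd, which in turn uses the low-level runtime runc. Docker’s ease of use, extensive ecosystem, and strong community support make it a preferred choice for many organizations.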

Containerd: A Lightweight Option

Containerd is a lightweight container runtime that focuses on core container functionality: managing images, storage, and the container lifecycle. It originated as a component of Docker and leaves out developer-facing features such as image building, resulting in a more streamlined and minimalistic runtime. Containerd is often used as a building block for higher-level container platforms, including Docker itself and Kubernetes.

CRI-O: Designed for Kubernetes

CRI-O is specifically designed to work seamlessly with Kubernetes, the leading container orchestration platform. It aims to provide a runtime optimized for Kubernetes environments, focusing on performance and security. CRI-O adheres strictly to the Kubernetes Container Runtime Interface (CRI) standards.

Aside from Docker, Containerd, and CRI-O, there are other notable container runtimes worth mentioning. One such runtime is rkt, developed by CoreOS. Rkt became known for its security-focused design and simplicity. It follows the principle of “Do One Thing and Do It Well,” which made it an attractive option for those who prioritize security and simplicity in their container environments. The rkt project has since been archived and is no longer actively developed, so today it is mainly of historical interest.

Another interesting container runtime is LXD, which stands for Linux Container Daemon. LXD is a system container manager that provides a more traditional virtual machine-like experience. It offers a full Linux system inside a container, allowing users to run multiple applications or services within a single container. LXD is often used in scenarios where the isolation of a virtual machine is desired, but with the efficiency and performance benefits of containers.

The Architecture of Container Runtime

Understanding the architecture of container runtime is essential for gaining a deeper understanding of its inner workings. Let’s explore the key components that make up the container runtime:

Understanding the Container Runtime Interface

The Container Runtime Interface (CRI) defines the API between Kubernetes and the container runtime. It serves as the contract that allows Kubernetes to interact with different container runtimes seamlessly. By adhering to the CRI standards, container runtimes ensure compatibility with Kubernetes, enabling smooth integration.
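The contract idea can be sketched in a few lines. The real CRI is a gRPC API defined with Protocol Buffers; the Python below is only an illustrative analogy. The method names are snake_case renderings of a small subset of the CRI `RuntimeService` calls (`RunPodSandbox`, `CreateContainer`, `StartContainer`, `StopContainer`), while `FakeRuntime` and `launch` are hypothetical names invented for this example.

```python
from abc import ABC, abstractmethod


class RuntimeService(ABC):
    """Illustrative subset of the CRI RuntimeService, not the real gRPC API."""

    @abstractmethod
    def run_pod_sandbox(self, name: str) -> str: ...

    @abstractmethod
    def create_container(self, sandbox_id: str, image: str) -> str: ...

    @abstractmethod
    def start_container(self, container_id: str) -> None: ...

    @abstractmethod
    def stop_container(self, container_id: str) -> None: ...


class FakeRuntime(RuntimeService):
    """Hypothetical in-memory runtime used only to show the call sequence."""

    def __init__(self) -> None:
        self.sandboxes = {}
        self.containers = {}

    def run_pod_sandbox(self, name: str) -> str:
        sandbox_id = f"sandbox-{len(self.sandboxes)}"
        self.sandboxes[sandbox_id] = name
        return sandbox_id

    def create_container(self, sandbox_id: str, image: str) -> str:
        if sandbox_id not in self.sandboxes:
            raise KeyError(f"unknown sandbox: {sandbox_id}")
        container_id = f"ctr-{len(self.containers)}"
        self.containers[container_id] = {
            "sandbox": sandbox_id,
            "image": image,
            "state": "created",
        }
        return container_id

    def start_container(self, container_id: str) -> None:
        self.containers[container_id]["state"] = "running"

    def stop_container(self, container_id: str) -> None:
        self.containers[container_id]["state"] = "exited"


def launch(runtime: RuntimeService, pod: str, image: str) -> str:
    """Caller code depends only on the interface, not on a specific runtime."""
    sandbox_id = runtime.run_pod_sandbox(pod)
    container_id = runtime.create_container(sandbox_id, image)
    runtime.start_container(container_id)
    return container_id
```

Because `launch` is written against the abstract interface, any runtime that implements the same methods can be swapped in without changing the caller, which is the property the CRI gives Kubernetes.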

The Role of the Image Service

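The image service handles the management of container images on the node. In the CRI, it is responsible for pulling images from a registry, listing and inspecting the images stored locally, and removing images that are no longer needed. Pushing images to a registry is handled by build and distribution tooling rather than by this service. The container runtime relies on the image service to make the required images available during container deployment.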
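A minimal sketch of an image service follows, assuming an in-memory dictionary stands in for a remote registry. The class name `ImageStore` and its storage layout are hypothetical; the method names loosely mirror the CRI `ImageService` calls `PullImage`, `ListImages`, `ImageStatus`, and `RemoveImage`. Real runtimes pull layered images over the network and verify them, which this sketch does not attempt.

```python
from __future__ import annotations

import hashlib


class ImageStore:
    """Hypothetical local image store backed by a fake registry."""

    def __init__(self, registry: dict[str, bytes]) -> None:
        self._registry = registry          # stand-in remote: reference -> image bytes
        self._local: dict[str, str] = {}   # pulled images: reference -> digest

    def pull_image(self, ref: str) -> str:
        """Fetch an image and record its content digest locally."""
        data = self._registry[ref]
        digest = "sha256:" + hashlib.sha256(data).hexdigest()
        self._local[ref] = digest
        return digest

    def list_images(self) -> list[str]:
        """Return the references of all locally stored images."""
        return sorted(self._local)

    def image_status(self, ref: str) -> str | None:
        """Return the digest of a local image, or None if it is not present."""
        return self._local.get(ref)

    def remove_image(self, ref: str) -> None:
        """Delete a local image; removing a missing image is not an error."""
        self._local.pop(ref, None)
```

A runtime would typically call `image_status` first and only pull when the image is not already present on the node.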

The Function of the Runtime Service

The runtime service is responsible for the execution and management of containers. It interacts with the underlying operating system to create and manage container processes, allocate resources, and handle container lifecycle events.
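Lifecycle handling can be pictured as a small state machine. The sketch below uses the state names from the OCI runtime specification (`creating`, `created`, `running`, `stopped`); the `Container` class and the transition table are simplifications invented for illustration and leave out details such as deletion, signals, and hooks.

```python
# Allowed next states for each lifecycle state (a simplified table).
ALLOWED = {
    "creating": {"created"},
    "created": {"running", "stopped"},
    "running": {"stopped"},
    "stopped": set(),
}


class Container:
    """Hypothetical record of a container's lifecycle state."""

    def __init__(self, container_id: str) -> None:
        self.id = container_id
        self.state = "creating"
        self.history = ["creating"]

    def transition(self, new_state: str) -> None:
        """Move to new_state, rejecting transitions the table does not allow."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"cannot go from {self.state} to {new_state}")
        self.state = new_state
        self.history.append(new_state)


if __name__ == "__main__":
    c = Container("demo")
    for state in ("created", "running", "stopped"):
        c.transition(state)
    print(c.history)
```

Rejecting invalid transitions, such as restarting a stopped container in place, is what lets higher layers reason reliably about what a container is doing.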

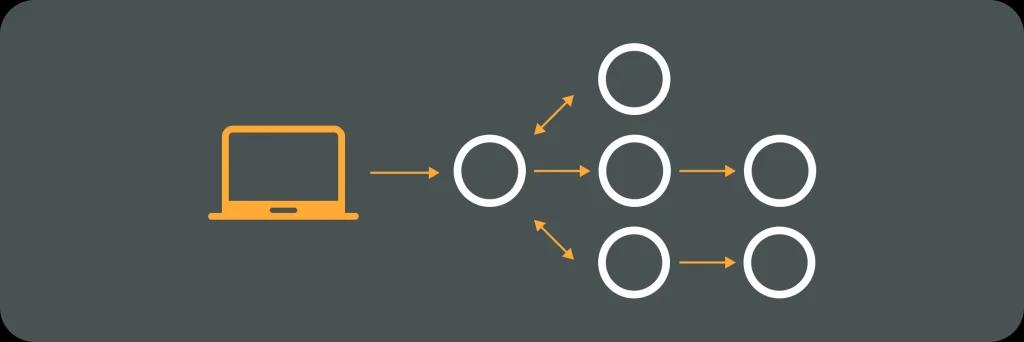

Now, let’s delve deeper into the architecture around the container runtime. In practice, the runtime is one part of a larger stack whose layers work together to enable the efficient execution of containers.

The first layer is the container engine, which provides the foundation for running containers. It is responsible for creating and managing the container environment, including isolating resources and providing networking capabilities. The container engine ensures that each container runs in its own isolated environment, preventing interference between containers.

On top of the container engine sits the container orchestration layer. Strictly speaking, this layer is not part of the runtime itself: an orchestrator such as Kubernetes drives the runtime on each node through an interface such as the CRI. The orchestration layer manages and coordinates the deployment of containers across a cluster of machines. It schedules containers onto appropriate nodes, taking into account resource availability and constraints, and it handles scaling, load balancing, and fault tolerance so that containers keep running efficiently and reliably.
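The scheduling decision described above can be sketched as a filter step followed by a scoring step. This is a deliberately simplified illustration: the `Node` type, the `schedule` function, and the "most free CPU wins" scoring rule are assumptions made for the example, and production schedulers weigh many more criteria.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    """Hypothetical view of a cluster node's spare capacity."""
    name: str
    free_cpu_millis: int
    free_memory_mib: int


def schedule(nodes: List[Node], cpu_millis: int, memory_mib: int) -> Optional[Node]:
    """Pick a node for a container, or return None if nothing fits."""
    # Filter: keep only nodes with enough spare CPU and memory.
    candidates = [
        n for n in nodes
        if n.free_cpu_millis >= cpu_millis and n.free_memory_mib >= memory_mib
    ]
    if not candidates:
        return None
    # Score: prefer the node with the most free CPU, then the most free memory.
    best = max(candidates, key=lambda n: (n.free_cpu_millis, n.free_memory_mib))
    # Reserve the resources so later placements see the updated capacity.
    best.free_cpu_millis -= cpu_millis
    best.free_memory_mib -= memory_mib
    return best
```

Returning `None` when no node fits corresponds to a container staying pending until capacity becomes available.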

Another important component of this architecture is the container registry, a service that stores container images and from which the image service pulls them. The registry acts as a centralized repository for container images, allowing developers to easily share and distribute their containers. Registries typically support versioning through image tags and can restrict access so that only authorized users can pull and deploy images.
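Images in a registry are addressed by references that combine a registry host, a repository, and a tag or digest. The sketch below splits such a reference into its parts. It is a simplification, not a full implementation of any tool's parsing rules: the `ImageRef` type and `parse_reference` function are hypothetical, and the sketch applies only the Docker-style defaults of `docker.io` for a missing registry and `latest` for a missing tag.

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    """Hypothetical parsed form of an image reference."""
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_reference(ref: str) -> ImageRef:
    """Split a reference such as host:5000/team/app:1.2 into its parts."""
    digest = None
    if "@" in ref:
        # A digest, if present, follows an "@" at the end of the reference.
        ref, digest = ref.split("@", 1)

    # The first path component is a registry host only if it looks like one.
    first, slash, rest = ref.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref

    # With the host removed, any remaining colon separates the tag.
    repository, colon, tag = remainder.rpartition(":")
    if not colon:
        repository, tag = remainder, None

    # Default to "latest" only when neither a tag nor a digest was given.
    if tag is None and digest is None:
        tag = "latest"
    return ImageRef(registry, repository, tag, digest)
```

Tags are mutable labels, while a digest identifies exact image content, so pinning by digest is the stricter form of versioning.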

Lastly, we have the container runtime management layer. This layer is responsible for monitoring and managing the overall health and performance of the container runtime environment. It collects and analyzes metrics, logs, and events from the container runtime components, providing insights into the runtime’s behavior and enabling proactive management and troubleshooting.

By understanding the various layers and components of the container runtime architecture, we can appreciate the complexity and sophistication behind the scenes. This knowledge empowers us to effectively leverage container runtimes and build robust and scalable containerized applications.

The Benefits of Using Container Runtime in DevOps

Now, let’s explore the benefits that container runtime brings to DevOps practices:

Improved Scalability and Flexibility

Container runtime enables easy scalability and flexibility. DevOps teams can efficiently deploy and scale applications using container orchestration platforms like Kubernetes. Containers provide a lightweight and isolated environment, allowing applications to be easily replicated and scaled up or down based on demand.

Imagine a scenario where a popular e-commerce website experiences a sudden surge in traffic due to a flash sale. With containers, the DevOps team can respond to this spike by scaling the application out: additional containers are started, often automatically by the orchestrator, and traffic is spread across them. Because containers typically start in seconds, the added capacity becomes available quickly, which helps maintain a smooth user experience and reduces the risk of lost revenue.
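How many containers to add can be estimated with a simple proportional rule. The sketch below uses the formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas × current metric / target metric), and clamps the result to a configured range. The function name, parameter names, and default bounds are assumptions for illustration; the real autoscaler also applies tolerances and stabilization windows that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Estimate the replica count needed to bring the metric back to target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the replica count in proportion to how far the metric is off target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Four replicas at 90% average CPU with a 50% target.
    print(desired_replicas(4, 90, 50))
```

In the flash-sale scenario, four replicas running at 90% CPU against a 50% target would be scaled to eight, and scaled back down once the load drops.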

Enhanced Isolation and Security

Container runtime facilitates strong isolation between applications and the underlying host system. Each container operates in its own isolated environment, preventing interference from other containers. This isolation also enhances security by minimizing the attack surface and reducing the impact of vulnerabilities.

Consider a scenario where a DevOps team is responsible for deploying multiple microservices within a larger application. With a container runtime, each microservice can be encapsulated within its own container, so that a crash or resource leak in one microservice is far less likely to affect the others. This isolation provides an added layer of security, since a breach is more likely to remain confined to the affected container, limiting the impact on the overall application.

Faster Deployment Times

With a container runtime, deploying applications becomes faster and more efficient. Containers can be provisioned swiftly because they share the host’s kernel and package only the application and its dependencies, rather than booting a complete guest operating system. By avoiding the need for full virtual machines, containers significantly reduce deployment time, allowing DevOps teams to deliver software more quickly.

Imagine a scenario where a DevOps team is tasked with deploying a complex application that consists of multiple components. Traditionally, this process would involve setting up and configuring individual virtual machines for each component, which could be time-consuming and prone to errors. However, with container runtime, the team can package each component into a container, complete with all its dependencies. This streamlined approach drastically reduces deployment time, enabling the team to meet tight deadlines and deliver software faster.

In conclusion, container runtime is a vital component in the DevOps toolchain. By providing a standardized and portable environment, it enables seamless application deployment, scalability, and security. Understanding the basics, different types, and architecture of container runtime equips DevOps professionals to make informed decisions when adopting containerization practices.
